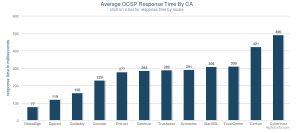

The other day I had a great conversation with Robert Duncan over at Netcraft. He showed me some reports they have made public about CRL and OCSP performance and uptime.

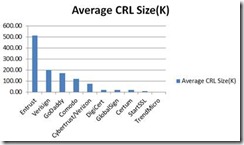

One thing I have been meaning to do is look at average CRL size across the various CAs in a more formal way; I just never got around to doing it. Conveniently, one of the Netcraft reports included a column for CRL size. So while I was waiting for a meeting to start, I decided to figure out what the average sizes were. I focused my efforts on the same CAs I include in the revocation report, and this is what I came up with:

| CA | Average CRL Size (KB) | CRL Download Time @ 56k (s) |
| --- | --- | --- |
| Entrust | 512.33 | 74.95 |
| Verisign | 200.04 | 29.26 |
| GoDaddy | 173.79 | 25.42 |
| Comodo | 120.75 | 17.66 |
| Cybertrust/Verizon | 75.00 | 10.97 |
| DigiCert | 21.66 | 3.17 |
| GlobalSign | 21.25 | 3.11 |
| Certum | 20.00 | 2.93 |
| StartSSL | 9.40 | 1.38 |
| TrendMicro | 1.00 | 0.15 |
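
For anyone who wants to check the arithmetic, here is a minimal sketch of how the download-time column can be reproduced. It assumes that "K" means 1024 bytes and that "56k" means 56,000 bits per second with no protocol overhead; those constants are my reading of the numbers, not something stated in the Netcraft report. The `download_seconds` helper and the 10 second cutoff constant are names made up for this illustration.

```python
# Assumptions: 1 K = 1024 bytes, 56k = 56,000 bits/s, no protocol overhead.
MODEM_BITS_PER_SECOND = 56_000
TIMEOUT_SECONDS = 10  # the client timeout discussed in this post

# Average CRL sizes (in K) copied from the table above.
AVERAGE_CRL_SIZE_KB = {
    "Entrust": 512.33,
    "Verisign": 200.04,
    "GoDaddy": 173.79,
    "Comodo": 120.75,
    "Cybertrust/Verizon": 75.00,
    "DigiCert": 21.66,
    "GlobalSign": 21.25,
    "Certum": 20.00,
    "StartSSL": 9.40,
    "TrendMicro": 1.00,
}


def download_seconds(size_kb, bits_per_second=MODEM_BITS_PER_SECOND):
    """Seconds needed to transfer size_kb at the given line rate."""
    return size_kb * 1024 * 8 / bits_per_second


# Download time per CA, rounded the same way as the table.
times = {ca: round(download_seconds(kb), 2) for ca, kb in AVERAGE_CRL_SIZE_KB.items()}

# CAs whose average CRL would not finish within the timeout.
over_timeout = [ca for ca, seconds in times.items() if seconds > TIMEOUT_SECONDS]

for ca, seconds in times.items():
    flag = "  (over 10s)" if ca in over_timeout else ""
    print(f"{ca:<20}{seconds:>7.2f}s{flag}")
```

Under those assumptions the top five CAs in the table land above the 10 second mark, and the rest come in under 4 seconds.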

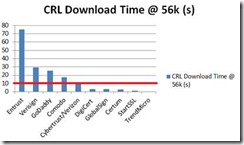

From this we can derive two charts: one for size and another for download time at 56k (the connection speed of about 6% of internet users as of 2010):

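[Charts not preserved: average CRL size by CA, and CRL download time at 56k by CA with a red line at 10s]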

I overlaid the red line at 10s because that is the timeout most clients use before they give up trying to download. Some clients will continue trying in the background so that the CRL is already cached for the next request.

This threshold is very generous; after all, what user is going to hang around for 10 seconds while a CRL is downloaded? It gets worse, though: the average chain is more than 3 certificates long, two of which need to have their status checked :/.
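
To put a number on that, here is a rough sketch that turns the question around: how large can a CRL be if everything has to arrive within the timeout? It assumes a 56,000 bits per second link, 1 K = 1024 bytes, CRLs fetched one after another, and the time budget split evenly between them. The function name `max_crl_size_kb` is hypothetical.

```python
# Assumptions: 56,000 bits/s link, 1 K = 1024 bytes, sequential downloads,
# and the time budget shared equally across the CRLs in the chain.
MODEM_BITS_PER_SECOND = 56_000


def max_crl_size_kb(budget_seconds, crl_count=1, bits_per_second=MODEM_BITS_PER_SECOND):
    """Largest average CRL size (in K) that fits crl_count downloads in the budget."""
    total_bytes = budget_seconds * bits_per_second / 8
    return total_bytes / 1024 / crl_count


print(round(max_crl_size_kb(10), 2))               # 68.36
print(round(max_crl_size_kb(10, crl_count=2), 2))  # 34.18
```

So with two certificates to check, each CRL would need to stay around 34K for a dial-up user to finish inside 10 seconds, at least under these simplified assumptions.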

This is one of the reasons we have soft-fail revocation checking. Until the Baseline Requirements were published, inclusion of OCSP references was not mandatory, and not every CA was managing their CRLs to be downloadable within that 10 second threshold.
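
As an illustration of what soft-fail means in practice, here is a minimal sketch using only the Python standard library. It is not how any particular browser implements it. The names `fetch_crl` and `allow_connection` are made up for this example, and `is_revoked` is a hypothetical callback standing in for real CRL parsing, which is out of scope here.

```python
import urllib.request

CRL_TIMEOUT_SECONDS = 10  # the threshold discussed in this post


def fetch_crl(url, timeout=CRL_TIMEOUT_SECONDS, opener=urllib.request.urlopen):
    """Return the raw CRL bytes, or None if it could not be retrieved."""
    try:
        # Note: urlopen's timeout applies to each blocking socket operation,
        # not to the total transfer time, so this is only an approximation.
        with opener(url, timeout=timeout) as response:
            return response.read()
    except OSError:
        # URLError and socket timeouts are both subclasses of OSError.
        return None


def allow_connection(url, is_revoked, opener=urllib.request.urlopen):
    """Soft-fail policy: an unavailable CRL is treated as 'not revoked'."""
    crl = fetch_crl(url, opener=opener)
    if crl is None:
        return True  # soft-fail: status unknown, let the connection proceed
    # is_revoked is a hypothetical callback that inspects the CRL bytes.
    return not is_revoked(crl)
```

A hard-fail client would return `False` in the `crl is None` branch instead, which is exactly the behavior that large, slow CRLs make impractical.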

There are a few ways CAs can manage their CRL sizes; one of the most common is simply to roll new intermediate CAs when the CRL size gets unmanageable.

There is something you should understand about the data in the above charts: just because a CRL is published doesn’t mean it represents active certificates. This is one of the reasons I had put off doing this exercise; I wanted to exclude that case by cross-referencing the signing CA with crawler data to see if active certificates were associated with each CRL.

This would exclude the cases where a CA was taken out of operation and all of the associated certificates were revoked as a precautionary exercise – this can happen.

So why did I bother posting this then? It’s just a nice illustration of why we cannot generally rely on CRLs as a form of revocation checking. In fact, this is very likely why some browsers do not bother trying to download CRLs.

All posts like this should end with a call to action (I need to do better about doing that). In this case, I would say it is for CAs to review their revocation practices and how they make certificate status available, to ensure it’s available in a fast and reliable manner.