This morning (September 3, 2025), someone posted a report to the Mozilla dev-security-policy list that exposed a serious incident: “Incident Report: Mis-issued Certificates for SAN iPAddress: 1.1.1.1 by Fina RDC 2020.” An obscure CA, Fina RDC 2020, issued certificates containing the IP address 1.1.1.1, which Cloudflare uses for encrypted DNS. These certificates should never have been issued.

Reference: https://groups.google.com/a/mozilla.org/g/dev-security-policy/c/SgwC1QsEpvc

Why this matters

1.1.1.1 is a critical bootstrap endpoint

This IP anchors encrypted DNS (DoH, DoT). A mis-issued certificate, combined with a BGP hijack, allows an attacker to intercept traffic before secure tunnels form. Some may argue that needing a BGP hijack to exploit such a certificate is a mitigation, but it is not a real one. BGP hijacks happen regularly, often with state sponsorship, and when combined with a valid certificate they turn a routing incident into a full man-in-the-middle compromise. Cloudflare documentation:

- DoT: https://developers.cloudflare.com/1.1.1.1/encryption/dns-over-tls/

- DoH: https://developers.cloudflare.com/1.1.1.1/encryption/dns-over-https/

Mainstream browsers like Chrome and Firefox typically use the domain name cloudflare-dns.com when bootstrapping DoH/DoT, so they would ignore a certificate that lists only IP:1.1.1.1. However, both Google and Cloudflare also support direct IP endpoints (https://8.8.8.8/dns-query and https://1.1.1.1/dns-query). Clients using these endpoints validate the server against the IP address, so an IP SAN certificate would be accepted, as Alex Radocea’s curl test showed: “subjectAltName: host “8.8.8.8” matched cert’s IP address!”.
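To make the name-versus-IP distinction concrete, here is a minimal sketch of how a client might match its connection target against a certificate's subjectAltName entries. It assumes RFC 6125-style rules: a target that parses as an IP address is compared only against iPAddress entries, and a hostname only against dNSName entries. `san_matches` is a hypothetical helper; the `(type, value)` tuples mirror the shape Python's `ssl.getpeercert()` returns, and wildcard handling and other details real TLS libraries implement are omitted.

```python
import ipaddress

def san_matches(target, san_entries):
    """Return True if target (hostname or IP literal) matches a SAN entry.

    san_entries: iterable of (type, value) tuples such as ("DNS", "example.com")
    or ("IP Address", "1.1.1.1"). Wildcards are deliberately not handled.
    """
    try:
        target_ip = ipaddress.ip_address(target)
    except ValueError:
        target_ip = None

    for kind, value in san_entries:
        if target_ip is not None:
            # IP literal targets are compared only against iPAddress SANs.
            if kind == "IP Address":
                try:
                    if ipaddress.ip_address(value.strip()) == target_ip:
                        return True
                except ValueError:
                    continue
        elif kind == "DNS" and value.lower() == target.lower().rstrip("."):
            # Hostname targets are compared only against dNSName SANs
            # (case-insensitive exact match in this sketch).
            return True
    return False

# A certificate listing only the IP address, like the mis-issued ones.
ip_only_cert = [("IP Address", "1.1.1.1")]
print(san_matches("1.1.1.1", ip_only_cert))             # True
print(san_matches("cloudflare-dns.com", ip_only_cert))  # False
```

This is why a client bootstrapping via cloudflare-dns.com would reject an IP-only certificate, while a client pointed at https://1.1.1.1/dns-query would accept it.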

The behavior differs slightly between providers:

- Google accepts raw-IP DoH connections and does not mandate a specific HTTP Host header.

- Cloudflare accepts raw-IP DoH connections but requires the Host: cloudflare-dns.com header.

This means raw-IP endpoints see real-world use; they are not just theoretical. If a mis-issued IP SAN cert chains to a CA trusted by the platform (as Fina RDC 2020 is in Microsoft’s store), exploitation becomes practical.
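The sketch below builds (but does not send) an RFC 8484 GET request for a raw-IP DoH endpoint, to show what such a client puts on the wire. `build_dns_query` and `raw_ip_doh_get` are hypothetical helpers; the optional Host header reflects the provider behavior described above, not a documented client API. Note that typical clients such as curl verify the server certificate against the URL's host (here, the IP), not the Host header, which is exactly where an IP SAN certificate comes into play.

```python
import base64
import struct

def build_dns_query(name, qtype=1):
    """Encode a minimal DNS query in wire format (RFC 1035).

    The ID is 0, as RFC 8484 recommends for DoH cache friendliness.
    qtype 1 is an A record; the class is always IN (1).
    """
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)  # RD flag, one question
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)

def raw_ip_doh_get(ip, name, host_header=None):
    """Build (url, headers) for an RFC 8484 GET to a raw-IP DoH endpoint."""
    # RFC 8484 GET carries the query as base64url with padding stripped.
    encoded = base64.urlsafe_b64encode(build_dns_query(name)).rstrip(b"=")
    url = f"https://{ip}/dns-query?dns={encoded.decode('ascii')}"
    headers = {"Accept": "application/dns-message"}
    if host_header:
        # Per the post, Cloudflare expects Host: cloudflare-dns.com on raw-IP
        # requests, while Google does not mandate a specific Host header.
        headers["Host"] = host_header
    return url, headers

url, headers = raw_ip_doh_get("1.1.1.1", "example.com", host_header="cloudflare-dns.com")
print(url)
print(headers)
```

For the name www.example.com, the encoded query matches the GET example given in RFC 8484, which is a convenient way to sanity-check the encoding.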

A chronic pattern of mis-issuance

The incident report cites two mis-issued certs that are still valid as of September 3, 2025:

- https://crt.sh/?id=18603461241 (issued 2025-05; SAN includes 1.1.1.1)

- https://crt.sh/?id=19749721864 (issued 2025-05; SAN includes 1.1.1.1)

This was not an isolated event. A broader search of CT logs reveals a recurring pattern of these mis-issuances from the same CA, indicating a systemic problem.

Reference: https://crt.sh/?q=1.1.1.1
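A search like the one referenced above can be scripted. This sketch assumes crt.sh's JSON output (`output=json`) and the field names `id`, `issuer_name`, `not_before`, and `not_after` that it currently returns; treat the endpoint and fields as assumptions that may change. `still_valid_by_issuer` is a hypothetical helper that keeps only certificates valid on a given date and groups them by issuer, the kind of summary that makes a repeated pattern from one CA stand out. The sample records are made up so the example runs offline.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter
from datetime import datetime

def fetch_crtsh(query):
    """Fetch crt.sh search results as a list of dicts (live network call)."""
    url = "https://crt.sh/?" + urllib.parse.urlencode({"q": query, "output": "json"})
    with urllib.request.urlopen(url, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))

def still_valid_by_issuer(records, as_of):
    """Count records whose validity window covers `as_of`, grouped by issuer."""
    counts = Counter()
    seen_ids = set()
    for rec in records:
        if rec["id"] in seen_ids:  # skip repeated rows for the same crt.sh entry
            continue
        seen_ids.add(rec["id"])
        not_before = datetime.fromisoformat(rec["not_before"])
        not_after = datetime.fromisoformat(rec["not_after"])
        if not_before <= as_of <= not_after:
            counts[rec["issuer_name"]] += 1
    return counts

# Made-up records; use fetch_crtsh("1.1.1.1") for live data.
sample = [
    {"id": 101, "issuer_name": "Example Issuer A",
     "not_before": "2025-05-10T00:00:00", "not_after": "2026-05-10T00:00:00"},
    {"id": 102, "issuer_name": "Example Issuer A",
     "not_before": "2025-05-20T00:00:00", "not_after": "2026-05-20T00:00:00"},
    {"id": 103, "issuer_name": "Example Issuer B",
     "not_before": "2025-08-01T00:00:00", "not_after": "2025-08-07T00:00:00"},
]
print(still_valid_by_issuer(sample, datetime(2025, 9, 3)))
```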

CT monitoring failed to trigger remediation

Cloudflare runs its own CT monitoring system: https://developers.cloudflare.com/ssl/ct/monitor/

These certs surfaced in crt.sh, so Cloudflare’s monitoring should have flagged them. Instead, there was no visible remediation.

What’s going wrong?

- Are IP SANs being monitored effectively enough to detect a pattern?

- Is there a proper pipeline from alerts to action?

- Why were these certs still valid after months?
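One way to tighten the path from alert to action is to let the watch rule itself decide severity. The sketch below is hypothetical throughout: the protected IP set, the expected-issuer allowlist, the `Alert` record, and `evaluate` are illustrative names, not a description of Cloudflare's system. A certificate that covers a protected IP but comes from an unexpected issuer is escalated rather than merely logged.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical watch-rule configuration, not any vendor's real settings.
PROTECTED_IPS = {ipaddress.ip_address("1.1.1.1")}
EXPECTED_ISSUERS = {"Example Expected CA"}  # placeholder allowlist

@dataclass
class Alert:
    cert_id: str
    issuer: str
    matched_ips: list
    action: str

def evaluate(cert_id, issuer, ip_sans):
    """Return an Alert if a certificate covers a protected IP, else None."""
    matched = sorted(s for s in ip_sans if ipaddress.ip_address(s) in PROTECTED_IPS)
    if not matched:
        return None
    if issuer in EXPECTED_ISSUERS:
        # Expected issuer: keep a record for the audit trail only.
        return Alert(cert_id, issuer, matched, action="log")
    # Unexpected issuer for a protected IP: escalate to a human and start a
    # revocation request with the CA instead of only recording the event.
    return Alert(cert_id, issuer, matched, action="escalate")

alert = evaluate("example-cert-1", "Fina RDC 2020", ["1.1.1.1"])
print(alert.action)  # escalate
```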

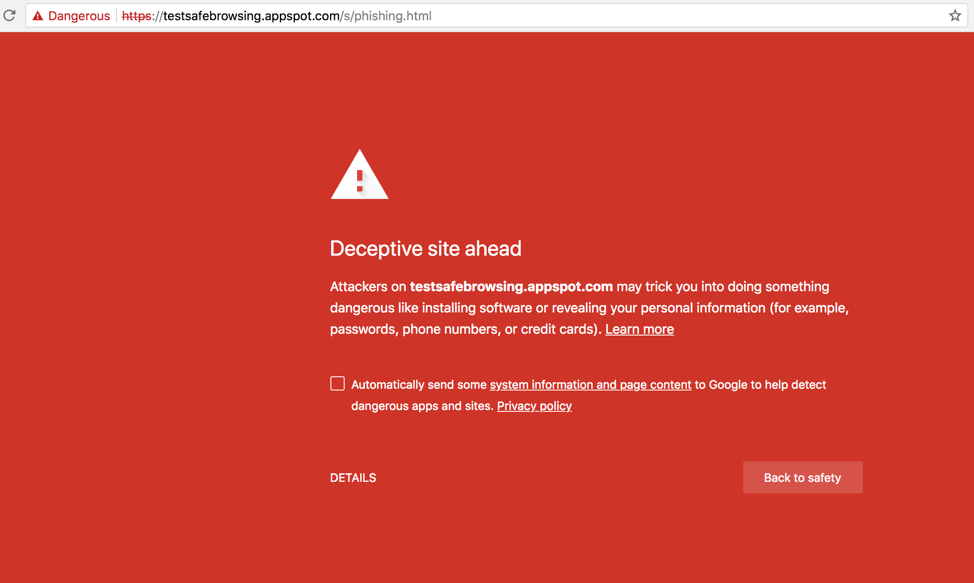

It is easy to focus on where CT monitoring fell short in this case, and clearly it did. But we should not lose sight of its value. Without CT, we would not have visibility into many of these mis-issuances, and we do not know how many attackers were dissuaded simply because their activity would be logged. CT is not perfect, but this incident reinforces why it is worth doubling down: improving implementations, making monitoring easier, and investing in features like static CT and verifiable indices that make it easier to use this fantastic resource.

What this means for users

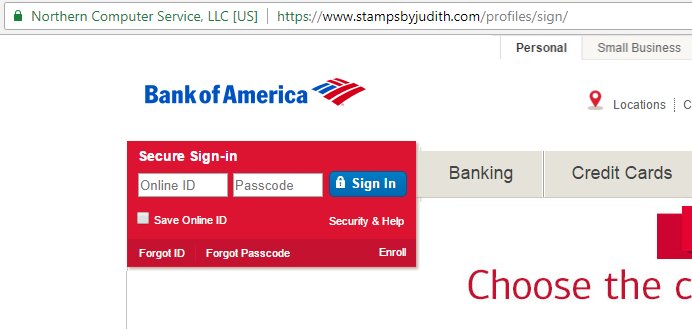

The impact is scoped to users of browsers and applications that rely on the Windows operating system’s root store, such as Microsoft Edge. Browsers like Chrome and Firefox manage their own root stores, which do not include Fina RDC 2020. However, because both Google and Cloudflare accept raw-IP DoH endpoints, the risk extends beyond pure edge cases.

Consider an affected user at a public hotspot. If a BGP hijack redirects their traffic and the client connects directly to 1.1.1.1, their system checks the certificate and sees a valid, Cloudflare-like certificate. The attacker succeeds not just in breaking DNS, but in controlling secure web sessions.

This enables:

- Silent proxying of all traffic

- Token and session theft via impersonation

- Decryption of DoH queries (for raw-IP clients)

- In-flight alteration of pages and updates

This is the full man-in-the-middle playbook.

A tiny CA with outsized impact

As of today, certificate issuance across the WebPKI is highly skewed:

| CA Owner | % of Unexpired Pre-Certificates |
| --- | --- |
| Internet Security Research Group | 46.52% |
| DigiCert | 22.19% |
| Sectigo | 11.89% |
| Google Trust Services | 8.88% |
| GoDaddy | 5.77% |
| Microsoft Corporation | 3.45% |
| IdenTrust | 0.63% |
| All others | <0.70% |

Reference: https://crt.sh/cert-populations and https://docs.google.com/spreadsheets/d/1gshICFyR6dtql-oB9uogKEvb2HarjEVLkrqGxYzb1C4/
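A quick bit of arithmetic on the table shows how concentrated issuance is. This sketch only sums the percentages listed above; it introduces no new data. The seven named owners cover about 99.33% of unexpired pre-certificates, leaving roughly 0.67% for every other CA owner combined, consistent with the "<0.70%" row.

```python
# Shares of unexpired pre-certificates, copied from the table above.
shares = {
    "Internet Security Research Group": 46.52,
    "DigiCert": 22.19,
    "Sectigo": 11.89,
    "Google Trust Services": 8.88,
    "GoDaddy": 5.77,
    "Microsoft Corporation": 3.45,
    "IdenTrust": 0.63,
}

top_three = sum(sorted(shares.values(), reverse=True)[:3])
listed = sum(shares.values())
long_tail = 100 - listed

print(f"Top three owners: {top_three:.2f}%")  # Top three owners: 80.60%
print(f"Seven listed owners: {listed:.2f}%")  # Seven listed owners: 99.33%
print(f"Everyone else: {long_tail:.2f}%")     # Everyone else: 0.67%
```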

Fina RDC 2020 has ~201 unexpired certs, accounting for <0.00002% of issuance. Yet Microsoft trusts it, while Chrome, Firefox, and Safari do not. That asymmetry leaves Edge and Windows users vulnerable.

Fina RDC 2020 is also listed in the EU Trusted List (EUTL) as authorized to issue Qualified Website Authentication Certificates (QWACs): https://eidas.ec.europa.eu/efda/trust-services/browse/eidas/tls/tl/HR/tsp/1/service/14. While no mainstream browsers currently import the EUTL for domain TLS, eIDAS 2.0 risks forcing them to. That means what is today a Microsoft-specific trust asymmetry could tomorrow be a regulatory mandate across all browsers.

Moreover, Microsoft requires that CAs provide “broad value to Windows customers and the internet community.”

Reference: https://learn.microsoft.com/en-us/security/trusted-root/program-requirements

Fina clearly does not meet that threshold.

Certificate lifespan context

In April 2025, the CA/Browser Forum approved ballot SC-081v3, which limits TLS certificate lifetimes to 47 days by March 2029:

- March 15, 2026: max 200 days

- March 15, 2027: max 100 days

- March 15, 2029: max 47 days
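The phase-in can be expressed as a lookup keyed on issuance date. This sketch encodes only the three SC-081v3 steps listed above, plus the 398-day maximum in force before March 15, 2026; `max_validity_days` is a hypothetical helper, and the ballot text remains the authority on edge cases.

```python
from datetime import date

# SC-081v3 steps: (first issuance date the limit applies to, max validity in days),
# ordered newest first so the first match wins.
SC081_SCHEDULE = [
    (date(2029, 3, 15), 47),
    (date(2027, 3, 15), 100),
    (date(2026, 3, 15), 200),
]
PRE_2026_MAX_DAYS = 398  # limit in force before the first SC-081v3 step

def max_validity_days(issued):
    """Return the maximum permitted validity, in days, for a cert issued on `issued`."""
    for start, days in SC081_SCHEDULE:
        if issued >= start:
            return days
    return PRE_2026_MAX_DAYS

print(max_validity_days(date(2025, 5, 1)))   # 398
print(max_validity_days(date(2026, 3, 15)))  # 200
print(max_validity_days(date(2029, 6, 1)))   # 47
```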

IP SAN certs from some CAs, however, already have much shorter lifetimes:

- Google Trust Services (via ACME) issues these certs with a maximum lifetime of 10 days: https://pki.goog/faq/

- Let’s Encrypt began issuing IP certs in July 2025, valid for ~6 days: https://letsencrypt.org/2025/07/01/issuing-our-first-ip-address-certificate and https://letsencrypt.org/2025/01/16/6-day-and-ip-certs

The missed opportunity: if policy required short-lived IP certs, these certificates would have already expired, reducing exposure from months to days. The Baseline Requirements currently do not differentiate IP SAN certs from DNS names. If they did, this kind of exposure could be avoided.
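Here is what a differentiated rule might look like as a linter check. No such requirement exists today; the 10-day cap simply borrows the Google Trust Services figure cited above, and `has_ip_san` and `violates_ip_lifetime_cap` are hypothetical helpers. For brevity, SAN values are plain strings, and the dates are illustrative, not the actual validity periods of the mis-issued certs.

```python
import ipaddress
from datetime import datetime, timedelta

# Hypothetical policy: the Baseline Requirements contain no such rule today.
IP_SAN_MAX_LIFETIME = timedelta(days=10)

def has_ip_san(san_values):
    """True if any SAN value parses as an IPv4 or IPv6 address."""
    for value in san_values:
        try:
            ipaddress.ip_address(value)
            return True
        except ValueError:
            pass
    return False

def violates_ip_lifetime_cap(san_values, not_before, not_after):
    """Flag certificates with an IP SAN whose validity exceeds the hypothetical cap."""
    return has_ip_san(san_values) and (not_after - not_before) > IP_SAN_MAX_LIFETIME

# Illustrative dates: a year-long IP cert versus a six-day one.
long_lived = violates_ip_lifetime_cap(["1.1.1.1"], datetime(2025, 5, 1), datetime(2026, 5, 1))
short_lived = violates_ip_lifetime_cap(["1.1.1.1"], datetime(2025, 9, 1), datetime(2025, 9, 7))
print(long_lived, short_lived)  # True False
```

Under a rule like this, a certificate issued in May could not still be valid in September.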

On intent and attribution

Attribution in WebPKI failures is notoriously difficult. The noisy, repeated issuance pattern suggests this may have been a systemic accident rather than a targeted attack. Still, intent is irrelevant. A certificate that shouldn’t exist was issued and left valid for months. Once issued, there is no way to tell what a certificate and its key were used for. The governance failure is what matters.

From an attacker’s perspective, this is even more concerning. CT logs can be mined as a reconnaissance tool to identify the weakest CAs – those with a track record of mis-issuance. An adversary doesn’t need to compromise a major CA; they only need to find a small one with poor controls, like Fina RDC 2020, that is still widely trusted in some ecosystems. That makes weak governance itself an attack surface.

Reference: https://unmitigatedrisk.com/?p=850

Root governance failures

We need risk-aware, continuous root governance:

- Blast radius control for low-volume CAs

- Tiered validation for high-value targets (like IP SAN certificates)

- Real CT monitoring that triggers remediation

- Cross-vendor accountability (one vendor’s trust shouldn’t be universal if others reject it)
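Cross-vendor accountability can be made mechanical: a root trusted by exactly one vendor is a candidate for extra scrutiny or constraints. This sketch assumes root-store memberships are available as simple sets (in practice, CCADB data would be a natural source). Apart from Fina RDC 2020 being trusted by Microsoft but not by Chrome, Firefox, or Safari, as described above, the entries are placeholders, and `single_vendor_roots` is a hypothetical helper.

```python
# Illustrative memberships. Only the Fina RDC 2020 placement comes from this
# post; "Example Root A" and "Example Root B" are placeholders.
root_stores = {
    "Microsoft": {"Fina RDC 2020", "Example Root A", "Example Root B"},
    "Chrome": {"Example Root A", "Example Root B"},
    "Mozilla": {"Example Root A", "Example Root B"},
    "Apple": {"Example Root A"},
}

def single_vendor_roots(stores):
    """Map each vendor to the roots that no other vendor in `stores` trusts."""
    result = {}
    for vendor, roots in stores.items():
        others = set().union(*(r for v, r in stores.items() if v != vendor))
        result[vendor] = roots - others
    return result

print(single_vendor_roots(root_stores)["Microsoft"])  # {'Fina RDC 2020'}
```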

I wrote about this governance model here: https://unmitigatedrisk.com/?p=923

A broader pattern: software and the WebPKI

This incident is not isolated. It follows earlier failures:

DigiNotar (2011, Netherlands): Compromised by attackers who issued hundreds of rogue certificates, including for Google, that were used in live MITM surveillance against Iranian users. The CA collapsed and was distrusted by all major browsers.

Reference: https://unmitigatedrisk.com/?p=850

TÜRKTRUST (2013, Turkey): Issued an intermediate CA certificate later used to impersonate Google domains.

Reference: https://unmitigatedrisk.com/?p=850

ANSSI (2013, France): Government CA issued Google-impersonation certs. Browsers blocked them.

Microsoft code-signing trust (2025): I documented how poor governance turned Microsoft code signing into a subversion toolchain: https://unmitigatedrisk.com/?p=1085

The pattern is clear: long-lived trust, weak oversight, repeated governance failures. And notably, most of the major real-world MITM events trace back to European CAs. That history underscores how dangerous it is when regulatory frameworks like eIDAS 2.0 mandate trust regardless of technical or governance quality.

Why eIDAS 2.0 makes it worse

The EU’s provisional agreement on eIDAS 2.0 (November 2023) requires browsers to trust CAs that EU member states approve to issue QWACs (Qualified Website Authentication Certificates).

References:

- EU text: https://www.europarl.europa.eu/cmsdata/278103/eIDAS-4th-column-extract.pdf

- My notes on this: https://docs.google.com/document/d/1sGzaE9QTs-qorr4BTqKAe0AaGKjt5GagyEevDoavWU0/

This comes as EV certificates have declined sharply: https://merkle.town folds EV into the “OV” slice of its charts, and that entire slice is only ~4% of issuance. EV failed because it misled users. The Stripe-name example shows how easily: https://arstechnica.com/information-technology/2017/12/nope-this-isnt-the-https-validated-stripe-website-you-think-it-is/

Browsers are negotiating with the EU to separate QWAC trust from normal trust. For now, however, eIDAS still forces browsers to trust potentially weak or misbehaving CAs. This repeats the Microsoft problem, but globally and enforced by law.

And the oversight problem runs deeper. Under eIDAS, Conformity Assessment Bodies (CABs) are responsible for auditing and certifying qualified trust service providers. Auditors should have caught systemic mis-issuance like the 1.1.1.1 case, and so should CABs. Yet in practice many CABs are general IT auditors with limited PKI depth. If they miss problems, the entire EU framework ends up institutionalizing weak governance rather than correcting it.

A parallel exists in other ecosystems: under WebTrust audits, roughly 3% of issued certificates are supposed to be reviewed. Because CAs select the audit samples, one could in theory envision a CA trying to hide bad practices. The more likely explanation is that auditors simply missed it. Over enough audit cycles, and barring deliberate concealment, sampling should have brought at least one of these mis-issued certificates into scope. That points to an auditing and CAB oversight gap, not just a rogue CA.

The bigger picture

A few CAs dominate WebPKI. The long tail, though small, can create massive risk if trusted. Microsoft’s root store is broader and more passive than others. The critical issue is the number of trusted organizations, each representing a potential point of failure. Microsoft’s own list of Trusted Root Program participants includes well over one hundred CAs from dozens of countries, creating a vast and difficult-to-audit trust surface.

Reference: https://ccadb-public.secure.force.com/microsoft/IncludedCACertificateReportForMSFT
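A back-of-the-envelope model shows why the number of trusted organizations matters. Assuming, purely for illustration, that each of N trusted CAs independently has a small chance p of a serious failure in a given year, the chance that at least one fails is 1 - (1 - p)^N, which grows quickly with N. The 1% rate below is hypothetical, not a measured figure, and real failures are not independent; the point is the shape of the curve, not the exact values.

```python
def p_any_failure(num_cas, p_each):
    """Probability that at least one of num_cas independent CAs fails in a period."""
    return 1 - (1 - p_each) ** num_cas

# Hypothetical per-CA annual failure rate of 1%.
for n in (10, 50, 100, 150):
    print(n, round(p_any_failure(n, 0.01), 3))
```

Under these made-up numbers, a store with around a hundred roots is more likely than not to contain at least one failing CA in a given year.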

Forced or passive trust is a recipe for systemic risk.

Microsoft’s root store decisions expose its users to risks that Chrome, Firefox, and Safari users are shielded from. Yet Microsoft participates minimally in WebPKI governance. If the company wants to keep a broader and more permissive root store, it should fund the work needed to oversee it: staffing and empowering a credible root program, actively participating in CA/Browser Forum policy, and automating the monitoring of Certificate Transparency logs for misuse. Without that investment, Microsoft is effectively subsidizing systemic risk for the rest of the web.

Thanks to Alex Radocea for double-checking DoH/DoT client behavior in support of this post.