There has been a ton of chatter on the internet the last few days about this MSRC incident; it is an example of so many things gone wrong it’s just not funny anymore.

In this post I want to document the aspects of this issue that relate to the Terminal Services Licensing protocol.

Let’s start with the background story. Microsoft introduced a licensing mechanism for Terminal Services that it used to enforce Client Access Licenses (CALs) for the product. The protocol used in this mechanism is defined in [MS-RDPELE].

A quick review of this document shows the model goes something like this:

- Microsoft operates an X.509 Certificate Authority (CA).

- Enterprise customers who use Terminal Services also operate a CA; the Terminal Services team, however, calls it a “License server”.

- Every Enterprise “License server” is made a subordinate of the Microsoft Issuing CA.

- The license server issues “Client Access Licenses (CALs)”, also known as X.509 certificates.

By itself this isn’t actually a bad design. Short(ish)-lived certificates are used so the enterprise has to stay current on its licenses, and it uses a bunch of existing technology so that it can be developed quickly and its performance characteristics are known.

The devil however is in the details; let’s list a few of those details:

- The Issuing CA is made a subordinate of one of the “Microsoft Root CAs”.

- The Policy CA (“Microsoft Enforced Licensing Intermediate PCA”) on the Microsoft side was issued “Dec 10 01:55:35 2009 GMT”

- All of the certificates in the chain were signed using RSAwithMD5.

- The CAs were likely a standard Microsoft Certificate Authority (the certificate was issued with a Template Name of “SubCA”).

- The CAs were not using random serial numbers (the serial number of one was 7038).

- The certificates the license server issued were good for:

  - License Server Verification (1.3.6.1.4.1.311.10.6.2)

  - Key Pack Licenses (1.3.6.1.4.1.311.10.6.1)

  - Code Signing (1.3.6.1.5.5.7.3.3)

- There were no name constraints in the “License server” certificate restricting the names that it could make assertions about.

- The URLs referred to in the certificates (URLs to CRLs and issuer certificates) were invalid and may have been invalid for a long time (it looks like they may not have been published since 2009).
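The checklist above can be sketched as a small audit over a simplified certificate record. This is only an illustration: `CertRecord` and `audit` are hypothetical names made up for this post, not a real X.509 parser, and the sample record folds several details from the list into one made-up certificate.

```python
from dataclasses import dataclass, field

CODE_SIGNING = "1.3.6.1.5.5.7.3.3"


@dataclass
class CertRecord:
    """Hypothetical, simplified view of a CA certificate (not a real parser)."""
    subject: str
    signature_algorithm: str
    serial_number: int
    is_ca: bool
    eku_oids: list = field(default_factory=list)
    name_constraints: list = field(default_factory=list)
    crl_reachable: bool = True


def audit(cert: CertRecord) -> list:
    """Return a list of human-readable findings for one certificate."""
    findings = []
    if "md5" in cert.signature_algorithm.lower():
        findings.append("signed with MD5")
    # A small serial number hints at a sequential counter; this threshold
    # is an arbitrary heuristic chosen for the sketch.
    if cert.serial_number < 2**32:
        findings.append("serial number looks sequential")
    if cert.is_ca and CODE_SIGNING in cert.eku_oids:
        findings.append("CA may issue code signing certificates")
    if cert.is_ca and not cert.name_constraints:
        findings.append("no name constraints")
    if not cert.crl_reachable:
        findings.append("CRL URL is not reachable")
    return findings


# Illustrative record: the subject is a placeholder, the other values
# are taken from the list of details above.
license_server = CertRecord(
    subject="Example License Server",
    signature_algorithm="md5WithRSAEncryption",
    serial_number=7038,
    is_ca=True,
    eku_oids=["1.3.6.1.4.1.311.10.6.2", "1.3.6.1.4.1.311.10.6.1", CODE_SIGNING],
    name_constraints=[],
    crl_reachable=False,
)

for finding in audit(license_server):
    print("-", finding)
```

Any one of these findings is worth a second look in a CA certificate; the incident involved all of them at once.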

OK, let’s explore the first detail for a moment, the chaining to a Microsoft Root CA. They needed a Root CA they controlled to ensure they could prevent people from minting their own licenses. They probably decided to re-use the product Root Certificate Authority so that they could re-use CertVerifyCertificateChainPolicy and its CERT_CHAIN_POLICY_MICROSOFT_ROOT policy. No one likes maintaining an array of hashes that has to be kept up to date on all the machines doing the verification. This decision also meant that they could have those who operated the existing CAs manage this new one for them.
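For contrast, here is roughly what the “array of hashes” approach looks like. This is a sketch under assumptions, not how Terminal Services actually works: the byte strings are placeholders standing in for DER-encoded certificates, and `PINNED_ROOTS` and `chains_to_pinned_root` are hypothetical names.

```python
import hashlib


def thumbprint(der_bytes: bytes) -> str:
    """Hex SHA-256 digest of a certificate's encoded bytes."""
    return hashlib.sha256(der_bytes).hexdigest()


# Placeholder bytes standing in for DER-encoded root certificates.
LICENSING_ROOT_DER = b"placeholder: dedicated licensing root"
PRODUCT_ROOT_DER = b"placeholder: production product root"

# The allow-list every verifying machine would have to keep up to date.
PINNED_ROOTS = {thumbprint(LICENSING_ROOT_DER)}


def chains_to_pinned_root(chain: list) -> bool:
    """chain is ordered leaf first, root last; only the root is checked here."""
    return bool(chain) and thumbprint(chain[-1]) in PINNED_ROOTS


print(chains_to_pinned_root([b"leaf", LICENSING_ROOT_DER]))
print(chains_to_pinned_root([b"leaf", PRODUCT_ROOT_DER]))
```

The cost is the maintenance burden described above; the benefit is that the licensing hierarchy would not share a trust anchor with product code signing.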

This was also the largest mistake they made. The decision to operationally have this CA chained under the production product roots made this system an attack vector for the bad guys. It made it more valuable than any third-party CA.

They made this problem worse by having every license server be a subordinate of this CA; these license servers were storing their keys in software. The only thing protecting those keys was DPAPI, and that’s not much protection.

They made the problem even worse by making each of those CAs capable of issuing code signing certificates.

That’s right, every single enterprise user of Microsoft Terminal Services on the planet had a CA key and certificate that could issue as many code signing certificates as they wanted, for any name they wanted. Yes, even the Microsoft name!

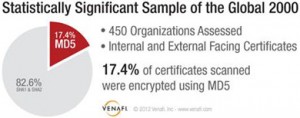

It doesn’t end there though. The certificates were signed using RSAwithMD5, and MD5 has been known to be susceptible to collision attacks for a long time; those attacks were shown to be practical by December 2008, before the CA was made.
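To see why a hash collision matters for signatures, here is a toy sketch. It is not the real attack: the digest is MD5 truncated to 24 bits so that a plain birthday search finds a collision in moments, and the RSA key is a tiny textbook key built from two Mersenne primes. Attacks on full MD5 rely on chosen-prefix collision techniques rather than brute force. All function names here are made up for the illustration.

```python
import hashlib

# Toy RSA key built from two known Mersenne primes; far too small for real use.
P, Q = 2**31 - 1, 2**61 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))


def weak_digest(data: bytes) -> int:
    """MD5 truncated to 24 bits so a collision can be found by brute force."""
    return int.from_bytes(hashlib.md5(data).digest()[:3], "big")


def sign(data: bytes) -> int:
    """Textbook RSA signature over the (weak) digest, with no padding."""
    return pow(weak_digest(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    return pow(signature, E, N) == weak_digest(data)


def find_collision():
    """Birthday search: return two different messages with the same digest."""
    seen = {}
    counter = 0
    while True:
        message = b"certificate-%d" % counter
        digest = weak_digest(message)
        if digest in seen:
            return seen[digest], message
        seen[digest] = message
        counter += 1


benign, rogue = find_collision()
signature = sign(benign)  # the CA only ever signs the benign message
print(benign != rogue, verify(rogue, signature))
```

The signer never saw the second message, yet its signature verifies for it, because the signature covers only the digest. That is the property a collision attack on a certificate exploits.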

Also, these certificates were likely issued using the Microsoft Server 2003 Certificate Authority (remember this was 2009), as I believe that the Server 2008 CA used random serial numbers by default (I am not positive though; it’s been a while).
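Serial numbers matter here because a collision attack on a certificate needs the attacker to predict the fields the CA will fill in, and a counter is easy to predict. A minimal sketch of the difference, with hypothetical names and an assumed 64-bit size for the random serial:

```python
import secrets


class SequentialSerials:
    """Counter-style allocator: each serial is the previous one plus one."""

    def __init__(self, start: int) -> None:
        self._next = start

    def issue(self) -> int:
        serial = self._next
        self._next += 1
        return serial


def random_serial(bits: int = 64) -> int:
    """Serial drawn from a CSPRNG; 64 bits is an assumption for this sketch."""
    return secrets.randbits(bits)


ca = SequentialSerials(start=7038)
observed = ca.issue()       # attacker sees a certificate with this serial
guess = observed + 1        # and predicts the next one
print(guess == ca.issue())  # the guess is right for a counter

# Two random serials almost never match, so there is nothing to predict.
print(random_serial() == random_serial())
```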

It also seems, based on the file names in the certificates’ AIAs, that the CRLs had not been updated since the CA went live and maybe were never even published. This signals that the CA was not being managed at all since then.

Then there is the fact that even though Name Constraints were supported by the Windows platform at the time this solution was created, they chose not to include them in these certificates. Doing so would have further restricted the value to the attacker.
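The effect of the missing constraints can be shown with a toy path check. This is a deliberately simplified model: real validation follows RFC 5280 and the platform's own rules, while this sketch compares subject strings exactly and treats an empty EKU set on a CA as “any purpose”. The `Cert` class, the `allowed` function and all names in the chain are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

CODE_SIGNING = "1.3.6.1.5.5.7.3.3"


@dataclass
class Cert:
    """Toy certificate: just the fields this sketch needs."""
    subject: str
    issuer: Optional["Cert"]                     # None marks the trusted root
    ekus: frozenset = frozenset()                # empty on a CA: any purpose
    permitted_names: Optional[frozenset] = None  # None means unconstrained


def allowed(leaf: Cert, purpose: str) -> bool:
    """Walk from the leaf to the root, applying EKU and name checks."""
    if purpose not in leaf.ekus:
        return False
    ca = leaf.issuer
    while ca is not None:
        # Every CA on the path must allow the purpose (or be unrestricted).
        if ca.ekus and purpose not in ca.ekus:
            return False
        # A constrained CA may only vouch for names on its permitted list.
        if ca.permitted_names is not None and leaf.subject not in ca.permitted_names:
            return False
        ca = ca.issuer
    return True


signing = frozenset({CODE_SIGNING})
root = Cert("Toy Root", issuer=None)
pca = Cert("Toy Licensing PCA", issuer=root)
unconstrained = Cert("Customer License Server", issuer=pca, ekus=signing)
constrained = Cert("Customer License Server", issuer=pca, ekus=signing,
                   permitted_names=frozenset({"customer.example"}))

forged = Cert("Microsoft", issuer=unconstrained, ekus=signing)
blocked = Cert("Microsoft", issuer=constrained, ekus=signing)

print(allowed(forged, CODE_SIGNING))   # unconstrained CA: accepted
print(allowed(blocked, CODE_SIGNING))  # name-constrained CA: rejected
```

In this model, either dropping Code Signing from the license server's certificate or adding a name constraint would have been enough to stop the forged certificate.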

And finally there is the fact that MD5 has been prohibited from use in Root Programs for some time. The Microsoft Root Program required third-party CAs not to have valid certificates that used MD5 after January 15, 2009. The CA/Browser Forum has been a bit more lenient in the Baseline Requirements by allowing their use until December 2010. Now, it’s fair to say the Microsoft Roots are not “members” of the root program, but one would think they would have met the same requirements.

So how did all of this happen? Honestly, I don’t know.

The Security Development Lifecycle (SDL) used at Microsoft was in full force in 2008 when this appears to have been put together; it should have been caught via that process, and it should also have been caught by a number of other checks and balances.

It wasn’t though and here we are.

P.S. Thanks to Brad Hill, Marsh Ray and Nico Williams for chatting about this today 🙂

Additional Resources

- Microsoft Update and The Nightmare Scenario

- Microsoft Certificate Was Used to Sign “Flame” Malware

- How old is Flame

- Security Advisory 2718704: Update to Phased Mitigation Strategy