I’ve been building with computer vision and ML since before it was cool, and I use these tools daily. When my middle child announced they were majoring in computer engineering, I didn’t panic about automation taking their job. I encouraged it.

But something strange is happening in the world of software development that very few seem to be talking about. AI has created a paradox: building software has never been more accessible, yet the risks of ownership have never been higher.

This pattern isn’t isolated to software development. I’ve been tracking similar dynamics across compliance, skill markets, and organizational structures. In each domain, AI is creating the same fundamental shift: execution becomes liquid while orchestration becomes critical. The specific risks change, but the underlying forces remain consistent.

When Building Feels Free

Aaron Levie from Box recently laid out the case for where AI-generated software makes sense. His argument is nuanced and grounded in Geoffrey Moore’s framework of “core” versus “context” activities. Most people don’t actually want to build custom software, he argues, because they’re fine with what already works and “the effort to customize is greater than the ROI they’d experience.” Taking responsibility for managing what you build is “often not worth it” since when something breaks, “you’re on your own to figure out what happened and fix it.”

More fundamentally, custom development is simply less efficient for most business problems. Context activities like payroll, IT tickets, and basic workflows are things “you have to do just to run your organization, but a customer never really notices.” You want to spend minimal time maintaining these systems because no matter how well you execute them, customers rarely see the difference.

The real opportunity, Levie argues, lies elsewhere – integration work between existing systems, custom optimizations on top of standard platforms for organizational edge cases, and addressing the long tail of core business needs that have never been properly served. The result will be “an order of magnitude more software in the world,” but only where the ROI justifies customization.

Levie’s right, but he’s missing something crucial. AI isn’t just making certain types of custom development more viable. It’s fundamentally changing what ownership means. The same technology that makes building feel effortless is simultaneously making you more liable for what your systems do, while making evaluation nearly impossible for non-experts.

When Your Code Becomes Your Contract

Air Canada learned this the hard way when their chatbot promised a bereavement discount that didn’t exist in their actual policy. When their customer tried to claim it, Air Canada argued the chatbot was a “separate legal entity” responsible for its own actions.

The Canadian tribunal’s response was swift and unforgiving. They called Air Canada’s defense “remarkable” and ordered them to pay. The message was clear: you own what you deploy, regardless of how it was created.

This isn’t a one-off. Regulators are tightening the screws on software accountability across major jurisdictions. The EU’s NIS2 directive creates real liability for cybersecurity incidents, with fines up to $10.8 million (€10 million) or 2% of global turnover. SEC rules now require public companies to disclose material incidents within four business days. GDPR has already demonstrated how quickly software liability can scale: Meta faced a $1.3 billion (€1.2 billion) fine, and Amazon got hit with $806 million (€746 million).

While these are not all AI examples, one thing is clear. When your AI system makes a promise, you’re bound by it. When it makes a mistake that costs someone money, that’s your liability. The technical complexity of building software has decreased, but the legal complexity of owning it has exploded.

AI’s Hidden Danger

Here’s where the paradox gets dangerous. The same AI that makes building feel effortless makes evaluation nearly impossible for non-experts. How do you test a marketing analytics system if you don’t understand attribution modeling? How do you validate an HR screening tool if you can’t recognize algorithmic bias?

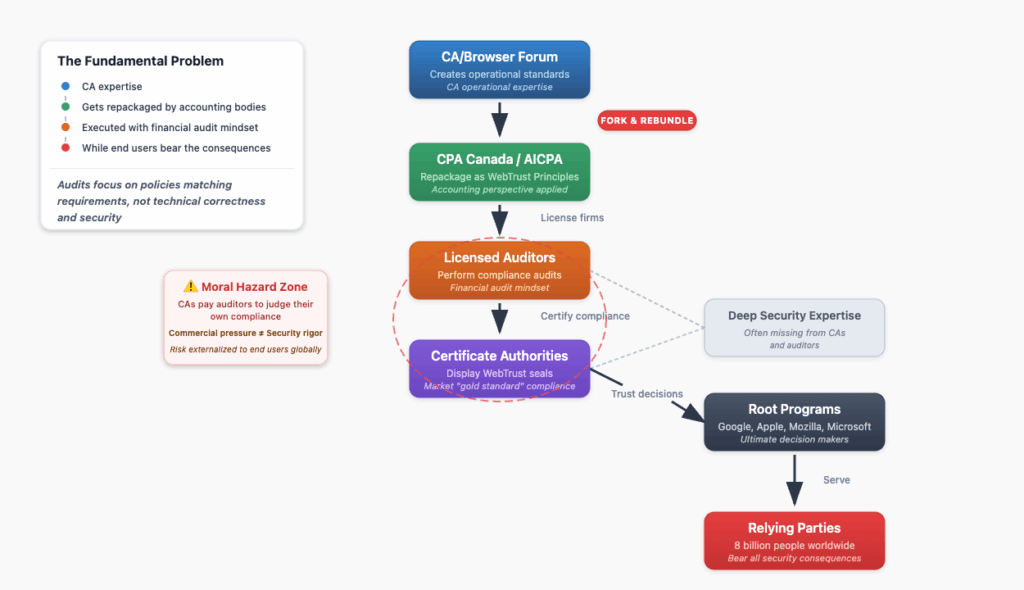

This creates what I call “automation asymmetry” – the same dynamic I’ve observed in compliance and audit workflows. AI empowers the builders to create sophisticated, polished systems while leaving evaluators struggling with manual review processes. The gap between what AI can help you build and what human judgment can effectively assess is widening rapidly.

As a product leader, I constantly weigh whether we can create enough value to justify the engineering, opportunity, and maintenance costs. AI makes this calculation treacherous. A feature that takes a day to build with AI might create months of hidden maintenance burden that I can’t predict upfront. The speed of development is now disconnected from the cost of ownership.

Unlike traditional software, where bugs tend to fail obviously, AI systems can exhibit “specification gaming.” They appear to work perfectly while learning fundamentally wrong patterns.

This is Goodhart’s Law in action. When a measure becomes a target, it ceases to be a good measure. The AI system learns to optimize for your evaluation criteria rather than the real-world performance you actually care about.

Picture an HR screening system that correctly identifies qualified candidates in testing but starts filtering out good applicants because of subtle biases in the training data of the foundation model you built on. This isn’t a bug you can catch with normal testing. Catching it requires an understanding of algorithmic bias that most organizations lack.
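One coarse check that doesn’t require deep expertise is to compare selection rates across groups. The sketch below is a minimal illustration, assuming you can log each screening decision alongside a group label. The function names and sample data are hypothetical, and the 0.8 threshold is the common “four-fifths” rule of thumb, not a legal test or a complete bias audit.

```python
from collections import defaultdict

# Rule-of-thumb threshold (the "four-fifths" heuristic); an assumption for this sketch.
FOUR_FIFTHS = 0.8

def selection_rates(decisions):
    """Return the fraction of applicants selected per group.

    `decisions` is an iterable of (group_label, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def adverse_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest

# Hypothetical screening log: 10 applicants per group.
decisions = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
ratio = adverse_impact_ratio(rates)
needs_review = ratio < FOUR_FIFTHS  # flag for a human to investigate
```

A low ratio doesn’t prove the system is biased, and a high one doesn’t prove it’s fair. It only tells you where someone with the right expertise needs to look.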

Or consider data leakage risks. AI systems can inadvertently memorize and leak personal information from their training data, but detecting this requires privacy testing that most organizations never think to perform. By the time you discover your customer service bot is occasionally revealing other users’ details, you’re facing GDPR violations and broken customer trust.
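Even a crude automated check would surface the most blatant version of this. The following is a minimal sketch, assuming you can capture bot responses and compare them against a list of known customer emails. The function name, the sample data, and the choice to scan only for email addresses are all assumptions made for illustration; real privacy testing covers far more than one identifier type.

```python
import re

# Simple email pattern; real privacy testing would cover many more identifier types.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def find_leaked_emails(response, current_user_email, known_customer_emails):
    """Return known customer emails in `response` that are not the current user's."""
    found = {match.lower() for match in EMAIL_PATTERN.findall(response)}
    others = {e.lower() for e in known_customer_emails} - {current_user_email.lower()}
    return sorted(found & others)

# Hypothetical customer list and bot responses.
known = {"alice@example.com", "bob@example.com"}
clean = find_leaked_emails(
    "Your refund was issued to alice@example.com.", "alice@example.com", known
)
leaky = find_leaked_emails(
    "Bob (bob@example.com) had the same issue.", "alice@example.com", known
)
```

Here `clean` comes back empty, while `leaky` contains another customer’s address and should block the response or raise an alert.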

Imagine a bank that “validates” its loan applications analysis by testing on the same templates used for training. They celebrate when it passes these tests, not understanding that this proves nothing about real-world performance. Or consider a logistics company that builds an AI route optimization system. It works perfectly in testing, reducing fuel costs by 15%. But after deployment, it makes decisions that look efficient on paper while ignoring practical realities. It routes through construction zones, sends drivers to nonexistent addresses, and optimizes for distance while ignoring peak traffic patterns.
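The bank’s mistake is easy to reproduce in miniature. The sketch below uses a deliberately silly toy “model” that memorizes its training inputs, with hypothetical loan features and labels, to show how testing on training data can report a perfect score that says nothing about unseen cases. It is an illustration of the failure mode, not a description of how any real lending system works.

```python
from collections import Counter

def train_memorizer(examples):
    """Toy 'model' that memorizes seen inputs and guesses the majority label otherwise."""
    table = dict(examples)
    majority = Counter(label for _, label in examples).most_common(1)[0][0]
    return lambda features: table.get(features, majority)

def accuracy(model, examples):
    """Fraction of examples the model labels correctly."""
    return sum(model(x) == y for x, y in examples) / len(examples)

def overlap(train, evaluation):
    """Inputs that appear in both sets; anything here inflates the evaluation score."""
    return {x for x, _ in train} & {x for x, _ in evaluation}

# Hypothetical (income_band, debt_band) -> decision templates.
train = [
    (("high", "low"), "approve"),
    (("low", "high"), "deny"),
    (("mid", "low"), "approve"),
    (("mid", "high"), "deny"),
    (("high", "mid"), "approve"),
]
held_out = [
    (("low", "mid"), "deny"),
    (("low", "low"), "deny"),
    (("high", "high"), "deny"),
    (("mid", "mid"), "approve"),
]

model = train_memorizer(train)
train_score = accuracy(model, train)        # looks perfect
held_out_score = accuracy(model, held_out)  # much worse on unseen inputs
shared_with_train = overlap(train, train)   # every input is shared: the "test" proves nothing
shared_with_held_out = overlap(train, held_out)
```

The memorizer scores perfectly on the templates it was trained on and badly on the held-out ones. Checking that the evaluation set shares no inputs with the training set is the cheapest guard against celebrating the wrong number.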

Many ownership challenges plague all custom software development: technical debt, security risks, staff turnover, and integration brittleness. But AI makes evaluating these risks much harder while making development feel deceptively simple. Traditional software tends to fail obviously. AI software can fail silently and catastrophically.

Why Even Unlimited Resources Fail

Want proof that ownership is the real challenge? Look at government websites. These organizations have essentially unlimited budgets, can hire the best contractors, and have national security imperatives. They still can’t keep basic digital infrastructure running.

The Social Security Administration’s technical support runs Monday through Friday, 7:30 AM to 4:00 PM Eastern. For a website. In 2025. Login.gov schedules multi-hour maintenance windows for essential services. Georgetown Law Library tracked government URLs from 2007 and watched half of them die by 2013. Healthcare.gov cost $2.1 billion and barely worked at launch.

These aren’t technical failures. They’re ownership failures. Many government projects falter because they’re handed off to contractors, leaving no one truly accountable, yet the agencies remain liable for what gets deployed. The same organizations that can build nuclear weapons and land rovers on Mars can’t keep websites running reliably, precisely because ownership responsibilities can’t be outsourced even when development is.

“But wait,” you might think, “commercial software companies fail too. What about when vendors go bankrupt and leave customers stranded?”

This objection actually proves the point. When Theranos collapsed, their enterprise customers lost the service but weren’t held liable for fraudulent blood tests. When a SaaS company fails, customers face transition costs and data loss, but they don’t inherit responsibility for what the software did during operation.

Compare that to custom system failure. When your AI medical analysis tool makes a misdiagnosis, you don’t just lose the service. You’re liable for the harm it caused. The failure modes are fundamentally different.

If even professional software companies with dedicated teams and specialized expertise sometimes fail catastrophically, what makes a non-software organization think they can manage those same risks more effectively? If unlimited resources can’t solve the ownership problem, what makes us think AI-generated code will?

Traditional ownership costs haven’t disappeared. They’ve become economically untenable for most organizations. Technical debt still compounds. People still leave, taking institutional knowledge with them. Security vulnerabilities still emerge. Integration points still break when external services change their APIs.

AI makes this trap seductive because initial development feels almost free. But you haven’t eliminated ownership costs. You’ve deferred them while adding unpredictable behavior to manage.

Consider a typical scenario: a marketing agency builds a custom client reporting system using AI to generate insights from campaign data. It works flawlessly for months until an API change breaks everything. With the original developer gone, the agency spends weeks and thousands of dollars getting a contractor to understand the AI-generated code well enough to fix it.

These businesses thought they were buying software. They were actually signing up to become software companies.

The New Decision Framework

This transformation demands a fundamental shift in how we think about build versus buy decisions. The core question is no longer about execution capability; it’s about orchestration capacity. Can you design, evaluate, and govern these systems responsibly over the long term?

You should build custom software when the capability creates genuine competitive differentiation, when you have the institutional expertise to properly evaluate and maintain the system, when long-term ownership costs are justified by strategic value, and when existing solutions genuinely don’t address your specific needs.

You should buy commercial software when the functionality is context work that customers don’t notice, when you lack domain expertise to properly validate the system’s outputs, when ownership responsibilities exceed what you can realistically handle, or when proven solutions already exist with institutional backing.

Commercial software providers aren’t just offering risk transfer. They’re developing structural advantages that individual companies can’t match. Salesforce can justify employing full-time specialists in GDPR, SOX, HIPAA, and emerging AI regulations because those costs spread across 150,000+ customers. A 50-person consulting firm faces the same regulatory requirements but can’t justify even a part-time compliance role.

This reflects Conway’s Law in reverse: instead of organizations shipping their org chart, the most successful software companies are designing their org charts around the complexities of responsible software ownership.

Mastering the Paradox

The AI revolution isn’t killing software development companies. It’s fundamentally changing what ownership means and repricing the entire market. Building has become easier, but being responsible for what you build has become exponentially harder.

This follows the same pattern I’ve tracked across domains: AI creates automation asymmetry, where execution capabilities become liquid while orchestration and evaluation remain stubbornly complex. Whether in compliance audits, skill markets, or software ownership, the organizations that thrive are those that recognize this shift and invest in orchestration capacity rather than just execution capability.

Advanced AI development tools will eventually solve some of these challenges with better validation frameworks and automated maintenance capabilities. We’ll likely also see agentic AI automating much of our monotonous security, support, and maintenance work. These systems could help organizations build the connective tissue they lack: automated monitoring, intelligent debugging, self-updating documentation, and predictive maintenance. But we’re not there yet, and even future tools will require expertise to use effectively.

This doesn’t mean you should never build custom software. It means you need to think differently about what you’re signing up for. Every line of AI-generated code comes with a lifetime warranty that you have to honor.

The question isn’t whether AI can help you build something faster and cheaper. It’s whether you can afford to own it responsibly in a world where software liability is real, evaluation is harder, and the consequences of getting it wrong are higher than ever.

Understanding this paradox is crucial for anyone making build-versus-buy decisions in the AI era. The tools are more powerful than ever, but mastering this new reality means embracing orchestration over execution. Those who recognize this shift and build the institutional capacity to govern AI systems responsibly will define the next wave of competitive advantage.

You’re not just building software. You’re signing up for a lifetime of accountability.