Compliance is a vital sign of organizational health. When it trends the wrong way, it signals deeper problems: processes that can’t be reproduced, controls that exist only on paper, drift accumulating quietly until trust evaporates all at once.

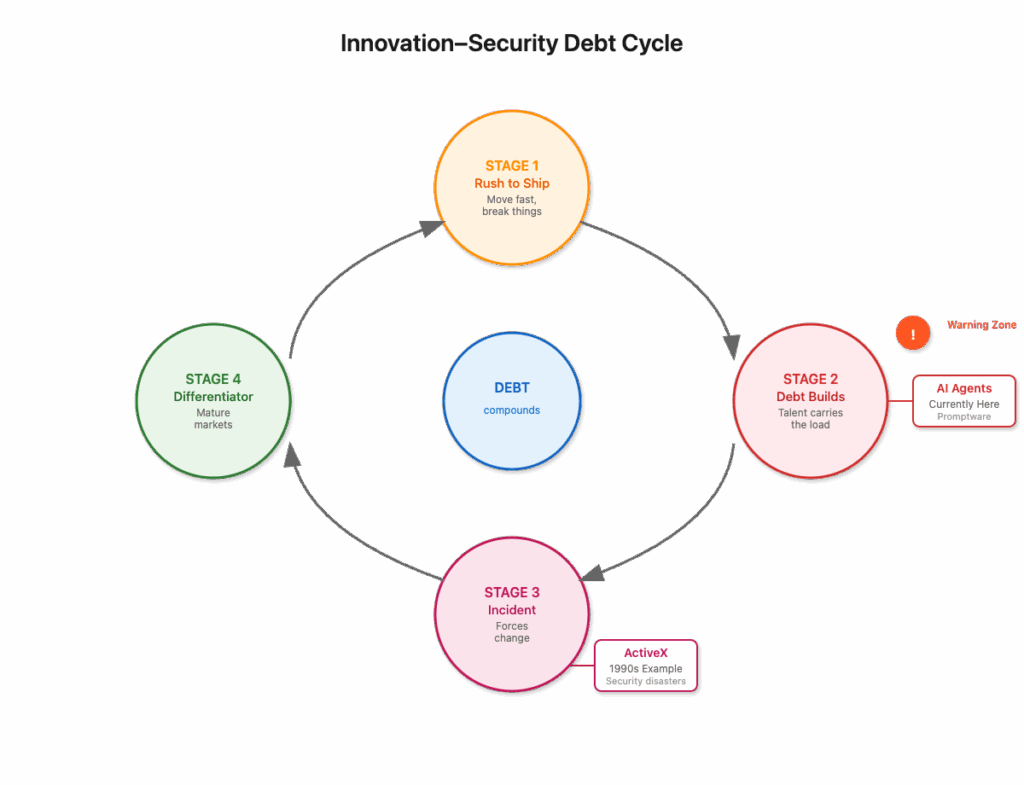

The pattern is predictable. Gradual decay, ignored signals, sudden collapse. Different industries, different frameworks, same structural outcome. (I wrote about this pattern here.)

But something changed. AI is rewriting how software gets built, and compliance hasn’t kept up.

Satya Nadella recently said that as much as 30% of the code in Microsoft’s repositories is now written by AI. Sundar Pichai put Google’s share of new code in the same range. These aren’t marketing exaggerations; they mark a structural change in how software is produced.

Developers no longer spend their days typing every line. They spend them steering, reviewing, and debugging. AI fills in the patterns, and the humans decide what matters. The baseline of productivity has shifted.

Compliance has not. Its rhythms remain tied to quarterly reviews, annual audits, static documents, and ritualized fire drills. Software races forward at machine speed while compliance plods at audit speed. That mismatch isn’t just inefficient. It guarantees drift, brittleness, and the illusion that collapse comes without warning.

If compliance is the vital sign, how do you measure it at the speed of code?

What follows is not a description of today’s compliance tools. It’s a vision for where compliance infrastructure needs to go. The technology exists. The patterns are proven in adjacent domains. What’s missing is integration. This is the system compliance needs to become.

The Velocity Mismatch

The old world of software was already hard on compliance. Humans writing code line by line could outpace annual audits easily enough. The new world makes the mismatch terminal.

If roughly a third of the code at the largest software companies is now AI-written, then code volume, change velocity, and dependency churn have all exploded. Modern development operates in hours and minutes, not quarters and years.

Compliance, by contrast, still moves at the speed of filing cabinets. Controls are cross-referenced manually. Policies live in static documents. Audits happen long after the fact, by which point the patient has either recovered or died. By the time anyone checks, the system has already changed again.

Drift follows. Exceptions pile up quietly. Compensating controls are scribbled into risk registers. Documentation diverges from practice. On paper, everything looks fine. In reality, the brakes don’t match the car.

It’s like running a Formula 1 car with horse-cart brakes. You might get a few laps in. The car will move, and at first nothing looks wrong. But eventually the brakes fail, and when they do the crash looks sudden. The truth is that failure was inevitable from the moment someone strapped cart parts onto a race car.

Compliance today is a system designed for the pace of yesterday, now yoked to the speed of code. Drift isn’t a bug. It’s baked into the mismatch.

The Integration Gap

Compliance breaks at the integration point. When policies live in Confluence and code lives in version control, drift isn’t a defect. It’s physics. Disconnected systems diverge.

The gap between documentation and reality is where compliance becomes theater. PDFs can claim controls exist while repos tell a different story.

Annual audits sample: pull some code, check some logs, verify some procedures. Sampling tells you only what was true at the moment of sampling, not whether controls will still be in place tomorrow or were in place yesterday, before the auditors arrived.

Eliminate the gap entirely.

Policies as Code

Version control becomes the shared foundation for both code and compliance.

Policies, procedures, runbooks, and playbooks become versioned artifacts in the same system where code lives. Not PDFs stored in SharePoint. Not wiki pages anyone can edit without review. Markdown files in repositories, reviewed through pull requests, with approval workflows and change history. Governance without version control is theater.

When a policy changes, you see the diff. When someone proposes an exception (a documented deviation from policy), it’s a commit with a reviewer. When an auditor asks for the access control policy that was in effect six months ago, you check it out from the repo. The audit trail is the git history. Reproducibility by construction.

Governance artifacts get the same discipline as code. Policies go through PR review. Changes require approvals from designated owners. Every modification is logged, attributed, and traceable. You can’t silently edit the past.

Once policies live in version control, compliance checks run against them automatically. Code and configuration changes get checked against the current policy state as they happen. Not quarterly, not at audit time, but at pull request time.

When policy changes, you immediately see what’s now out of compliance. New PCI requirement lands? The system diffs the old policy against the new one, scans your infrastructure, and surfaces what needs updating. Gap analysis becomes continuous, not an annual fire drill that takes two months and produces a 60-page spreadsheet no one reads.
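The diff step can be sketched with Python’s standard `difflib`. The sketch assumes each framework version has already been parsed into a mapping from requirement ID to requirement text. The IDs and wording below are hypothetical placeholders, not actual PCI-DSS text. The parsing step and the infrastructure scan are assumed rather than shown.

```python
import difflib


def diff_requirements(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Classify requirement IDs as added, removed, or changed between two versions."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(rid for rid in old.keys() & new.keys() if old[rid] != new[rid])
    return {"added": added, "removed": removed, "changed": changed}


def show_change(rid: str, old: dict[str, str], new: dict[str, str]) -> str:
    """Render a unified diff of one requirement's text for human review."""
    lines = difflib.unified_diff(
        old[rid].splitlines(),
        new[rid].splitlines(),
        fromfile=f"old/{rid}",
        tofile=f"new/{rid}",
        lineterm="",
    )
    return "\n".join(lines)


# Hypothetical requirement text, for illustration only.
old_version = {
    "REQ-3.1": "Encrypt stored cardholder data.",
    "REQ-8.2": "Require MFA for administrative access.",
}
new_version = {
    "REQ-3.1": "Encrypt stored cardholder data.",
    "REQ-8.2": "Require MFA for all access to the cardholder data environment.",
    "REQ-12.9": "Review third-party service providers annually.",
}

report = diff_requirements(old_version, new_version)
print(report)
for rid in report["changed"]:
    print(show_change(rid, old_version, new_version))
```

Added and changed IDs become the input to the gap analysis. Removed IDs are worth surfacing too, since controls built only for them may be retired.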

Risk acceptance becomes explicit and tracked. Not every violation is blocking, but every violation is visible. “We’re accepting this S3 bucket configuration until Q3 migration” becomes a tracked decision in the repo with an owner, an expiration date, and compensating controls. Risk weighting has teeth because the risk decisions themselves are versioned and auditable.
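A tracked risk acceptance can be as simple as a record with a fixed schema that a check validates on every run. The sketch below uses a hypothetical schema (`finding`, `owner`, `expires`, `compensating_controls`). In a repository the record would live as a reviewed file. Once it expires or is incomplete, it stops suppressing the finding.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RiskAcceptance:
    """A time-bound decision to tolerate a known violation (hypothetical schema)."""
    finding: str
    owner: str
    expires: date
    compensating_controls: tuple[str, ...] = ()

    def problems(self, today: date) -> list[str]:
        """Reasons this acceptance should no longer suppress its finding; empty means valid."""
        issues = []
        if not self.owner:
            issues.append("no owner")
        if not self.compensating_controls:
            issues.append("no compensating controls")
        if today > self.expires:
            issues.append(f"expired on {self.expires.isoformat()}")
        return issues


# "We're accepting this S3 bucket configuration until Q3 migration", as a record.
acceptance = RiskAcceptance(
    finding="s3-bucket-public-read: analytics-exports",
    owner="data-platform-team",
    expires=date(2025, 9, 30),
    compensating_controls=("bucket access logging", "weekly object inventory review"),
)

print(acceptance.problems(date(2025, 7, 1)))   # valid during Q3
print(acceptance.problems(date(2025, 10, 1)))  # expired, so the finding surfaces again
```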

Monitoring Both Sides of the Gap

Governance requirements evolve. Frameworks update. If you’re not watching, surprises arrive weeks before an audit.

Organizations treat this as inevitable, scrambling when the AICPA revises the SOC 2 Trust Services Criteria or PCI-DSS publishes a new version. The fire drill becomes routine.

But these changes are public. Machines can monitor for updates, parse the diffs, and surface what shifted. Auditors bring surprises. Machines should not.

Combine external monitoring with internal monitoring and you close the loop. When a new requirement lands, you immediately see its impact on your actual code and configuration.

SOC 2 adds a requirement for encryption key rotation every 90 days? The system scans your infrastructure, identifies 12 services that rotate keys annually, and surfaces the gap months ahead. You have time to plan, size the effort, build it into the roadmap.
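Once an inventory exists, the scan itself compares the required interval with each service’s observed interval. This is a minimal sketch. It assumes the rotation inventory has already been collected from the key management system. The service names and numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass
class KeyRotation:
    service: str
    rotation_days: int  # observed interval between key rotations


def rotation_gaps(inventory: list[KeyRotation], max_days: int) -> list[str]:
    """Return the services whose rotation interval exceeds the required maximum."""
    return sorted(k.service for k in inventory if k.rotation_days > max_days)


inventory = [
    KeyRotation("billing-api", 365),
    KeyRotation("auth-service", 30),
    KeyRotation("reporting-etl", 365),
    KeyRotation("search-index", 90),
]

gaps = rotation_gaps(inventory, max_days=90)
print(f"{len(gaps)} of {len(inventory)} services rotate keys less often than every 90 days: {gaps}")
```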

This transforms compliance from reactive to predictive. You see requirements as they emerge and measure their impact before they become mandatory. The planning horizon extends from weeks to quarters.

From Vibe Coding to Vibe Compliance

Developers have already adapted to AI-augmented work. They call it “vibe coding.” The AI fills in the routine structures and syntax while humans focus on steering, debugging edge cases, and deciding what matters. The job shifted from writing every line to shaping direction. The work moved from typing to choosing.

Compliance will follow the same curve. The rote work gets automated: mapping requirements across frameworks, validating checklists, collecting evidence. AI reads the policy docs, scans the codebase, flags the gaps, and suggests remediations. What remains for humans is judgment: Is this evidence meaningful? Is this control reproducible? Is this risk acceptable given these compensating controls?

This doesn’t eliminate compliance professionals any more than AI eliminated engineers. It makes them more valuable. Freed from clerical box-checking, they become what they should have been all along: stewards of resilience rather than producers of audit artifacts.

The output changes too. The goal is no longer just producing an audit report to wave at procurement. The goal is producing telemetry showing whether the organization is actually healthy, whether controls are reproducible, whether drift is accumulating.

Continuous Verification

What does compliance infrastructure look like when it matches the speed of code?

A bot comments on pull requests. A developer changes an AWS IAM policy. Before the PR merges, an automated check runs: does this comply with the principle of least privilege defined in access-control.md? Does it match the approved exception for the analytics service? If not, the PR is flagged. The feedback is immediate, contextual, and actionable.
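A least-privilege check of this kind could start as a few rules over the AWS IAM policy JSON in the PR. The sketch assumes the rules in access-control.md reduce to “no wildcard actions and no `Resource: "*"` on Allow statements.” Approved exceptions are modeled as a hypothetical per-service set of permitted wildcard actions. The sketch ignores `NotAction`, conditions, and everything else a real policy engine would handle.

```python
import json


def as_list(value) -> list[str]:
    """IAM accepts either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def least_privilege_findings(policy_json: str, service: str,
                             exceptions: dict[str, set[str]]) -> list[str]:
    """Flag wildcard actions and Resource "*" on Allow statements (hypothetical rule set)."""
    statements = json.loads(policy_json)["Statement"]
    if isinstance(statements, dict):  # a single statement may appear without a list
        statements = [statements]
    approved = exceptions.get(service, set())
    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in as_list(stmt.get("Action", [])):
            is_wildcard = action == "*" or action.endswith(":*")
            if is_wildcard and action not in approved:
                findings.append(f"Statement {i}: wildcard action '{action}' violates access-control.md")
        if "*" in as_list(stmt.get("Resource", [])):
            findings.append(f"Statement {i}: Resource '*' violates access-control.md")
    return findings


proposed = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:*"],
         "Resource": "arn:aws:s3:::analytics-exports/*"},
        {"Effect": "Allow", "Action": "dynamodb:*", "Resource": "*"},
    ],
})
approved_exceptions = {"analytics": {"s3:*"}}  # hypothetical approved exception

for finding in least_privilege_findings(proposed, "analytics", approved_exceptions):
    print(finding)  # each line would become a PR review comment
```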

Deployment gates check compliance before code ships. A service tries to deploy without the required logging configuration. The pipeline fails with a clear message: “This deployment violates audit-logging-policy.md section 3.1. Either add structured logging or file an exception in exceptions/logging-exception-2025-q4.md.”
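The gate in that example can be sketched as a function that a pipeline step calls before deploying. The config shape (`logging.enabled`, `logging.format`) is a hypothetical assumption. The check also assumes exception files reach the main branch only after PR approval, so a file’s presence stands in for an approved exception.

```python
from pathlib import Path
from typing import Optional

POLICY_REF = "audit-logging-policy.md section 3.1"
EXCEPTION_PATH = "exceptions/logging-exception-2025-q4.md"


def check_logging(deploy_config: dict, repo_root: Path) -> Optional[str]:
    """Return a failure message, or None if the deployment may proceed.

    Assumes (hypothetically) that structured logging means logging.enabled
    is true and logging.format is "json", and that exception files only land
    on the main branch after an approved pull request.
    """
    logging_cfg = deploy_config.get("logging", {})
    if logging_cfg.get("enabled") is True and logging_cfg.get("format") == "json":
        return None
    if (repo_root / EXCEPTION_PATH).is_file():
        return None  # an approved exception is on file; its review history is in git
    return (f"This deployment violates {POLICY_REF}. Either add structured logging "
            f"or file an exception in {EXCEPTION_PATH}.")


config = {"service": "payments", "logging": {"enabled": True, "format": "text"}}
message = check_logging(config, Path("."))
exit_code = 1 if message else 0  # a CI step would exit with this code to block the deploy
print(message or "audit logging policy satisfied")
```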

Dashboards update in real time, not once per quarter. Compliance posture is visible continuously. When drift occurs (when someone disables MFA on a privileged account, or when a certificate approaches expiration without renewal) it shows up immediately, not six months later during an audit.
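Continuous drift detection comes down to re-evaluating the same rules against a fresh snapshot of the environment, on a schedule or on every change event. This is a minimal sketch. It assumes the account and certificate inventories are collected elsewhere. The 30-day warning window is an illustrative choice.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Account:
    name: str
    privileged: bool
    mfa_enabled: bool


@dataclass
class Certificate:
    domain: str
    not_after: date          # expiry date
    renewal_scheduled: bool


def drift_findings(accounts: list[Account], certs: list[Certificate],
                   today: date, warn_days: int = 30) -> list[str]:
    """Re-check the current snapshot against policy and report anything that drifted."""
    findings = []
    for acct in accounts:
        if acct.privileged and not acct.mfa_enabled:
            findings.append(f"MFA disabled on privileged account {acct.name}")
    horizon = today + timedelta(days=warn_days)
    for cert in certs:
        if cert.not_after <= horizon and not cert.renewal_scheduled:
            days_left = (cert.not_after - today).days
            findings.append(f"Certificate for {cert.domain} expires in {days_left} days with no renewal")
    return findings


accounts = [
    Account("ops-admin", privileged=True, mfa_enabled=False),
    Account("ci-bot", privileged=False, mfa_enabled=False),
    Account("db-admin", privileged=True, mfa_enabled=True),
]
certs = [
    Certificate("api.example.com", date(2025, 11, 10), renewal_scheduled=False),
    Certificate("www.example.com", date(2026, 3, 1), renewal_scheduled=False),
    Certificate("auth.example.com", date(2025, 11, 5), renewal_scheduled=True),
]

for finding in drift_findings(accounts, certs, today=date(2025, 10, 27)):
    print(finding)
```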

Weighted risk with explicit compensating controls. Not binary red/green status, but a spectrum: fully compliant, compliant with approved exceptions, non-compliant with compensating controls and documented risk acceptance, non-compliant without mitigation. Boards see the shades of fragility. Practitioners see the specifics. Everyone works from the same signal, rendered at the right level of abstraction.
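The four states map naturally onto an ordered enum. A weighted score serves the board view, and a list of unmitigated controls serves practitioners. The weights below are hypothetical. Any real weighting would be a policy decision, versioned alongside the policies themselves.

```python
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Posture(IntEnum):
    """The four states, ordered from healthiest to most fragile."""
    COMPLIANT = 0
    APPROVED_EXCEPTION = 1  # compliant with an approved exception
    RISK_ACCEPTED = 2       # non-compliant, compensating controls + documented acceptance
    UNMITIGATED = 3         # non-compliant without mitigation


# Hypothetical weights; real ones would be a versioned policy decision.
WEIGHTS = {Posture.COMPLIANT: 0, Posture.APPROVED_EXCEPTION: 1,
           Posture.RISK_ACCEPTED: 3, Posture.UNMITIGATED: 10}


@dataclass
class ControlResult:
    control: str
    violated: bool
    exception_approved: bool = False
    risk_accepted: bool = False
    compensating_controls: int = 0


def classify(r: ControlResult) -> Posture:
    """Place one control result on the spectrum."""
    if not r.violated:
        return Posture.COMPLIANT
    if r.exception_approved:
        return Posture.APPROVED_EXCEPTION
    if r.risk_accepted and r.compensating_controls > 0:
        return Posture.RISK_ACCEPTED
    return Posture.UNMITIGATED


results = [
    ControlResult("mfa-privileged", violated=False),
    ControlResult("audit-logging", violated=True, exception_approved=True),
    ControlResult("s3-public-read", violated=True, risk_accepted=True, compensating_controls=2),
    ControlResult("key-rotation", violated=True),
]
postures = {r.control: classify(r) for r in results}

# Board view: counts per state plus one weighted fragility score.
counts = Counter(postures.values())
print({p.name: counts[p] for p in Posture}, "score:", sum(WEIGHTS[p] for p in postures.values()))

# Practitioner view: the specific controls that need action now.
print([c for c, p in postures.items() if p is Posture.UNMITIGATED])
```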

The Maturity Path

Organizations don’t arrive at this state overnight. Most are still at Stage 1 or earlier, treating governance as static documents disconnected from their systems. The path forward has clear stages:

Stage 1: Baseline. Get policies, procedures, and runbooks into version-controlled repositories. Establish them as ground truth. Stop treating governance as static PDFs. This is where most organizations need to start.

Stage 2: Drift Detection. Automated checks flag when code and configuration diverge from policy. The checks run on demand or on a schedule, and dashboards show gaps as soon as each run completes. Compliance teams can see drift as it happens instead of discovering it during an audit. The feedback loop shrinks from months to days. Some organizations have built parts of this, but comprehensive drift detection remains rare.

Stage 3: Integration. Compliance checks move into the developer workflow. Bots comment on pull requests. Deployment pipelines run policy checks before shipping. The feedback loop shrinks from days to minutes. Developers see policy violations in context, in their tools, while changes are still cheap to fix. This is where the technology exists but adoption is still emerging.

Stage 4: Regulatory Watch. The system monitors upstream changes: new SOC 2 criteria, updated PCI-DSS requirements, revised GDPR guidance. When frameworks change, the system diffs the old version against the new, identifies affected controls, maps them to your current policies and infrastructure, and calculates impact. You see the size of the work, the affected systems, and the timeline before it becomes mandatory. Organizations stop firefighting and start planning quarters ahead. This capability is largely aspirational today.
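Most of the value of this stage sits in the mapping step. Once the changed control IDs are known (for example, from a diff of the two framework versions), a maintained crosswalk turns them into affected policies and services. The sketch below assumes a hypothetical crosswalk kept in the repo. The control IDs are placeholders, not real SOC 2 criteria. A changed control with no mapping is reported separately, since it has no internal owner yet.

```python
from collections import defaultdict

# Hypothetical crosswalk kept in the repo next to the policies:
# framework control ID -> (internal policy file, services that implement it).
CROSSWALK = {
    "C-101": ("access-control.md", ["auth-service", "admin-portal"]),
    "C-204": ("encryption-policy.md", ["billing-api", "reporting-etl"]),
    "C-310": ("audit-logging-policy.md", ["billing-api", "search-index"]),
}


def impact(changed_controls: list[str], crosswalk: dict) -> dict:
    """Map changed framework controls to the policies and services they touch."""
    policies = set()
    services = defaultdict(list)
    unmapped = []
    for cid in changed_controls:
        if cid not in crosswalk:
            unmapped.append(cid)  # no internal policy covers it yet: a gap in itself
            continue
        policy, svcs = crosswalk[cid]
        policies.add(policy)
        for svc in svcs:
            services[svc].append(cid)
    return {
        "policies": sorted(policies),
        "services": dict(sorted(services.items())),
        "unmapped": unmapped,
    }


result = impact(["C-204", "C-310", "C-999"], CROSSWALK)
print(f"{len(result['policies'])} policies and {len(result['services'])} services affected")
for svc, controls in result["services"].items():
    print(f"  {svc}: {', '.join(controls)}")
print("unmapped:", result["unmapped"])
```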

Stage 5: Enforcement. Policies tie directly to what can deploy. Non-compliant changes require explicit exception approval. Risk acceptance decisions are versioned, tracked, and time-bound. The system makes the right path the easy path. Doing the wrong thing is still possible (you can always override) but the override itself becomes evidence, logged and auditable. Few organizations operate at this level today.
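One way to make overrides hard to rewrite after the fact is a hash-chained, append-only log. Each record includes the hash of the previous one, so editing any past record breaks the link to the record after it. The field names are hypothetical. A hash chain is tamper-evident, not tamper-proof: anyone who can rewrite the entire log can recompute every hash. In practice, the latest hash would be anchored elsewhere, for example in a reviewed git commit.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: dict) -> str:
    """SHA-256 of the record's canonical JSON (sorted keys), so any edit changes it."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


def record_override(log: list[dict], change: str, policy: str,
                    approver: str, reason: str) -> dict:
    """Append an override record chained to the hash of the previous record."""
    record = {
        "change": change,
        "policy": policy,
        "approver": approver,
        "reason": reason,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    record["hash"] = _digest(record)
    log.append(record)
    return record


def verify(log: list[dict]) -> bool:
    """Recompute the chain; editing or reordering any record breaks it."""
    prev = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body.get("prev_hash") != prev or _digest(body) != record.get("hash"):
            return False
        prev = record["hash"]
    return True


log: list[dict] = []
record_override(log, "deploy payments", "audit-logging-policy.md", "security-lead", "incident hotfix")
record_override(log, "deploy reporting-etl", "encryption-policy.md", "cto", "vendor migration window")
print(verify(log))  # True

tampered = [dict(r) for r in log]
tampered[0]["reason"] = "routine change"  # quietly rewriting the past...
print(verify(tampered))  # ...is detected: False
```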

This isn’t about replacing human judgment with automation. It’s about making judgment cheaper to exercise. At Stage 1, compliance professionals spend most of their time hunting down evidence. At Stage 5, evidence collection is automatic, and professionals spend their time on the judgment calls: should we accept this risk? Is this compensating control sufficient? Is this policy still appropriate given how the system evolved?

The Objections

There are objections. The most common is that AI hallucinates, so how can you trust it with compliance?

Fair question. Naive AI hallucinates. But humans do too. They misread policies, miss violations, get tired, and skip steps. The compliance professional who has spent eight hours mapping requirements across frameworks makes mistakes in hour nine.

Structured AI with proper constraints works differently. Give it explicit sources, defined schemas, and clear validation rules, and it performs rote work more reliably than most humans. Not because it’s smarter, but because it doesn’t get tired, doesn’t take shortcuts, and checks every line the same way every time.

The bot that flags policy violations isn’t doing unconstrained text generation. It’s diffing your code against a policy document that lives in your repo, following explicit rules, and showing its work: “This violates security-policy.md line 47, committed by [author] on 2025-03-15.” That isn’t hallucination. That’s reproducible evidence.
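Showing its work mostly means locating the rule and attributing the line. The sketch below assumes a hypothetical convention: each rule in the policy Markdown is tagged with a `[rule:ID]` marker. Attribution comes from `git blame --porcelain`, and the code parses its `author-mail` and `author-time` fields. The sample blame output is constructed for illustration, so the example runs without a repository.

```python
import subprocess
from datetime import datetime, timezone


def find_rule_line(policy_text: str, rule_id: str) -> int:
    """Return the 1-based line of a rule tagged with a (hypothetical) [rule:ID] marker."""
    marker = f"[rule:{rule_id}]"
    for lineno, line in enumerate(policy_text.splitlines(), start=1):
        if marker in line:
            return lineno
    raise KeyError(f"rule {rule_id} not found in policy")


def blame_porcelain(path: str, lineno: int) -> str:
    """Run `git blame --porcelain` for one line (needs a git checkout; unused below)."""
    return subprocess.run(
        ["git", "blame", "--porcelain", "-L", f"{lineno},{lineno}", "--", path],
        capture_output=True, text=True, check=True,
    ).stdout


def parse_attribution(porcelain: str) -> tuple[str, str]:
    """Extract the author email and UTC commit date from porcelain blame output."""
    mail, day = "", ""
    for line in porcelain.splitlines():
        if line.startswith("author-mail "):
            mail = line[len("author-mail "):].strip("<>")
        elif line.startswith("author-time "):
            stamp = int(line.split()[1])
            day = datetime.fromtimestamp(stamp, tz=timezone.utc).date().isoformat()
    return mail, day


policy = (
    "# Security policy\n"
    "\n"
    "## Storage\n"
    "- Buckets must not allow public read. [rule:no-public-buckets]\n"
)
# Constructed sample of porcelain output for that line (hypothetical author).
sample_blame = "a" * 40 + (
    " 4 4 1\n"
    "author Jane Doe\n"
    "author-mail <jane.doe@example.com>\n"
    "author-time 1741996800\n"
    "author-tz +0000\n"
    "summary Add storage rules\n"
    "filename security-policy.md\n"
    "\t- Buckets must not allow public read. [rule:no-public-buckets]\n"
)

line = find_rule_line(policy, "no-public-buckets")
mail, day = parse_attribution(sample_blame)
print(f"This violates security-policy.md line {line}, committed by {mail} on {day}.")
```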

And it scales in ways humans never can. The human compliance team can review 50 pull requests a week if they’re fast. The bot reviews 500. When a new framework requirement drops, the human team takes weeks to manually map old requirements against new ones. The bot does it in minutes.

This isn’t about replacing human judgment. It’s about freeing humans from the rote work where structured AI performs better. Humans slip on routine tasks; constrained machines apply the same rules every time. Let machines do what they’re good at so humans can focus on what they’re good at: the judgment calls that actually matter.

The second objection is that tools can’t fix culture. Also true. But tools can make cultural decay visible earlier. They can force uncomfortable truths into the open.

When policies live in repos and compliance checks run on every PR, leadership can’t hide behind dashboards. If the policies say one thing and the code does another, the diff is public. If exceptions are piling up faster than they’re closed, the commit history shows it. If risk acceptance decisions keep getting extended quarter after quarter, the git log is evidence.

The system doesn’t fix culture, but it makes lying harder. Drift becomes visible in real time instead of hiding until audit season. Leaders who want to ignore compliance still can, but they have to do so explicitly, in writing, with attribution. That changes the incentive structure.

Culture won’t be saved by software. But it can’t be saved without seeing what’s real. Telemetry is the prerequisite for accountability.

The Bootstrapping Problem

If organizations are already decaying, if incentives are misaligned and compliance is already theater, how do they adopt this system?

Meet people where they are. Embed compliance in the tools developers already use.

Start with a bot that comments on pull requests. Pick one high-signal policy (the one that came up in the last audit, or the one that keeps getting violated). Write it in Markdown, commit it to a repo, add a simple check that flags violations in PRs. Feedback lands in the PR, where people already work.

This creates immediate value. Faster feedback. Issues caught before they ship. Less time in post-deployment remediation. The bot earns its place as a useful tool rather than bureaucratic overhead.

Once developers see value, expand coverage. Add more policies. Integrate more checks. Build the dashboard that shows posture in real time. Start with the point of maximum pain: the gap between what policies say and what code does.

Make the right thing easier than the wrong thing. That’s how you break equilibrium. Infrastructure change leads culture, not the other way around.

Flipping the Incentive Structure

Continuous compliance telemetry creates opportunities to flip the incentive structure.

The incentive problem is well-known. Corner-cutters get rewarded with velocity and lower costs. The people who invest in resilience pay the price in overhead and friction. By the time the bill comes due, the corner-cutters have moved on.

What if good compliance became economically advantageous in real time, not just insurance against future collapse?

Real-time, auditable telemetry makes compliance visible in ways annual reports never can. A cyber insurer can consume your compliance posture continuously instead of relying on a point-in-time questionnaire. Organizations that maintain strong controls get lower premiums. Rates adjust dynamically based on drift. Offer visibility into the metrics that matter and get buy-down points in return.

Customer due diligence changes shape. Vendor risk assessments that take weeks and rely on stale SOC 2 reports become real-time visibility into current compliance posture. Procurement accelerates. Contract cycles compress. Organizations that can demonstrate continuous control gain a competitive advantage.

Auditors spend less time collecting evidence and more time evaluating controls. When continuous compliance is demonstrable, audit scope shrinks, costs drop, and cycles shorten.

Partner onboarding that used to require months of back-and-forth security reviews happens faster when telemetry is already available. Certifications and integrations move at the speed of verification, not documentation.

The incentive structure inverts. Organizations that build continuous compliance infrastructure get rewarded immediately: lower insurance costs, faster sales cycles, reduced audit expense, easier partnerships. The people who maintain strong controls see economic benefit now, not just avoided pain later.

This is how you fix the incentive problem at scale. Make good compliance economically rational today.

The Choice Ahead

AI has already made coding a collaboration between people and machines. Compliance is next.

The routine work will become automated, fast, and good enough for the basics. That change is inevitable. The real question is what we do with the time it frees up.

Stop there, and compliance becomes theater with better graphics. Dashboards that look impressive but still tell you little about resilience.

Go further, and compliance becomes what it should have been all along: telemetry about reproducibility. A vital sign of whether the organization can sustain discipline when it matters. An early warning system that makes collapse look gradual instead of sudden.

If compliance is the vital sign of organizational decay, then this is the operating system that measures it at the speed of code.

The frameworks aren’t broken. The incentives are. The rhythms are. The integration is.

The technology to build this system exists. Version control is mature. CI/CD pipelines are ubiquitous. AI can parse policies and scan code. What’s missing is stitching the pieces together and treating compliance like production.

Compliance will change. The only question is whether it catches up to code or keeps trailing it until collapse looks sudden.