PKCS#12 is the de facto file format for moving private keys and certificates around. It was defined by RSA and Microsoft in the late 1990s and is used extensively by Windows. It was also recently added to KMIP as a means to export key material.

As an older format, it was designed with support for algorithms like MD2, MD5, SHA1, RC2, RC4, DES and 3DES. It was recently standardized by the IETF as RFC 7292, and the IETF took this opportunity to add support for SHA2 but did not make an accommodation for any mode of AES.

Thankfully, PKCS #12 is based on CMS, which does support AES (see RFC 3565 and RFC 5959). In theory, even though RFC 7292 doesn’t specify a need to support AES, there is enough information to use it in an interoperable way.

Another standard used by PKCS#12 is PKCS #5 (RFC 2898), which specifies the mechanics of password-based encryption. It provides two ways to do this, PBES1 and PBES2; more on this later.

Despite these complexities and constraints, we wanted to see if we could provide a secure and interoperable implementation of PKCS#12 in PKIjs since it is one of the most requested features. This post documents our findings.

Observations

Lots of unused options

PKCS#12 is the Swiss Army knife of certificate and key transport. It can carry keys, certificates, CRLs and other metadata. The specification offers both password and certificate-based schemes for privacy and integrity protection.

This is accomplished by having an outer “integrity envelope” that may contain many “privacy envelopes”.

When using certificates to protect the integrity of its contents, the specification uses the CMS SignedData message to represent this envelope.

When using passwords it uses an HMAC placed into its own data structure.

The use of an outer envelope containing many different inner envelopes enables the implementer to mix and match various types of protection approaches in a single file. It also enables implementers to use different secrets for integrity and privacy protection.
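To make the idea of a separate integrity secret concrete, here is a minimal Python sketch. It is not the PKCS#12 wire format: the function names, the dictionary layout, the choice of PBKDF2-HMAC-SHA256 and the iteration count are all illustrative assumptions, and real files wrap these values in ASN.1 structures. It only shows that the outer HMAC can be keyed from a password that is independent of whatever protects the inner contents.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed value, for illustration only


def derive_key(password: str, salt: bytes, length: int = 32) -> bytes:
    """Stretch a password into a key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS, dklen=length
    )


def seal(contents: bytes, integrity_password: str) -> dict:
    """Attach an HMAC "integrity envelope" to already-protected contents."""
    salt = os.urandom(16)
    mac_key = derive_key(integrity_password, salt)
    tag = hmac.new(mac_key, contents, hashlib.sha256).digest()
    return {"contents": contents, "salt": salt, "mac": tag}


def verify(envelope: dict, integrity_password: str) -> bool:
    """Recompute the HMAC and compare it in constant time."""
    mac_key = derive_key(integrity_password, envelope["salt"])
    expected = hmac.new(mac_key, envelope["contents"], hashlib.sha256).digest()
    return hmac.compare_digest(expected, envelope["mac"])
```

In this sketch the bytes passed to `seal` would already have been encrypted under a different, privacy-only secret; `verify` then fails for a wrong integrity password or for modified contents without ever touching the privacy secret.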

Despite this flexibility, most implementations only support the following combination:

- Optionally using the HMAC mechanism for integrity protection of certificates

- Using password-based privacy protection for keys

- Using the same password for integrity protection and privacy protection

NOTE: OpenSSL was the only implementation we found that supports the ability to use a different password for the “integrity envelope” and “privacy envelope”. This is done using the “twopass” option of the pkcs12 command.

The format’s flexibility is great. We can envision a few different types of scenarios one might be able to create with it, but there appears to be no documented profile making it clear what is necessary to interoperate with other implementations.

It would be ideal if a future incarnation of the specification provided this information for implementers.

Consequences of legacy

As mentioned earlier, there are two approaches for password-based encryption defined in PKCS#5 (RFC 2898).

The first is called PBES1. According to the RFC:

“PBES1 is recommended only for compatibility with existing applications, since it supports only two underlying encryption schemes, each of which has a key size (56 or 64 bits) that may not be large enough for some applications.”

The PKCS#12 RFC reinforces this by saying:

“The procedures and algorithms defined in PKCS #5 v2.1 should be used instead. Specifically, PBES2 should be used as encryption scheme, with PBKDF2 as the key derivation function.”

That said, there seems to be no indication in the format of which scheme is used, which leaves an implementer forced to try both options to “just see which one works”.

Additionally, it seems none of the implementations we have encountered follow this advice; they only support the PBES1 approach.
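The size limitation the RFC mentions follows from how the two schemes derive keys. The sketch below is illustrative only: the function names are ours, and the PBKDF1 routine follows our reading of RFC 2898 section 5.1 with SHA-1. It contrasts PBKDF1, which can never return more bytes than one hash output, with PBKDF2 from Python's standard library, which can produce a key of any requested length.

```python
import hashlib


def pbkdf1_sha1(password: bytes, salt: bytes, iterations: int, dklen: int) -> bytes:
    """PBKDF1 (RFC 2898, section 5.1): hash password||salt, then re-hash."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    if dklen > hashlib.sha1().digest_size:
        raise ValueError("PBKDF1 cannot produce more bytes than the hash output")
    t = hashlib.sha1(password + salt).digest()
    for _ in range(iterations - 1):
        t = hashlib.sha1(t).digest()
    return t[:dklen]


def pbkdf2_sha256(password: bytes, salt: bytes, iterations: int, dklen: int) -> bytes:
    """PBKDF2 with HMAC-SHA256; the output length is chosen by the caller."""
    return hashlib.pbkdf2_hmac("sha256", password, salt, iterations, dklen=dklen)


salt = bytes(8)  # fixed salt so the example is repeatable; use random salts in practice

# PBES1 splits 16 derived bytes into an 8-byte DES key and an 8-byte IV.
derived = pbkdf1_sha1(b"password", salt, 2048, 16)
des_key, des_iv = derived[:8], derived[8:]

# PBES2 can ask PBKDF2 for a full 256-bit AES key.
aes_key = pbkdf2_sha256(b"password", salt, 2048, 32)
```

Asking `pbkdf1_sha1` for 32 bytes raises `ValueError`, which is the structural reason a PBES1-style scheme cannot key AES-256.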

Cryptographic strength

When choosing a cryptographic algorithm, one of the things you need to be mindful of is the minimum effective strength. There are differing opinions on what each algorithm’s minimum effective strength is at different key lengths, but normally the difference is not significant. These opinions also change as computing power increases along with our ability to break different algorithms.

When choosing which algorithms to use together, you want to make sure all the algorithms in the construction offer similar security properties. If you do not, the weakest algorithm becomes the weakest link in the construction. It seems there is no guidance in the standard on this topic; as a result we have found:

- Files that use weak algorithms for the protection of strong keys,

- Files that use a weaker algorithm for integrity than they do for privacy.

This first point is particularly important. The most recently available guidance for minimum effective key length comes from the German Federal Office for Information Security, BSI. These guidelines, when interpreted in the context of PKCS #12, recommend protecting a 2048-bit RSA key using SHA2-256 and a 128-bit symmetric key.

The strongest symmetric algorithm specified in the PKCS #12 standard is 3DES, which only offers an effective strength of 112 bits. This means, if you believe the BSI, it is not possible to create a standards-compliant PKCS#12 that offers the effective security necessary to protect a 2048-bit RSA key.

ANSI, on the other hand, currently recommends a 100-bit symmetric key as an acceptable minimum strength to protect a 2048-bit RSA key (though this recommendation was made a year earlier than the BSI recommendation).

So if you believe ANSI, the strongest suite offered by RFC 7292 is strong enough to “adequately” protect such a key, just not a larger one.

The unfortunate situation we are left in is that it is not possible to create a “standards compliant” PKCS#12 that supports modern cryptographic recommendations.
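The weakest-link reasoning above can be written down as a small check. This is a sketch with hypothetical names. The 3DES figure and the BSI and ANSI thresholds are the ones quoted in this post, the RC2 figure is taken from the 40-bit key size in the algorithm name, and the AES figures are simply the nominal key sizes, which is an assumption on our part.

```python
# Effective strength in bits for each algorithm (see the caveats in the text).
EFFECTIVE_BITS = {
    "RC2-40": 40,
    "3DES": 112,
    "AES-128": 128,  # nominal key size, assumed
    "AES-256": 256,  # nominal key size, assumed
}

# Minimum symmetric strength quoted above for protecting a 2048-bit RSA key.
REQUIRED_FOR_RSA_2048 = {"BSI": 128, "ANSI": 100}


def weakest_link(algorithms):
    """A construction is only as strong as its weakest algorithm."""
    return min(EFFECTIVE_BITS[name] for name in algorithms)


def adequate(algorithms, guideline):
    """True if every algorithm meets the guideline's minimum strength."""
    return weakest_link(algorithms) >= REQUIRED_FOR_RSA_2048[guideline]
```

With these numbers, `adequate(["3DES"], "BSI")` is `False` while `adequate(["3DES"], "ANSI")` is `True`, and a file that mixes 40-bit RC2 with 3DES has a `weakest_link` of 40 bits.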

Implementations

OpenSSL

A while ago OpenSSL was updated to support AES-CBC in PKCS#8 which is the format that PKCS#12 uses to represent keys. In an ideal world, we would be using AES-GCM for our interoperability target but we will take what we can get.

To create such a file you would use a command similar to this:

```
rmh$ openssl genrsa 2048 | openssl pkcs8 -topk8 -v2 aes-256-cbc -out key.pem
Generating RSA private key, 2048 bit long modulus
...............+++
...................+++
e is 65537 (0x10001)
Enter Encryption Password:
Verifying - Enter Encryption Password:
rmh$ cat key.pem
-----BEGIN ENCRYPTED PRIVATE KEY-----
...
...
...
-----END ENCRYPTED PRIVATE KEY-----
```

If you look at the resulting file with an ASN.1 parser you will see the file says the Key Encryption Key (KEK) is aes-256-cbc.
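If you do not have an ASN.1 parser at hand, a rough check is to look for the DER encoding of well-known algorithm identifiers in the decoded file. The Python sketch below is a heuristic rather than a parser: it only searches for byte patterns, so it can miss or misreport algorithms. The helper names are hypothetical, and the OID table lists only the identifiers discussed in this post.

```python
import base64

# Algorithm OIDs relevant to this post (dotted form -> readable name).
KNOWN_OIDS = {
    "1.2.840.113549.1.5.13": "PBES2",
    "1.2.840.113549.1.5.12": "PBKDF2",
    "2.16.840.1.101.3.4.1.42": "aes-256-cbc",
    "1.2.840.113549.1.12.1.3": "pbeWithSHAAnd3-KeyTripleDES-CBC",
    "1.2.840.113549.1.12.1.6": "pbeWithSHAAnd40BitRC2-CBC",
}


def encode_oid(dotted: str) -> bytes:
    """DER-encode an OBJECT IDENTIFIER (tag 0x06, short-form length only)."""
    arcs = [int(a) for a in dotted.split(".")]
    body = bytearray([arcs[0] * 40 + arcs[1]])  # first two arcs share one byte
    for arc in arcs[2:]:
        chunk = [arc & 0x7F]  # last byte of the arc has the high bit clear
        arc >>= 7
        while arc:
            chunk.append((arc & 0x7F) | 0x80)  # earlier bytes set the high bit
            arc >>= 7
        body.extend(reversed(chunk))
    return bytes([0x06, len(body)]) + bytes(body)


def pem_to_der(pem: str) -> bytes:
    """Drop the PEM armor lines and base64-decode the payload."""
    payload = "".join(
        line.strip()
        for line in pem.splitlines()
        if line.strip() and not line.startswith("-----")
    )
    return base64.b64decode(payload)


def algorithms_mentioned(der: bytes) -> list:
    """Report the known OIDs whose DER encoding occurs somewhere in the bytes."""
    return [name for oid, name in KNOWN_OIDS.items() if encode_oid(oid) in der]
```

For the key file created above, we would expect `algorithms_mentioned(pem_to_der(open("key.pem").read()))` to include `PBES2`, `PBKDF2` and `aes-256-cbc`, but a real ASN.1 parser remains the authoritative way to confirm this.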

It seems the latest OpenSSL (1.0.2d) will even let us do this with a PKCS#12. Those commands would look something like this:

```
openssl genrsa 2048 | openssl pkcs8 -topk8 -v2 aes-256-cbc -out key.pem
openssl req -new -key key.pem -out test.csr
openssl x509 -req -days 365 -in test.csr -signkey key.pem -out test.cer
openssl pkcs12 -export -inkey key.pem -in test.cer -out test.p12 -certpbe AES-256-CBC -keypbe AES-256-CBC
```

NOTE: If you do not explicitly specify the certpbe and keypbe algorithms, this version defaults to using pbeWithSHAAnd40BitRC2-CBC to protect the certificate and pbeWithSHAAnd3-KeyTripleDES-CBC to protect the key.

RC2 was designed in 1987 and has been considered weak for a very long time. 3DES is still considered by many to offer 112-bits of security though in 2015 it is clearly not an algorithm that should still be in use.

Since it supports it, OpenSSL should really be updated to use aes-256-cbc by default, and it would be nice if support for AES-GCM was also added.

NOTE: We also noticed if you specify “-certpbe NONE and -keypbe NONE” (which we would not recommend) that OpenSSL will create a PKCS#12 that uses password-based integrity protection and no privacy protection.

Another unfortunate realization is that OpenSSL uses an iteration count of 2048 when deriving a key from a password, which by today’s standards is far too small.

We also noticed that the OpenSSL output of the pkcs12 command does not indicate which algorithms were used to protect the key or the certificate. This may be one reason why the defaults were never changed: users simply did not notice.
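The effect of the iteration count is simple arithmetic: each password guess costs the attacker roughly one pass through the key derivation, so the total work grows linearly with the count. The sketch below uses made-up numbers. The attacker rate and the larger iteration count are hypothetical assumptions chosen only to show the scaling, not measurements or recommendations.

```python
def seconds_to_exhaust(candidates: float, iterations: int, prf_calls_per_second: float) -> float:
    """Time to try every candidate when each guess costs `iterations` PRF calls."""
    return candidates * iterations / prf_calls_per_second


ATTACKER_RATE = 1e10        # hypothetical PRF evaluations per second
EIGHT_LOWERCASE = 26 ** 8   # every 8-letter lowercase password

for count in (2048, 1_000_000):  # 2048 is from the text; 1,000,000 is illustrative
    days = seconds_to_exhaust(EIGHT_LOWERCASE, count, ATTACKER_RATE) / 86_400
    print(f"{count:>9} iterations: {days:,.1f} days to exhaust")
```

Whatever rate is assumed, raising the count by some factor raises the attacker's cost by the same factor, which is why a fixed count of 2048 ages poorly as hardware gets faster.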

```
rmh$ openssl pkcs12 -in windows10_test.pfx
Enter Import Password:
MAC verified OK
Bag Attributes
    localKeyID: 01 00 00 00
    friendlyName: {4BC68C1A-28E3-41DA-BFDF-07EB52C5D72E}
    Microsoft CSP Name: Microsoft Base Cryptographic Provider v1.0
Key Attributes
    X509v3 Key Usage: 10
Enter PEM pass phrase:
Bag Attributes
    localKeyID: 01 00 00 00
subject=/CN=Test/O=2/OU=1/[email protected]/C=<\xD0\xBE
issuer=/CN=Test/O=2/OU=1/[email protected]/C=<\xD0\xBE
-----BEGIN CERTIFICATE-----
...
...
-----END CERTIFICATE-----
```

Windows

Unfortunately, it seems that all versions of Windows (even Windows 10) still produce PKCS #12 files using pbeWithSHAAnd3-KeyTripleDES-CBC for “privacy” of keys and pbeWithSHAAnd40BitRC2-CBC for privacy of certificates. They then rely on the HMAC scheme for integrity.

Additionally, it seems Windows only supports PBES1 and not PBES2.

Windows also uses an iteration count of 2048 when deriving keys from passwords, which is far too small.

It also seems that, unlike OpenSSL, Windows is not able to work with files produced with more secure encryption schemes and ciphers.

PKIjs

PKIjs has two arguably conflicting goals. The first is to enable modern web applications to interoperate with traditional X.509-based applications. The second is to use modern and secure options when doing this.

WebCrypto has a similar set of guiding principles, which is why it does not support weaker algorithms like RC2, RC4 and 3DES.

Instead of bringing JavaScript-based implementations of these weak algorithms into PKIjs, we have decided to only support the algorithms supported by WebCrypto (aes-256-cbc and aes-256-gcm, with SHA1 or SHA2, using PBES2).

This represents a tradeoff. The keys and certificates exported by PKIjs will be protected with the most interoperable and secure pairing of algorithms available, but the resulting files will still not work in any version of Windows (even the latest Windows 10 1511 build).

The profile of PKCS#12 that PKIjs creates will work with OpenSSL only if you use the -nomacver option:

```
openssl pkcs12 -in pkijs_pkcs12.pfx -nomacver
```

This is because OpenSSL uses the older PBKDF1 for integrity protection and PKIjs uses the newer PBKDF2. As a result of this option, integrity will not be checked on the PKCS#12.

With that caveat, here is an example of how one would generate a PKCS#12 with PKIjs.

Summary

Despite its rocky start, PKCS#12 is still arguably one of the most important cryptographic message formats. An attempt has been made to modernize it somewhat, but it did not go far enough.

It also seems OpenSSL has made an attempt to work around this gap by adding support for AES-CBC to its implementation.

Windows, on the other hand, still appears to support only the older encryption construct with the weaker ciphers.

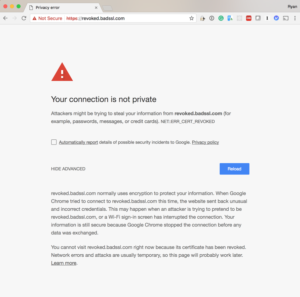

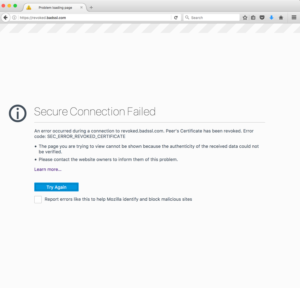

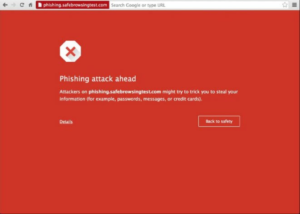

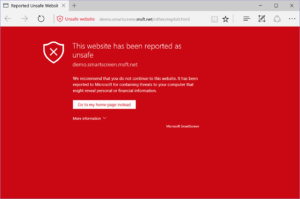

That said, even when strong, modern cryptography is in use, we must remember that any password-based encryption scheme will only be as secure as the password that is used with it. As a proof point, consider these tools for PKCS#12 and PKCS#8 that make password cracking trivial for these formats.

We believe the storage and transport of encrypted private keys is an important enough topic that it deserves a modern and secure standard. With that in mind, we recommend the following changes be made to RFC 7292:

- Deprecate the use of all weaker algorithms,

- Make it clear both AES-CBC and AES-GCM should be supported,

- Make it clear what the minimal profile of this fairly complex standard is,

- Require the support of PBES2,

- Be explicit and provide modern key stretching guidance for use with PBKDF2,

- Clarify how one uses PBMAC1 for integrity protection,

- Require that the certificates and the keys are both protected with the same or equally secure mechanisms.

As for users, there are a few things you can do to protect yourself from the associated issues discussed here, some of which include:

- Do not use passwords to protect your private keys. Instead, use generated symmetric keys or generated passwords of an appropriate length (e.g. “openssl rand -base64 32”),

- When using OpenSSL always specify which algorithms are being used when creating your PKCS#12 files and double check those are actually the algorithms being used,

- Ensure that the algorithms you are choosing to protect your keys offer a minimum effective key length equal to or greater than the keys you will protect,

- Securely delete any intermediate copies of keys or inputs to the key generation or export process.
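As an illustration of the first recommendation, the following Python sketch generates a secret comparable to the `openssl rand -base64 32` example and estimates the entropy of a uniformly random string. The function names are hypothetical, and the entropy figure is an upper bound that applies only when every character is chosen uniformly at random, which human-chosen passwords are not.

```python
import base64
import math
import secrets


def generate_secret(num_bytes: int = 32) -> str:
    """Return a base64 string carrying num_bytes of OS-provided randomness."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Upper bound on the entropy of a uniformly random string."""
    return length * math.log2(alphabet_size)
```

A 32-byte generated secret carries 256 bits, whereas `entropy_bits(26, 8)` shows that even a perfectly random 8-letter lowercase password stays below 38 bits, far under the 112 bits attributed to 3DES above.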

Ryan & Yury