Part of the “AI Skill Liquidity” series

Early in my career, I was a security engineer, and in that role we approached problems with a distinctive mindset. We would look at a new system and immediately start threat modeling. What could go wrong? Where are the vulnerabilities? How might an attacker exploit this? Then we’d systematically build defenses, design monitoring systems, and create incident response procedures.

Later at Microsoft, I realized that good lawyers operate almost identically. They’re security engineers for text. When reviewing a contract, they’re threat modeling potential disputes. When structuring a transaction, they’re identifying legal vulnerabilities and designing defenses. When arguing a case, they’re building systems to withstand attack from opposing counsel. Of course, not all legal work requires this depth of analysis, but the most valuable work does.

This realization first drew me to Washington State’s legal apprenticeship program. The idea of learning legal “security engineering” through hands-on mentorship rather than accumulating law school debt appealed to me. I never pursued it, but I remained fascinated by sophisticated legal reasoning, regularly listening to Advisory Opinions and other legal podcasts where excellent legal minds dissect complex problems.

Just as I’ve written about skill liquidity transforming compliance and software development, the same forces are reshaping legal practice. AI is injecting liquidity into what was once an extremely illiquid skill market, where expertise was scarce, slowly accumulated, and tightly guarded. But here’s what’s different: current legal AI treats lawyers like document processors when they’re actually security engineers, and this fundamental misunderstanding is creating unexpected problems.

The Security Engineer’s Dilemma

Legal skills have historically been among the most illiquid in professional markets, exactly the kind of expertise scarcity that AI disruption targets. Part of the reason is that the cost of error is extreme: a single fabricated case citation can end careers. Imagine a security engineer whose monitoring system had a 99% accuracy rate, but the 1% it missed included critical breaches that were mishandled so badly that the company’s reputation was irreparably damaged. Reputation operates on decades-long timescales because clients hire lawyers based on their track record of successfully defending against legal threats.

Just as I described in software development, AI injecting liquidity into skill markets leads to repricing rather than elimination. Where legal expertise was once scarce and slowly accumulated, AI makes certain capabilities more accessible while reshaping where the real value lies.

Traditional legal training worked like security engineer mentorship. Junior lawyers learned threat modeling by working on real cases under senior guidance. They’d review contracts while learning to spot potential vulnerabilities, draft briefs while understanding how opposing counsel might attack their arguments, and structure deals while considering regulatory risks. Quality control and knowledge transfer happened simultaneously: seniors reviewing junior work would catch errors while teaching systematic risk assessment.

AI is disrupting this model in ways that would terrify any security team lead. Document review, research, and drafting that once provided junior lawyers with hands-on threat modeling experience are being automated. The tasks that taught pattern recognition (learning to spot the subtle contract clause that creates liability exposure, recognizing the factual detail that undermines a legal argument) are disappearing.

This creates the same middle-tier squeeze I explored in software development: acute pressure between increasingly capable juniors and hyper-productive seniors. Junior lawyers become more capable with AI assistance while partners extend their span of control through AI tools, leaving mid-level associates caught in a compressed middle where their traditional role as the “throughput engine” gets automated away.

Here’s the economic problem: when AI saves 20 hours on document review, partners face a choice between investing those hours in unpaid training or billing them elsewhere. The math strongly favors billing. Fixed-fee arrangements make this worse, because junior lawyers become cost centers rather than revenue generators during their learning phase.
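To make that math concrete, here is a rough sketch in Python. Only the 20 hours saved comes from the example above; the hourly rate and the share of time given to training are hypothetical placeholders, not figures from any firm.

```python
def training_opportunity_cost(hours_saved, billing_rate, training_share):
    """Revenue forgone when part of the AI-saved time goes to unpaid training.

    All inputs are illustrative assumptions, not data from the article.
    """
    if not 0.0 <= training_share <= 1.0:
        raise ValueError("training_share must be between 0 and 1")
    training_hours = hours_saved * training_share
    return training_hours * billing_rate


HOURS_SAVED = 20            # from the document review example above
HYPOTHETICAL_RATE = 500.0   # assumed hourly billing rate, for illustration only
TRAINING_SHARE = 0.5        # assumed: half of the saved time spent mentoring

forgone = training_opportunity_cost(HOURS_SAVED, HYPOTHETICAL_RATE, TRAINING_SHARE)
print(f"Forgone billings for {HOURS_SAVED * TRAINING_SHARE:.0f} training hours: {forgone:.2f}")
```

Whatever numbers a firm plugs in, the cost of training shows up immediately in forgone billings, while the benefit (a more capable associate) arrives years later and may leave with that associate.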

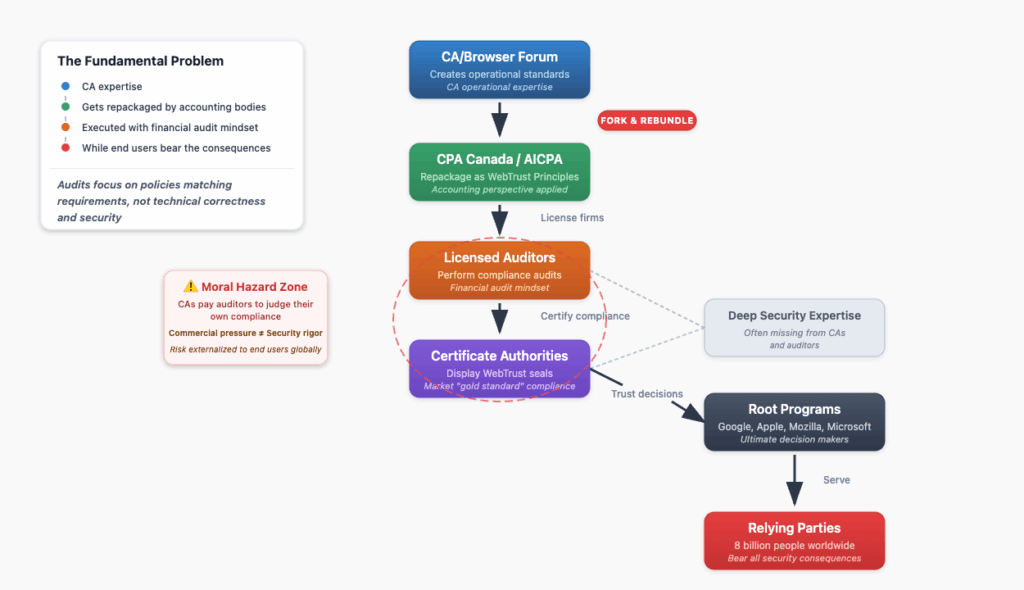

The Governance Crisis

Current legal AI focuses on document creation, research assistance, and drafting support: essentially better word processors and search engines. While impressive, these tools have created a governance burden that’s consuming the time that should be spent teaching threat modeling skills.

This mirrors what I described in compliance as “automation asymmetry,” where sophisticated AI-generated documents overwhelm human review capacity. Just as automated compliance artifacts can mask underlying issues with perfect formatting, AI legal documents create a veneer of competence that requires more, not less, senior oversight.

Every AI-generated document requires human verification. Partners must create AI policies, review procedures, and verification systems. The American Bar Association found lawyer concerns about AI accuracy increased from 58% in 2023 to 75% in 2025; exposure to these tools has heightened rather than reduced professional anxiety. Firms now maintain an average of 18 different AI solutions, each requiring its own governance framework.

This is like asking security engineers to spend their time verifying automated log reports instead of designing security architectures. Quality control has shifted from collaborative threat assessment to bureaucratic oversight. Instead of senior lawyers working through junior analysis while explaining legal reasoning, we have senior lawyers checking AI output for fabricated cases and subtle errors.

The teaching moments are gone. The efficient combination of quality control and knowledge transfer that characterized traditional review has been broken into separate activities. Senior expertise gets consumed by managing AI rather than developing human threat modeling capabilities.

There’s a deeper concern too. Security engineers know that over-reliance on automated tools can weaken situational awareness and pattern recognition. Legal reasoning requires the same kind of layered understanding: knowing not just what the law says, but how different doctrines interact, how factual variations affect outcomes, and how strategic considerations shape arguments. AI can provide correct answers without fostering the threat modeling instincts that distinguish excellent lawyers.

The problem isn’t that we have AI in legal practice; it’s that we have the wrong kind of AI.

Building Better Security Tools

The fundamental problem is architectural. Current legal AI treats legal work as document processing when it’s actually systematic threat assessment. Most legal AI focuses on output: drafting contracts, researching case law, generating briefs. This misses the intellectual core, which is the systematic risk analysis frameworks that constitute legal reasoning.

Good lawyers, like security engineers, rely on systematic approaches. Constitutional analysis follows specific threat models for government overreach. Contract law has systematic frameworks for identifying formation vulnerabilities, performance risks, and breach scenarios. Tort analysis uses systematic negligence assessment patterns. These frameworks require internalization through guided practice that current AI disrupts.

But imagine a different kind of AI: tools designed for threat modeling rather than document creation. Instead of generating contract language, AI that helps identify potential vulnerabilities in proposed terms. Instead of researching cases, AI that systematically maps the legal threat landscape for a particular situation. Instead of drafting briefs, AI that helps build comprehensive defensive arguments while teaching the reasoning patterns that make them effective.

This would change governance entirely. Instead of verifying AI-generated content, lawyers would verify AI-enhanced threat assessments. Systems that show their analytical work (explaining why certain contract clauses create liability exposure, how different factual scenarios affect legal outcomes) enable both quality control and learning.
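As a loose illustration of what “showing analytical work” could mean in practice, here is a minimal Python sketch of an assessment record in which every flagged risk must carry its reasoning. The class names, fields, severity scale, and the sample clause are all hypothetical; this is not a description of any existing legal AI product.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Finding:
    clause: str      # the contract language being assessed
    risk: str        # the exposure it may create
    reasoning: str   # why the tool believes the risk exists
    severity: int    # hypothetical scale: 1 (low) to 5 (high)


@dataclass
class ThreatAssessment:
    document: str
    findings: List[Finding] = field(default_factory=list)

    def add(self, finding: Finding) -> None:
        self.findings.append(finding)

    def needs_senior_review(self, threshold: int = 4) -> List[Finding]:
        """Findings severe enough to walk through with a senior lawyer."""
        return [f for f in self.findings if f.severity >= threshold]

    def unexplained(self) -> List[Finding]:
        """Findings with no stated reasoning, which cannot be verified or taught from."""
        return [f for f in self.findings if not f.reasoning.strip()]


# Hypothetical example data, for illustration only.
assessment = ThreatAssessment(document="Sample services agreement")
assessment.add(Finding(
    clause="Indemnification with no liability cap",
    risk="Unbounded exposure for third-party claims",
    reasoning="No limitation-of-liability clause references this section.",
    severity=5,
))
assessment.add(Finding(
    clause="Notice by email only",
    risk="Disputed delivery of termination notice",
    reasoning="",
    severity=2,
))

for f in assessment.needs_senior_review():
    print(f"{f.clause}: {f.risk} (because: {f.reasoning})")
```

The design choice worth noticing is that reasoning is a first-class field: a reviewer checks the argument rather than just the output, which is where quality control and teaching can happen in the same pass.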

Security engineers don’t just need better log parsing tools; they need better threat modeling frameworks. Lawyers face the same challenge. The 19th-century apprenticeship model worked because it focused on developing systematic risk assessment capabilities through guided practice with real threats.

The Pattern Continues

This completes a progression I’ve traced across professions, and the pattern is remarkably consistent. In software development, execution capabilities are being liquified, but systems architects who understand complex threat models gain value. In compliance, process expertise is being liquified, but systematic thinkers who can model regulatory interactions across domains have advantages.

In law, legal reasoning itself could be liquified, but the outcome depends on whether we develop AI that enhances threat modeling capabilities rather than just automating document production. The sophisticated legal analysis I love hearing on Advisory Opinions represents systematic risk assessment applied to complex problems. This is exactly the kind of security engineering thinking that creates real value.

The pattern across all three domains is clear: as AI makes execution more liquid, value shifts toward orchestration. In software, orchestrators who build AI-augmented workflows and internal platforms create structural advantages. In compliance, orchestrators who design intelligent systems for continuous assurance gain leverage over reactive, manual approaches.

Current legal AI accidentally creates governance overhead that eliminates mentorship. But reasoning-focused AI could enhance both efficiency and competence development. Instead of making lawyers better document processors, we could make them better security engineers: orchestrators who design systematic threat assessment capabilities rather than just executors who handle individual risks.

The choice matters because society depends on lawyers who can systematically identify legal threats and build robust defenses. Current AI accidentally undermines this by turning lawyers into document reviewers instead of security architects.

The Washington apprenticeship program that first caught my attention represents something important: learning through guided practice with real threats rather than theoretical study. The future may not eliminate apprenticeship but transform it, producing the first generation to learn legal threat modeling through AI designed to build rather than replace systematic reasoning capabilities.

When I listen to Advisory Opinions, I’m hearing security engineers for text working through complex threat assessments. That’s the kind of thinking we need more of, not less. We can build AI that enhances rather than replaces it.