Microsoft's was the first browser root program to have an open and transparent process for admitting CAs, as well as the first to have public policy, audit, and technical requirements that CAs must comply with.

Today, while the other browsers have joined in and even raised the bar significantly, Microsoft continues to operate its root program in an open and clear way.

One example of this is the list Microsoft publishes of the companies that meet its requirements; you can see this list here.

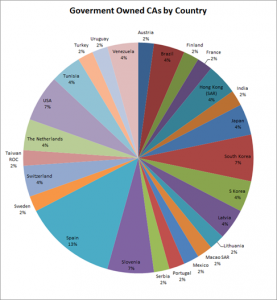

There are a number of interesting things we can glean from this list; one of them is how many governments operate their own certificate authorities.

For example, as of March 11, 2011, there are a total of 46 government-owned and -operated “Root Certificates” in the Microsoft Root Program. They are:

| Current CA Owner | Country | Thumbprint |
|---|---|---|
| Government of Austria, Austria Telekom-Control Commission | Austria | e7 07 15 f6 f7 28 36 5b 51 90 e2 71 de e4 c6 5e be ea ca f3 |
| Government of Brazil, Autoridade Certificadora Raiz Brasileira | Brazil | 8e fd ca bc 93 e6 1e 92 5d 4d 1d ed 18 1a 43 20 a4 67 a1 39 |
| Government of Brazil, Instituto Nacional de Tecnologia da Informação (ITI) | Brazil | 70 5d 2b 45 65 c7 04 7a 54 06 94 a7 9a f7 ab b8 42 bd c1 61 |
| Government of Finland, Population Register Centre | Finland | fa a7 d9 fb 31 b7 46 f2 00 a8 5e 65 79 76 13 d8 16 e0 63 b5 |
| Government of France | France | 60 d6 89 74 b5 c2 65 9e 8a 0f c1 88 7c 88 d2 46 69 1b 18 2c |
| Government of Hong Kong (SAR), Hongkong Post | Hong Kong (SAR) | d6 da a8 20 8d 09 d2 15 4d 24 b5 2f cb 34 6e b2 58 b2 8a 58 |
| Government of Hong Kong (SAR), Hongkong Post | Hong Kong (SAR) | e0 92 5e 18 c7 76 5e 22 da bd 94 27 52 9d a6 af 4e 06 64 28 |
| Government of India, Ministry of Communications & Information Technology, Controller of Certifying Authorities (CCA) | India | 97 22 6a ae 4a 7a 64 a5 9b d1 67 87 f2 7f 84 1c 0a 00 1f d0 |
| Government of Japan, Ministry of Internal Affairs and Communications | Japan | 96 83 38 f1 13 e3 6a 7b ab dd 08 f7 77 63 91 a6 87 36 58 2e |
| Government of Japan, Ministry of Internal Affairs and Communications | Japan | 7f 8a b0 cf d0 51 87 6a 66 f3 36 0f 47 c8 8d 8c d3 35 fc 74 |
| Government of Korea, Korea Information Security Agency (KISA) | South Korea | 5f 4e 1f cf 31 b7 91 3b 85 0b 54 f6 e5 ff 50 1a 2b 6f c6 cf |
| Government of Korea, Korea Information Security Agency (KISA) | South Korea | 02 72 68 29 3e 5f 5d 17 aa a4 b3 c3 e6 36 1e 1f 92 57 5e aa |
| Government of Korea, Korea Information Security Agency (KISA) | South Korea | f5 c2 7c f5 ff f3 02 9a cf 1a 1a 4b ec 7e e1 96 4c 77 d7 84 |
| Government of Korea, Ministry of Government Administration and Home Affairs (MOGAHA) | South Korea | 63 4c 3b 02 30 cf 1b 78 b4 56 9f ec f2 c0 4a 86 52 ef ef 0e |
| Government of Korea, Ministry of Government Administration and Home Affairs (MOGAHA) | South Korea | 20 cb 59 4f b4 ed d8 95 76 3f d5 25 4e 95 9a 66 74 c6 ee b2 |
| Government of Latvia, Latvian Post | Latvia | 08 64 18 e9 06 ce e8 9c 23 53 b6 e2 7f bd 9e 74 39 f7 63 16 |
| Government of Latvia, Latvian State Radio & Television Centre (LVRTC) | Latvia | c9 32 1d e6 b5 a8 26 66 cf 69 71 a1 8a 56 f2 d3 a8 67 56 02 |
| Government of Lithuania, Registru Centras | Lithuania | 97 1d 34 86 fc 1e 8e 63 15 f7 c6 f2 e1 29 67 c7 24 34 22 14 |
| Government of Macao, Macao Post | Macao SAR | 89 c3 2e 6b 52 4e 4d 65 38 8b 9e ce dc 63 71 34 ed 41 93 a3 |
| Government of Mexico, Autoridad Certificadora Raiz de la Secretaria de Economia | Mexico | 34 d4 99 42 6f 9f c2 bb 27 b0 75 ba b6 82 aa e5 ef fc ba 74 |
| Government of Portugal, Sistema de Certificação Electrónica do Estado (SCEE) / Electronic Certification System of the State | Portugal | 39 13 85 3e 45 c4 39 a2 da 71 8c df b6 f3 e0 33 e0 4f ee 71 |
| Government of Serbia, PTT saobraćaja „Srbija” (Serbian Post) | Serbia | d6 bf 79 94 f4 2b e5 fa 29 da 0b d7 58 7b 59 1f 47 a4 4f 22 |
| Government of Slovenia, Posta Slovenije (POSTArCA) | Slovenia | b1 ea c3 e5 b8 24 76 e9 d5 0b 1e c6 7d 2c c1 1e 12 e0 b4 91 |
| Government of Slovenia, Slovenian General Certification Authority (SIGEN-CA) | Slovenia | 3e 42 a1 87 06 bd 0c 9c cf 59 47 50 d2 e4 d6 ab 00 48 fd c4 |
| Government of Slovenia, Slovenian Governmental Certification Authority (SIGOV-CA) | Slovenia | 7f b9 e2 c9 95 c9 7a 93 9f 9e 81 a0 7a ea 9b 4d 70 46 34 96 |
| Government of Spain (CAV), Izenpe S.A. | Spain | 4a 3f 8d 6b dc 0e 1e cf cd 72 e3 77 de f2 d7 ff 92 c1 9b c7 |
| Government of Spain (CAV), Izenpe S.A. | Spain | 30 77 9e 93 15 02 2e 94 85 6a 3f f8 bc f8 15 b0 82 f9 ae fd |
| Government of Spain, Autoritat de Certificació de la Comunitat Valenciana (ACCV) | Spain | a0 73 e5 c5 bd 43 61 0d 86 4c 21 13 0a 85 58 57 cc 9c ea 46 |
| Government of Spain, Dirección General de la Policía – Ministerio del Interior – España. | Spain | b3 8f ec ec 0b 14 8a a6 86 c3 d0 0f 01 ec c8 84 8e 80 85 eb |
| Government of Spain, Fábrica Nacional de Moneda y Timbre (FNMT) | Spain | 43 f9 b1 10 d5 ba fd 48 22 52 31 b0 d0 08 2b 37 2f ef 9a 54 |
| Government of Spain, Fábrica Nacional de Moneda y Timbre (FNMT) | Spain | b8 65 13 0b ed ca 38 d2 7f 69 92 94 20 77 0b ed 86 ef bc 10 |
| Government of Sweden, Inera AB (SITHS-Secure IT within Health care Service) | Sweden | 16 d8 66 35 af 13 41 cd 34 79 94 45 eb 60 3e 27 37 02 96 5d |
| Government of Switzerland, Bundesamt für Informatik und Telekommunikation (BIT) | Switzerland | 6b 81 44 6a 5c dd f4 74 a0 f8 00 ff be 69 fd 0d b6 28 75 16 |
| Government of Switzerland, Bundesamt für Informatik und Telekommunikation (BIT) | Switzerland | 25 3f 77 5b 0e 77 97 ab 64 5f 15 91 55 97 c3 9e 26 36 31 d1 |
| Government of Taiwan, Government Root Certification Authority (GRCA) | Taiwan ROC | f4 8b 11 bf de ab be 94 54 20 71 e6 41 de 6b be 88 2b 40 b9 |
| Government of The Netherlands, PKIoverheid | The Netherlands | 10 1d fa 3f d5 0b cb bb 9b b5 60 0c 19 55 a4 1a f4 73 3a 04 |
| Government of The Netherlands, PKIoverheid | The Netherlands | 59 af 82 79 91 86 c7 b4 75 07 cb cf 03 57 46 eb 04 dd b7 16 |
| Government of the United States of America, Federal PKI | USA | 76 b7 60 96 dd 14 56 29 ac 75 85 d3 70 63 c1 bc 47 86 1c 8b |
| Government of the United States of America, Federal PKI | USA | cb 44 a0 97 85 7c 45 fa 18 7e d9 52 08 6c b9 84 1f 2d 51 b5 |
| Government of the United States of America, Federal PKI | USA | 90 5f 94 2f d9 f2 8f 67 9b 37 81 80 fd 4f 84 63 47 f6 45 c1 |
| Government of Tunisia, Agence National de Certification Electronique / National Digital Certification Agency (ANCE/NDCA) | Tunisia | 30 70 f8 83 3e 4a a6 80 3e 09 a6 46 ae 3f 7d 8a e1 fd 16 54 |
| Government of Tunisia, Agence National de Certification Electronique / National Digital Certification Agency (ANCE/NDCA) | Tunisia | d9 04 08 0a 49 29 c8 38 e9 f1 85 ec f7 a2 2d ef 99 34 24 07 |
| Government of Turkey, Kamu Sertifikasyon Merkezi (Kamu SM) | Turkey | 1b 4b 39 61 26 27 6b 64 91 a2 68 6d d7 02 43 21 2d 1f 1d 96 |
| Government of Uruguay, Correo Uruguayo | Uruguay | f9 dd 19 26 6b 20 43 f1 fe 4b 3d cb 01 90 af f1 1f 31 a6 9d |
| Government of Venezuela, Superintendencia de Servicios de Certificación Electrónica (SUSCERTE) | Venezuela | dd 83 c5 19 d4 34 81 fa d4 c2 2c 03 d7 02 fe 9f 3b 22 f5 17 |
| Government of Venezuela, Superintendencia de Servicios de Certificación Electrónica (SUSCERTE) | Venezuela | 39 8e be 9c 0f 46 c0 79 c3 c7 af e0 7a 2f dd 9f ae 5f 8a 5c |

On closer inspection, these 46 certificates are operated by 33 different agencies in 25 countries and territories.

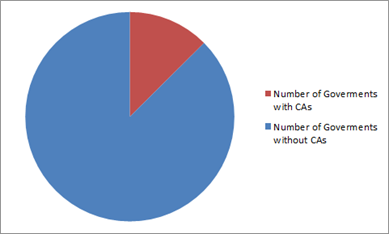

Wikipedia tells us there are 207 governments, so apparently roughly one in eight of them (about 12%) operate their own globally trusted root.
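These totals are easy to miscount by eye, so here is a small Python sketch that tallies the table. It is illustrative only: `GOVERNMENT_ROOTS`, `WORLD_GOVERNMENTS`, and `tally` are hypothetical names introduced here, the entries were transcribed by hand from the table with agency names shortened, and the 207 figure is the Wikipedia number quoted above.

```python
"""Tally the government-operated roots listed in the table.

Each entry is (country, operating agency, number of root certificates),
transcribed by hand from the table; agency names are shortened.
"""

GOVERNMENT_ROOTS = [
    ("Austria", "Austria Telekom-Control Commission", 1),
    ("Brazil", "Autoridade Certificadora Raiz Brasileira", 1),
    ("Brazil", "Instituto Nacional de Tecnologia da Informação (ITI)", 1),
    ("Finland", "Population Register Centre", 1),
    ("France", "Government of France", 1),
    ("Hong Kong (SAR)", "Hongkong Post", 2),
    ("India", "Controller of Certifying Authorities (CCA)", 1),
    ("Japan", "Ministry of Internal Affairs and Communications", 2),
    ("South Korea", "Korea Information Security Agency (KISA)", 3),
    ("South Korea", "Ministry of Government Administration and Home Affairs (MOGAHA)", 2),
    ("Latvia", "Latvian Post", 1),
    ("Latvia", "Latvian State Radio & Television Centre (LVRTC)", 1),
    ("Lithuania", "Registru Centras", 1),
    ("Macao SAR", "Macao Post", 1),
    ("Mexico", "Secretaria de Economia", 1),
    ("Portugal", "Sistema de Certificação Electrónica do Estado (SCEE)", 1),
    ("Serbia", "PTT saobraćaja Srbija (Serbian Post)", 1),
    ("Slovenia", "Posta Slovenije (POSTArCA)", 1),
    ("Slovenia", "SIGEN-CA", 1),
    ("Slovenia", "SIGOV-CA", 1),
    ("Spain", "Izenpe S.A.", 2),
    ("Spain", "ACCV", 1),
    ("Spain", "Dirección General de la Policía", 1),
    ("Spain", "FNMT", 2),
    ("Sweden", "Inera AB (SITHS)", 1),
    ("Switzerland", "Bundesamt für Informatik und Telekommunikation (BIT)", 2),
    ("Taiwan ROC", "Government Root Certification Authority (GRCA)", 1),
    ("The Netherlands", "PKIoverheid", 2),
    ("USA", "Federal PKI", 3),
    ("Tunisia", "ANCE/NDCA", 2),
    ("Turkey", "Kamu Sertifikasyon Merkezi (Kamu SM)", 1),
    ("Uruguay", "Correo Uruguayo", 1),
    ("Venezuela", "SUSCERTE", 2),
]

WORLD_GOVERNMENTS = 207  # figure quoted from Wikipedia in the text


def tally(entries):
    """Return (certificates, agencies, countries) for the given entries."""
    certificates = sum(count for _, _, count in entries)
    agencies = len({agency for _, agency, _ in entries})
    countries = len({country for country, _, _ in entries})
    return certificates, agencies, countries


if __name__ == "__main__":
    certs, agencies, countries = tally(GOVERNMENT_ROOTS)
    share = countries / WORLD_GOVERNMENTS
    print(f"{certs} certificates, {agencies} agencies, {countries} countries")
    print(f"{share:.1%} of {WORLD_GOVERNMENTS} governments")
```

Grouping rows by agency with a certificate count keeps the data short while still letting the script recover all three totals; Hong Kong, Macao, and Taiwan are counted as separate entries because the table lists them that way.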

Though I love to travel and consider myself a citizen of the world, I have never needed to communicate with any of these governments using their own PKIs, so I have personally marked their roots as “revoked” in CryptoAPI. I also manually manage which of the commercial root CAs I trust.
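Each value in the Thumbprint column is a SHA-1 hash of the certificate's DER encoding (20 bytes, shown as hex pairs), the same value Windows displays as a certificate's Thumbprint. Below is a minimal Python sketch of checking a certificate against a personal distrust list by thumbprint, assuming you already have the certificate's DER bytes. The helpers `thumbprint`, `normalize`, `is_distrusted`, and the `DISTRUSTED` set are hypothetical, for illustration only; they only identify certificates and do not change anything in CryptoAPI.

```python
"""Compute a certificate's SHA-1 thumbprint and check it against a distrust list.

Assumes the certificate is already available as DER bytes; obtaining it
(for example, exporting it from the Windows certificate store) is out of scope.
"""
import hashlib


def thumbprint(der_bytes: bytes) -> str:
    """Return the SHA-1 digest of the DER encoding, formatted like the table:
    lowercase hex pairs separated by single spaces."""
    digest = hashlib.sha1(der_bytes).hexdigest()
    return " ".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def normalize(tp: str) -> str:
    """Drop spaces and colons and lowercase, so differently formatted
    thumbprints (e.g. 'E7:07:15...' vs 'e7 07 15...') compare equal."""
    return tp.replace(" ", "").replace(":", "").lower()


# Hypothetical personal distrust list: two thumbprints copied from the table.
DISTRUSTED = {
    normalize("e7 07 15 f6 f7 28 36 5b 51 90 e2 71 de e4 c6 5e be ea ca f3"),  # Austria
    normalize("76 b7 60 96 dd 14 56 29 ac 75 85 d3 70 63 c1 bc 47 86 1c 8b"),  # USA, Federal PKI
}


def is_distrusted(der_bytes: bytes, distrusted=DISTRUSTED) -> bool:
    """True if the certificate's thumbprint appears in the distrust set."""
    return normalize(thumbprint(der_bytes)) in distrusted
```

On Windows, the standard library's `ssl.enum_certificates("ROOT")` returns `(cert_bytes, encoding_type, trust)` tuples; entries whose encoding type is `"x509_asn"` are DER certificates that could be passed to `is_distrusted` to spot these roots in the local store.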

There are some other interesting observations we can glean from the Root Program membership as well; I will cover them in later posts.