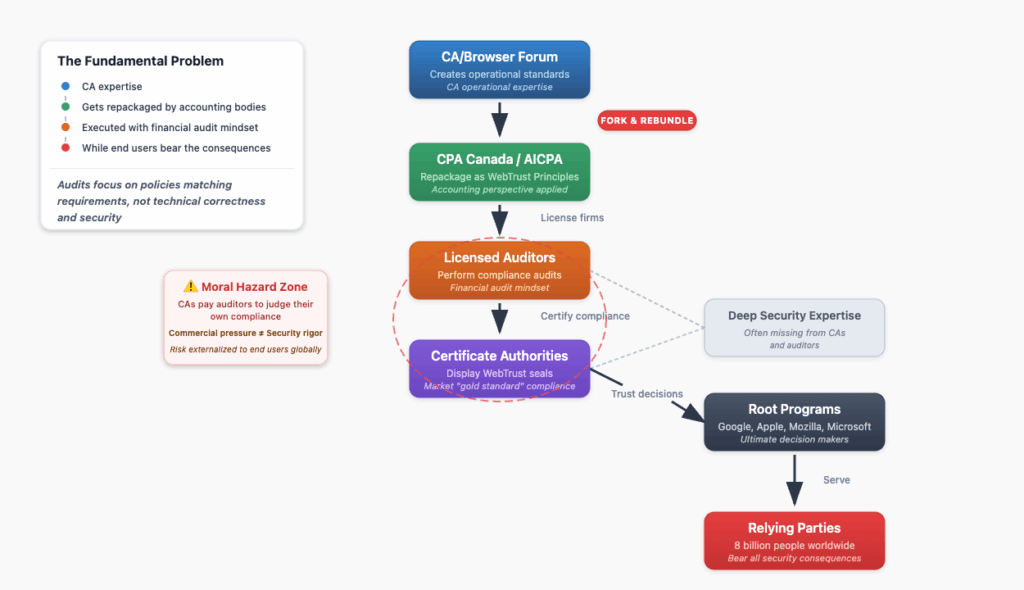

When we discuss the WebPKI, we naturally focus on Certificate Authorities (CAs), browser root programs, and the standards established by the CA/Browser Forum. Yet for these standards to carry real weight, they must be translated into formal, auditable compliance regimes. This is where assurance frameworks enter the picture, typically building upon the foundational work of the CA/Browser Forum.

The WebTrust framework, overseen by professional accounting bodies, is only one way to translate CA/Browser Forum requirements into auditable criteria. In Europe, a parallel scheme relies on the European Telecommunications Standards Institute (ETSI) for the technical rules, with audits carried out by each country’s ISO/IEC 17065-accredited Conformity Assessment Bodies. Both frameworks follow the same pattern: they take the CA/Browser Forum standards and repackage them into structured compliance audit programs.

Understanding the power dynamics here is crucial. While these audits scrutinize CAs, they exercise no direct control over browser root programs. The root programs at Google, Apple, Microsoft, and Mozilla remain the ultimate arbiters. They maintain their own policies, standards, and processes that extend beyond what these audit regimes cover. No one compels the browsers to require WebTrust or ETSI audits; they do so voluntarily, because clean reports from auditors who have examined a CA’s operations in person help them judge whether the CA is competent and living up to its promises.

How WebTrust Actually Works

With this context established, let’s examine the WebTrust model prevalent across North America and other international jurisdictions. In North America, administration operates as a partnership between the AICPA (for the U.S.) and CPA Canada. For most other countries, CPA Canada directly manages international enrollment, collaborating with local accounting bodies like the HKICPA for professional oversight.

These organizations function through a defined sequence of procedural steps: First, they participate in the CA/Browser Forum to provide auditability perspectives. Second, they fork the core technical requirements and rebundle them as the WebTrust Principles and Criteria. Third, they license accounting firms to conduct audits based on these principles and criteria. Fourth, they oversee licensed practitioners through inspection and disciplinary processes.

The audit process follows a mechanical flow. CA management produces an Assertion Letter claiming compliance. The auditor then tests that assertion and produces an Attestation Report, a key data point for browser root programs. Upon successful completion, the CA can display the WebTrust seal.

This process creates a critical misconception about what the WebTrust seal actually signifies. Some marketing approaches position successful audits as a “gold seal” of approval, suggesting they represent the pinnacle of security and best practices. They do not. A clean WebTrust report simply confirms that a CA has met the bare minimum requirements for WebPKI participation. It represents the floor, not the ceiling. The danger emerges when CAs treat this floor as their target; these are often the same CAs responsible for significant mis-issuances and ultimate distrust by browser root programs.

Where Incentives Break Down

Does this system guarantee consistent, high-quality CA operations? The reality is that the system’s incentives and structure actively work against that goal. This isn’t a matter of malicious auditors; we’re dealing with human nature interacting with a flawed system, compounded by a critical gap between general audit principles and deep technical expertise.

Security professionals approach assessments expecting auditors to actively seek problems. That incentive doesn’t exist here. CPA audits are fundamentally designed for financial compliance verification, ensuring documented procedures match stated policies. Security assessments, by contrast, actively hunt for vulnerabilities and weaknesses. These represent entirely different audit philosophies: one seeks to confirm documented compliance, the other seeks to discover hidden risks.

This philosophical gap becomes critical when deep technical expertise meets general accounting principles. Even with impeccably ethical and principled auditors, you can’t catch what you don’t understand. A financial auditor trained to verify that procedures are documented and followed may completely miss that a technically sound procedure creates serious security vulnerabilities.

This creates a two-layer problem. First, subtle but critical ambiguities or absent content in a CA’s Certification Practice Statement (CPS) and practices might not register as problems to non-specialists. Second, even when auditors do spot vague language, commercial pressures create an impossible dilemma: push the customer toward greater specificity (risking the engagement and future revenue), or let it slide due to the absence of explicit requirements.

This dynamic creates a classic moral hazard, an issue similar to the one we explored in our recent post. Auditors are paid by the very entities they’re supposed to scrutinize critically, creating incentives to overlook issues in order to maintain business relationships. Meanwhile, the consequences of missed problems (security failures, compromised trust, and operational disruptions) fall on the broader WebPKI ecosystem and billions of relying parties who had no voice in the audit process. This dynamic drives the inconsistencies we observe today and reflects a broader moral hazard problem plaguing the entire WebPKI ecosystem, where those making critical security decisions rarely bear the full consequences of poor choices.

This reality presents a prime opportunity for disruption through intelligent automation. The core problem lies in expertise “illiquidity”: deep compliance knowledge remains locked in specialists’ minds, trapped in manual processes, and is prohibitively expensive to scale.

Current compliance automation has only created “automation asymmetry,” empowering auditees to generate voluminous, polished artifacts that overwhelm manual auditors. This transforms audits from operational fact-finding into reviews of well-presented fiction.

The solution requires creating true “skill liquidity” through AI: not just another LLM, but an intelligent compliance platform embedding structured knowledge from seasoned experts. This system would feature an ontology of controls, evidence requirements, and policy interdependencies, capable of performing the brutally time-consuming rote work that consumes up to 30% of manual audits, such as policy mapping and change log scrutiny, with superior speed and consistency.
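To make the idea of an ontology of controls, evidence requirements, and policy interdependencies a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Control` and `PolicyMapping` types, the control IDs, the evidence names, and the CPS section number are hypothetical and are not taken from WebTrust, ETSI, or any real platform. It only shows the shape of the rote policy-mapping check such a system might automate.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Control:
    """One auditable requirement in a hypothetical control ontology."""
    control_id: str
    description: str
    evidence_required: tuple[str, ...]   # evidence types an auditor expects
    depends_on: tuple[str, ...] = ()     # other controls this one relies on


@dataclass
class PolicyMapping:
    """What a CA has offered per control: CPS sections and evidence types."""
    cps_sections: dict[str, list[str]] = field(default_factory=dict)
    evidence: dict[str, set[str]] = field(default_factory=dict)


def find_gaps(controls, mapping):
    """Return findings for unmapped, under-evidenced, or dangling controls."""
    findings = []
    known = {c.control_id for c in controls}
    for c in controls:
        # Policy mapping: every control should point at some CPS text.
        if not mapping.cps_sections.get(c.control_id):
            findings.append(f"{c.control_id}: no CPS section mapped")
        # Evidence requirements: compare expected against supplied.
        missing = set(c.evidence_required) - mapping.evidence.get(c.control_id, set())
        if missing:
            findings.append(f"{c.control_id}: missing evidence {sorted(missing)}")
        # Interdependencies: a dependency must itself be a known control.
        for dep in c.depends_on:
            if dep not in known:
                findings.append(f"{c.control_id}: depends on unknown control {dep}")
    return findings


# Illustrative data only; IDs, evidence names, and section numbers are made up.
controls = [
    Control("DOMAIN-VALIDATION", "Domain control is validated before issuance",
            ("validation_log", "procedure_doc")),
    Control("KEY-PROTECTION", "CA keys are held in an HSM",
            ("hsm_inventory",), depends_on=("PHYSICAL-ACCESS",)),
]
mapping = PolicyMapping(
    cps_sections={"DOMAIN-VALIDATION": ["3.2.2"]},
    evidence={"DOMAIN-VALIDATION": {"procedure_doc"}},
)
findings = find_gaps(controls, mapping)
for finding in findings:
    print(finding)
```

A check like this establishes only that something was mapped and supplied. Whether the mapped text and evidence actually demonstrate an effective control is still a judgment call for a human expert.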

When auditors and program administrators gain access to this capability, the incentive model fundamentally transforms. AI can objectively flag ambiguities and baseline deviations that humans might feel pressured to overlook or lack the skill to notice, directly addressing the moral hazard inherent in the current system. When compliance findings become objective data points generated by intelligent systems rather than subjective judgments influenced by commercial relationships, they become much harder to ignore or rationalize away.
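As a deliberately simple illustration of flagging ambiguities as data points, the Python sketch below scans policy text for hedging phrases. It is a keyword heuristic, not AI, and the phrase list, the function name `flag_vague_statements`, and the sample CPS wording are all assumptions invented for this example. A real system would draw its patterns and context from experienced reviewers.

```python
import re

# Assumed, non-exhaustive list of hedging phrases, for illustration only.
VAGUE_PATTERNS = [
    r"\bas appropriate\b",
    r"\bwhere (?:feasible|practical|possible)\b",
    r"\bperiodically\b",
    r"\breasonable (?:efforts|measures|steps)\b",
    r"\bin a timely manner\b",
]
_VAGUE_RE = re.compile("|".join(VAGUE_PATTERNS), re.IGNORECASE)


def flag_vague_statements(cps_text):
    """Return (line_number, phrase, line) for each vague phrase found."""
    findings = []
    for number, line in enumerate(cps_text.splitlines(), start=1):
        for match in _VAGUE_RE.finditer(line):
            findings.append((number, match.group(0).lower(), line.strip()))
    return findings


# Made-up CPS wording; not quoted from any real CA's documents.
sample = """\
Audit logs are reviewed periodically by trusted personnel.
Certificates are revoked within 24 hours of a confirmed key compromise.
The CA takes reasonable measures to protect private keys, as appropriate.
"""
flags = flag_vague_statements(sample)
for number, phrase, line in flags:
    print(f"line {number}: '{phrase}' in: {line}")
```

The second sample sentence states a measurable commitment and passes untouched, while the first and third are flagged. The point is that each flag is a reproducible record tied to a specific line, which is harder to wave away than an auditor’s informal impression.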

This transformation liquefies rote work, liberating human experts to focus on what truly matters: making high-stakes judgment calls, investigating system-flagged anomalies, and assessing control effectiveness rather than mere documented existence. This elevation transforms auditors from box-checkers into genuine strategic advisors, addressing the system’s core ethical challenges.

This new transparency and accountability shifts the entire dynamic. Audited entities can evolve from reactive fire drills to proactive, continuous self-assurance. Auditors, with amplified expertise and judgment focused on true anomalies rather than ambiguous documentation, can deliver exponentially greater value.

Moving Past the Performance

This brings us back to the fundamental issue: the biggest problem in communication is the illusion that it has occurred. Today’s use of the word “audit” creates a dangerous illusion of deep security assessment.

By leveraging AI to create skill liquidity, we can finally move past this illusion: automating the more mundane audit elements frees up room for the security and correctness assessments that everyone assumes are already happening. We can forge a future where compliance transcends audit performance theater, becoming instead a foundation of verifiable, continuous operational integrity, built on truly accessible expertise rather than scarce, locked-away knowledge.

The WebPKI ecosystem deserves better than the bare minimum. With the right tools and transformed incentives, we can finally deliver it.