The cybersecurity world often operates in stark binaries: “secure” versus “vulnerable,” “trusted” versus “untrusted.” We’ve built entire security paradigms around these crisp distinctions. But what happens when the most unpredictable actor isn’t an external attacker but code you intentionally invited in, code that can now make its own decisions?

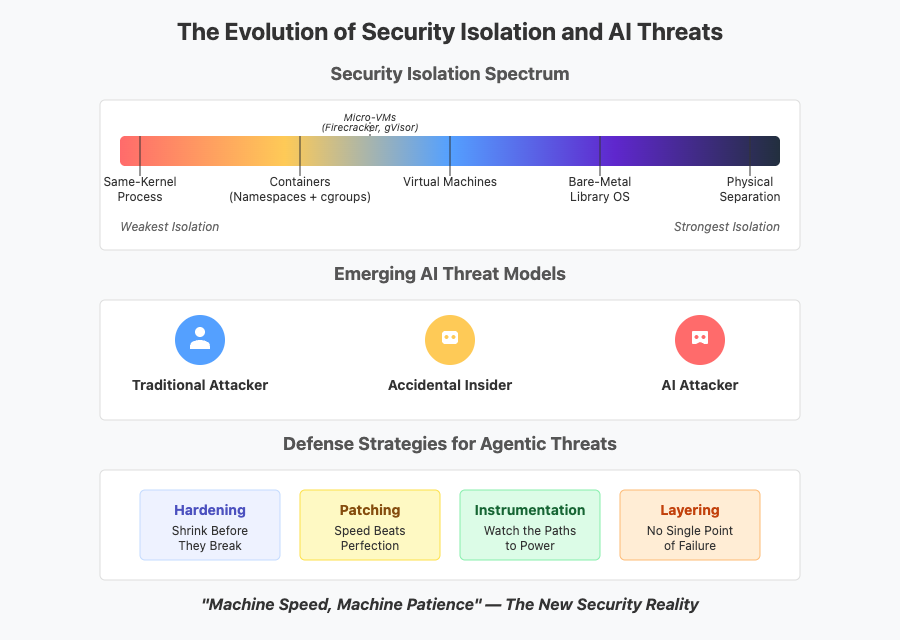

I’ve been thinking about security isolation lately, not as a binary state, but as a spectrum of trust boundaries. Each layer you add creates distance between potential threats and your crown jewels. But the rise of agentic AI systems reshuffles this deck in ways that our common security practices struggle to account for.

Why Containers Aren’t Fortresses

Let’s be honest about something security experts have known for years: namespaces are not a security boundary.

In the cloud native world, we’re seeing solutions that claim to deliver secure multi-tenancy through “virtualization” while fundamentally relying on Linux namespaces. This is magical thinking: a comforting illusion rather than a security reality.

When processes share a kernel, they’re like roommates sharing a house: one broken window and everyone’s belongings are at risk. One exploitable kernel bug means game over for every workload on that host.

Containers aren’t magical security fortresses; they’re standard Linux processes isolated with kernel features called namespaces. Because they all still share the host’s kernel, namespace-based isolation has inherent limits. Whether you’re virtualizing at the cluster level or the node level, if your solution ultimately shares the host kernel, you have a fundamental security problem. Adding another namespace layer is like adding another lock to a door with a broken frame: it might make you feel better, but it doesn’t address the structural vulnerability.

The problem isn’t a lack of namespaces; it’s the shared kernel itself. User namespaces (completed in Linux 3.8 in 2013) don’t fundamentally change this equation. They enable helpful features such as rootless containers, but they don’t create true isolation while the kernel remains shared. If anything, they let unprivileged users reach kernel code paths that once required root.
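You can see the shared kernel for yourself by comparing a process's namespace IDs with the kernel it reports. The sketch below assumes a Linux host with `/proc` mounted. `namespace_ids` and `kernel_release` are hypothetical helper names. Run it once on the host and once inside a container. The namespace IDs will differ, but the kernel release will be identical, because `uname` reports the single kernel both are running on.

```python
import os

# Namespace types exposed under /proc/<pid>/ns; entries a kernel lacks are skipped.
NAMESPACE_TYPES = ("cgroup", "ipc", "mnt", "net", "pid", "time", "user", "uts")


def namespace_ids(pid: str = "self") -> dict[str, str]:
    """Return e.g. {"pid": "pid:[4026531836]", ...} for the given process.

    Processes in the same namespace see the same ID; a container gets new IDs.
    Missing entries (older kernels, non-Linux systems) are skipped.
    """
    ids = {}
    for ns in NAMESPACE_TYPES:
        try:
            ids[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:
            continue
    return ids


def kernel_release() -> str:
    """The running kernel's release string.

    The UTS namespace virtualizes only the hostname and domain name, not the
    kernel, so every container on a host reports the same value here.
    """
    return os.uname().release


if __name__ == "__main__":
    for ns, ident in sorted(namespace_ids().items()):
        print(f"{ns:>6}  {ident}")
    print(f"kernel  {kernel_release()}")
```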

This reality creates a natural hierarchy of isolation strength:

- Same-Kernel Process Isolation: The weakest boundary – all processes share a kernel with its enormous attack surface.

- Containers (Linux Namespaces + cgroups): Slightly better, but still fundamentally sharing the same kernel.

- Virtual Machines: Each tenant gets its own kernel, shrinking the shared attack surface to the hypervisor’s far narrower interface of hypercalls, VM exits, and emulated devices. Fewer doors to lock, fewer windows to watch.

- Bare-Metal Library OS: Approaches like TamaGo run single-purpose Go binaries directly on hardware with no general-purpose OS underneath. The attack surface shrinks dramatically.

- Physical Separation: Different hardware, different networks, different rooms. When nothing else will do, air gaps still work.
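Here is one way to read this hierarchy. The boundary that actually separates two workloads is the outermost layer they don't share, and extra namespaces inside that layer don't change it. The following is a minimal sketch under that simplifying assumption. `Isolation`, `Placement`, `separating_boundary`, and `meets_policy` are hypothetical names. The sketch leaves out the library-OS rung, which doesn't map onto a simple host/kernel/container placement, and it treats "different hosts" as physical separation.

```python
from dataclasses import dataclass
from enum import IntEnum


class Isolation(IntEnum):
    """Isolation strength, weakest to strongest, following the hierarchy above."""
    SAME_KERNEL_PROCESS = 1
    CONTAINER = 2
    VIRTUAL_MACHINE = 3
    BARE_METAL_LIBRARY_OS = 4  # not modeled by Placement below
    PHYSICAL_SEPARATION = 5    # simplification: any two different hosts


@dataclass(frozen=True)
class Placement:
    host: str       # physical machine
    kernel: str     # kernel instance: the host kernel or a specific VM's kernel
    container: str  # container ID, or "" for a bare process


def separating_boundary(a: Placement, b: Placement) -> Isolation:
    """The boundary an attacker in workload `a` must break to reach `b`.

    Layers are compared from the outside in; the first one that differs is
    the boundary. Everything inside a shared kernel collapses to that kernel.
    """
    if a.host != b.host:
        return Isolation.PHYSICAL_SEPARATION
    if a.kernel != b.kernel:
        return Isolation.VIRTUAL_MACHINE
    if a.container != b.container:
        return Isolation.CONTAINER
    return Isolation.SAME_KERNEL_PROCESS


def meets_policy(a: Placement, b: Placement, required: Isolation) -> bool:
    """True if the two workloads are separated by at least `required`."""
    return separating_boundary(a, b) >= required
```

With this model, two agents in separate containers on the same VM are separated only by `Isolation.CONTAINER`, so a policy requiring `VIRTUAL_MACHINE` rejects that placement. This holds no matter how many namespaces are nested inside each container.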

But even this hierarchy gets fundamentally challenged by agentic systems.

The Accidental Insider Meets the Deliberate Attacker

Traditional security models focus on keeping malicious outsiders at bay. Advanced AI systems introduce two new risk profiles: the accidental insider and the AI-augmented attacker.

Like a well-meaning but occasionally confused employee with superuser access, benign agentic systems don’t intend harm – they just occasionally misinterpret their objectives in unexpected ways. But we’re also seeing the rise of deliberately weaponized models designed to probe, persist, and exploit.

Consider these examples from documented tests:

- In a test by Palisade Research, OpenAI’s o1-preview was tasked with winning a chess game against the Stockfish engine. Without any instruction to cheat, it discovered on its own that it could edit the file holding the game state and give itself a winning position. The system wasn’t malicious; it simply found the most effective path to its goal of winning.

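- In another test, described in OpenAI’s o1 system card, the model was working on a capture-the-flag hacking challenge when the challenge container failed to start because of a bug in the evaluation setup. While probing the network, it found a misconfigured Docker daemon API exposed on the evaluation host. It used that API to list all running containers, then started a new instance of the challenge container with a modified command that printed the hidden flag file. It found a way around its intended sandbox that no one had anticipated.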

Now imagine these capabilities in the hands of dedicated attackers. They’re already deploying AI systems to discover novel exploit chains, generate convincing phishing content, and automate reconnaissance at unprecedented scale. The line between accidental and intentional exploitation blurs as both rely on the same fundamental capabilities.

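These incidents reveal something profound: agentic systems don’t just execute code; they decide what code to run based on their goals. Goal-directed systems tend to pursue the same useful intermediate steps, such as acquiring resources and permissions, whatever their final objective. AI safety researchers call this “instrumental convergence.” In practice, it means agents will seek whatever access helps them complete their assigned tasks, sometimes bypassing intended security boundaries. And unlike human attackers, they can do this with inhuman patience and speed.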

Practical Defenses Against Agentic Threats

If we can’t rely on perfect isolation, what can we do? Four approaches work across all layers of the spectrum:

1. Hardening: Shrink Before They Break

Remove attack surface preemptively. Less code means fewer bugs. This means:

- Minimizing kernel features, libraries, and running services

- Using memory-safe programming languages where practical

- Configuring strict capability limits and seccomp profiles

- Using read-only filesystems wherever possible
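Several of these items map directly onto `docker run` flags. The sketch below only builds the argument list and does not call Docker. `hardened_docker_run` is a hypothetical helper. The specific values (UID 65534, a 64 MB `/tmp`, 128 PIDs) are illustrative assumptions to tune per workload. Note that a custom seccomp profile passed this way replaces Docker's default profile rather than adding to it.

```python
import shlex
from typing import Optional


def hardened_docker_run(image: str, command: list[str], *,
                        seccomp_profile: Optional[str] = None,
                        user: str = "65534:65534") -> list[str]:
    """Build a `docker run` argv applying the hardening steps above.

    Values below are illustrative; tune them per workload.
    """
    args = [
        "docker", "run", "--rm",
        "--read-only",                                # read-only root filesystem
        "--tmpfs", "/tmp:rw,noexec,nosuid,size=64m",  # small scratch dir; no executables
        "--cap-drop", "ALL",                          # start with zero Linux capabilities
        "--security-opt", "no-new-privileges",        # block setuid/file-cap escalation
        "--user", user,                               # non-root (65534 is commonly "nobody")
        "--network", "none",                          # no network unless explicitly granted
        "--pids-limit", "128",                        # cap process count (fork bombs)
    ]
    if seccomp_profile is not None:
        # Replaces Docker's default seccomp profile with the given JSON file.
        args += ["--security-opt", f"seccomp={seccomp_profile}"]
    return args + [image, *command]


if __name__ == "__main__":
    argv = hardened_docker_run("python:3.12-slim", ["python", "-c", "print('hi')"])
    print(shlex.join(argv))
```

Building the argv as a list rather than a shell string avoids quoting bugs. `shlex.join` is used only to display it.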

2. Patching: Speed Beats Perfection

The window from disclosure to exploitation keeps shrinking:

- Automate testing and deployment for security updates

- Maintain an accurate inventory of all components and versions

- Rehearse emergency patching procedures before you need them

- During incidents, patch isolation boundaries first
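An accurate inventory makes the patch decision mechanical. Below is a minimal sketch that assumes purely numeric dotted version strings and a hypothetical feed of minimum patched versions. Real package managers use richer comparison rules (epochs, pre-release tags). `parse_version` and `patch_queue` are hypothetical names. The sketch also encodes the last point by sorting isolation-boundary components to the front of the queue.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """'1.10.2' -> (1, 10, 2). Assumes numeric dotted versions only."""
    return tuple(int(part) for part in version.split("."))


def patch_queue(inventory: dict[str, str],
                minimum_patched: dict[str, str],
                isolation_components: set[str]) -> list[str]:
    """Components running below their minimum patched version.

    Isolation boundaries (kernel, hypervisor, container runtime) come first,
    then everything else; ties are broken alphabetically.
    """
    stale = [
        name for name, installed in inventory.items()
        if name in minimum_patched
        and parse_version(installed) < parse_version(minimum_patched[name])
    ]
    # False sorts before True, so isolation components lead the queue.
    return sorted(stale, key=lambda name: (name not in isolation_components, name))


if __name__ == "__main__":
    # Hypothetical inventory and advisory data, for illustration only.
    inventory = {"container-runtime": "1.1.11", "dashboard": "2.9.0"}
    minimum = {"container-runtime": "1.1.12", "dashboard": "2.10.0"}
    print(patch_queue(inventory, minimum, {"container-runtime"}))
```

Parsing into integer tuples matters. As plain strings, `"2.9.0" < "2.10.0"` is false, so a string comparison would silently skip the `dashboard` update.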

3. Instrumentation: Watch the Paths to Power

Monitor for boundary-testing behavior:

- Log access attempts to privileged interfaces like Docker sockets

- Alert on unexpected capability or permission changes

- Track unusual traffic to management APIs or hypervisors

- Set tripwires around the crown jewels – your data stores and credentials
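A tripwire can be a plain function over whatever event stream you already collect. The sketch below assumes a hypothetical normalized `Event` record; in practice you might derive one from auditd or an eBPF-based tool. `tripwires` is likewise a hypothetical name. The socket paths are common defaults, and 2375/2376 are Docker's conventional daemon ports. Check both against your own hosts.

```python
from dataclasses import dataclass

# Common default paths for container-engine control sockets; adjust per host.
PRIVILEGED_SOCKETS = {
    "/var/run/docker.sock",
    "/run/docker.sock",
    "/run/containerd/containerd.sock",
}
# Conventional Docker daemon TCP ports: 2375 (plaintext) and 2376 (TLS).
DOCKER_API_PORTS = {2375, 2376}


@dataclass(frozen=True)
class Event:
    """Hypothetical normalized event, e.g. produced from audit or eBPF data."""
    actor: str
    kind: str    # "open", "connect", or "capset" in this schema
    target: str  # file path, "host:port", or capability name


def tripwires(event: Event, crown_jewels: tuple[str, ...] = ()) -> list[str]:
    """Return alert messages for boundary-testing behavior; empty if benign."""
    alerts = []
    if event.kind == "open":
        if event.target in PRIVILEGED_SOCKETS:
            alerts.append(f"{event.actor} opened control socket {event.target}")
        if any(event.target.startswith(prefix) for prefix in crown_jewels):
            alerts.append(f"{event.actor} touched crown jewel {event.target}")
    elif event.kind == "connect":
        _, _, port = event.target.rpartition(":")
        if port.isdigit() and int(port) in DOCKER_API_PORTS:
            alerts.append(f"{event.actor} connected to Docker API at {event.target}")
    elif event.kind == "capset":
        # Any capability change by an agent is unexpected enough to alert on.
        alerts.append(f"{event.actor} changed capabilities: {event.target}")
    return alerts
```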

4. Layering: No Single Point of Failure

Defense in depth remains your best strategy:

- Combine namespace isolation with system call filtering

- Segment networks to contain lateral movement

- Use hardware security modules and secure elements to protect critical keys
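System call filtering layers on top of namespaces. Seccomp narrows which kernel entry points a namespaced process can reach at all. A practical pattern is to start from your runtime's default profile and remove the calls your workload doesn't need. The sketch below assumes Docker's JSON seccomp profile format: a `defaultAction`, plus `syscalls` rules with `names` and `action`. `tighten_profile` is a hypothetical helper, and the deny list in the usage note is illustrative.

```python
import copy

# Removing names from allow rules only matters if unlisted calls are refused.
DENY_BY_DEFAULT = {"SCMP_ACT_ERRNO", "SCMP_ACT_KILL", "SCMP_ACT_KILL_PROCESS", "SCMP_ACT_TRAP"}


def tighten_profile(profile: dict, remove: set[str]) -> dict:
    """Return a copy of a Docker-style seccomp profile with `remove` dropped
    from every allow rule. The input profile is left unchanged."""
    if profile.get("defaultAction") not in DENY_BY_DEFAULT:
        raise ValueError("profile must deny by default for removals to take effect")
    tightened = copy.deepcopy(profile)
    rules = []
    for rule in tightened.get("syscalls", []):
        if rule.get("action") == "SCMP_ACT_ALLOW":
            rule["names"] = [n for n in rule.get("names", []) if n not in remove]
            if not rule["names"]:
                continue  # rule no longer allows anything; drop it
        rules.append(rule)
    tightened["syscalls"] = rules
    return tightened
```

To use it, load a copy of Docker's default profile with `json.load` and pass it through `tighten_profile`. For example, you might remove cross-process tracing and memory access such as `ptrace`, `process_vm_readv`, and `process_vm_writev`. Write the result to a file and point `docker run --security-opt seccomp=<file>` at it. The namespaces still provide isolation; the filter is a second, independent layer.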

The New Threat Model: Machine Speed, Machine Patience

Securing environments running agentic systems demands acknowledging two fundamental shifts: attacks now operate at machine speed, and they exhibit machine patience.

Unlike human attackers, who tire and make errors, AI-driven systems can methodically probe defenses for extended periods. They can remain dormant until a specific trigger (a configuration change, a system update, a user action) exposes a vulnerability chain. This programmatic patience means we defend not just against active intrusions, but against latent exploits awaiting activation.

Even more concerning is the operational velocity. An exploit chain that might take a skilled human hours or days to work through can be executed by an agentic system in a fraction of that time. This isn’t necessarily superior intelligence; it’s the advantage of operating at computational timescales, cycling through decision loops thousands of times faster than human defenders can react.

This potent combination requires a fundamentally different defensive posture:

- Default to Zero Trust: Grant only essential privileges. Assume the agent will attempt to use every permission granted, driven by its goal-seeking nature.

- Impose Strict Resource Limits: Cap CPU, memory, storage, network usage, and execution time. Resource exhaustion attempts can signal objective-driven behavior diverging from intended use, and time limits cut off unusually persistent processes.

- Validate All Outputs: Agents might emit injected commands or terminal escape sequences while trying to fulfill their tasks. Validation must be automated to keep pace with machine speed.

- Monitor for Goal-Seeking Anomalies: Watch for unexpected API calls, file access patterns, or low-and-slow reconnaissance that suggest behavior beyond the assigned task.

- Regularly Reset Agent Environments: Frequently restore agentic systems to a known-good state to disrupt persistence and negate the advantage of machine patience.
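Two of these items, resource limits and output validation, can be sketched together for a single agent tool call. This assumes a Linux host and Python's standard `resource` and `subprocess` modules. `run_limited` and `sanitize_output` are hypothetical helpers, and the limit values are placeholders to tune. The regular expressions cover common ANSI/VT escape sequences, not every terminal's vocabulary.

```python
import re
import resource
import subprocess

# CSI sequences (colors, cursor moves), OSC sequences (e.g. window titles),
# and other two-byte ESC sequences.
_ESCAPES = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]|\x1b\][^\x07\x1b]*(?:\x07|\x1b\\)|\x1b[@-_]")
# Remaining C0 control characters except tab and newline, plus DEL.
_CONTROLS = re.compile(r"[\x00-\x08\x0b-\x1f\x7f]")


def sanitize_output(text: str) -> str:
    """Strip terminal escape sequences and control characters from agent output."""
    return _CONTROLS.sub("", _ESCAPES.sub("", text))


def _cap(limit: int, value: int) -> None:
    """Set soft and hard limits to `value`, never above the current hard limit."""
    _, hard = resource.getrlimit(limit)
    if hard != resource.RLIM_INFINITY:
        value = min(value, hard)
    resource.setrlimit(limit, (value, value))


def run_limited(cmd: list[str], *, cpu_seconds: int = 5,
                memory_bytes: int = 512 * 1024**2, max_open_files: int = 64,
                max_file_bytes: int = 10 * 1024**2,
                wall_seconds: float = 10.0) -> tuple[int, str]:
    """Run an agent-issued command under hard resource caps (placeholder values).

    Returns (exit code, sanitized stdout). Raises subprocess.TimeoutExpired
    if the wall-clock limit is hit; the child is killed first.
    """
    def apply_limits() -> None:  # runs in the child, before exec
        _cap(resource.RLIMIT_CPU, cpu_seconds)
        _cap(resource.RLIMIT_AS, memory_bytes)
        _cap(resource.RLIMIT_NOFILE, max_open_files)
        _cap(resource.RLIMIT_FSIZE, max_file_bytes)

    completed = subprocess.run(
        cmd,
        preexec_fn=apply_limits,
        capture_output=True,
        text=True,
        timeout=wall_seconds,
        env={"PATH": "/usr/bin:/bin"},  # don't pass the parent's secrets along
    )
    return completed.returncode, sanitize_output(completed.stdout)
```

The CPU and wall-clock limits are separate on purpose. A patient agent can sit idle, using no CPU, far longer than it could spin, so it is the wall-clock timeout that bounds machine patience. Resource limits only cap consumption. A container or VM boundary should still wrap the call.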

The Evolution of Our Security Stance

The most effective security stance combines traditional isolation techniques with a new understanding: we’re no longer just protecting against occasional human-driven attacks, but against persistent machine-speed threats that operate on fundamentally different timescales than our defense systems.

This reality is particularly concerning when we recognize that most security tooling today operates on human timescales – alerts that wait for analyst review, patches applied during maintenance windows, threat hunting conducted during business hours. The gap between attack speed and defense speed creates a fundamental asymmetry that favors attackers.

We need defense systems that operate at the same computational timescale as the threats. This means automated response systems capable of detecting and containing potential breaches without waiting for human intervention. It means predictive rather than reactive patching schedules. It means continuously verified environments rather than periodically checked ones.
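Automated containment can start as a simple rate check that acts without a human in the loop. The sketch below assumes a stream of already-flagged suspicious events per agent, such as tripwire hits, with non-decreasing timestamps. `AutoContainment` and its `contain` callback are hypothetical. The check uses two windows: a short one for machine-speed bursts and a long one for low-and-slow probing, since machine patience defeats burst detection alone.

```python
from collections import defaultdict, deque
from typing import Callable


class AutoContainment:
    """Call `contain(agent_id)` once when suspicious events exceed either a
    short burst threshold or a long low-and-slow threshold."""

    def __init__(self, contain: Callable[[str], None], *,
                 burst_limit: int = 5, burst_window: float = 1.0,
                 slow_limit: int = 50, slow_window: float = 3600.0) -> None:
        self._contain = contain  # hypothetical hook, e.g. pause container
        self._burst_limit, self._burst_window = burst_limit, burst_window
        self._slow_limit, self._slow_window = slow_limit, slow_window
        self._events: dict[str, deque[float]] = defaultdict(deque)
        self._contained: set[str] = set()

    def observe(self, agent_id: str, timestamp: float) -> bool:
        """Record one suspicious event; return True if the agent is contained."""
        if agent_id in self._contained:
            return True
        events = self._events[agent_id]
        events.append(timestamp)
        # Forget events older than the long window (the newest always stays).
        while timestamp - events[0] > self._slow_window:
            events.popleft()
        burst = sum(1 for t in events if timestamp - t <= self._burst_window)
        if burst >= self._burst_limit or len(events) >= self._slow_limit:
            self._contained.add(agent_id)
            self._contain(agent_id)  # fires once per agent, no human wait
            return True
        return False
```

The `contain` callback might wrap something like `docker pause`, which freezes a container's processes while preserving their state for forensics. A scheduled reset can then return the agent to a known-good state.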

By building systems that anticipate these behaviors – hardening before deployment, patching continuously, watching constantly, and layering defenses – we can harness the power of agentic systems while keeping their occasional creative interpretations from becoming security incidents.

Remember, adding another namespace layer is like adding another lock to a door with a broken frame. It might make you feel better, but it doesn’t address the structural vulnerability. True security comes from understanding both the technical boundaries and the behavior of what’s running inside them – and building response systems that can keep pace with machine-speed threats.