In 1995, SSL was introduced, and it took 21 years for 40% of web traffic to become encrypted. This rate changed dramatically in 2016 with Let’s Encrypt and the adoption of ACME, leading to an exponential increase in TLS usage. In the next 8 years, adoption nearly reached 100% of web traffic. Two main factors contributed to this shift: first, a heightened awareness of security risks due to high-profile data breaches and government surveillance, creating a demand for better security. Second, ACME made obtaining and maintaining TLS certificates much easier.

Similarly, around 2020, the SolarWinds incident highlighted the issue of software supply chain security. This, among other factors, led to an increase in the adoption of code signing technologies, an approach that has been in use at least since 1995 when Microsoft used this approach to help deal with the problem of authenticity as we shifted away from CDs and floppy disks to network-based distributions of software. However, the complexity and cost of using code signing severely limited its widespread use, and where it was used, thanks to poor tooling, key compromises often led to a failure for most deployments to achieve the promised security properties. Decades later, projects like Binary Transparency started popping up and, thanks to the SolarWinds incident, projects that spun out of that like Go ChecksumDB, SigStore, and SigSum projects led to more usage of code signing.

Though the EU’s digital signature laws in 1999 specified a strong preference for cryptographic-based document signing technologies, their adoption was very limited, in part due to the difficulty of using the associated solutions. In the US, the lack of a mandate for cryptographic signatures also resulted in an even more limited adoption of this more secure approach to signing documents and instead relied on font-based signatures. However, during the COVID-19 pandemic, things started changing; in particular, most states adopted remote online notary laws, mandating the use of cryptographic signatures which quickly accelerated the adoption of this capability.

The next shift in this story started around 2022 when generative AI began to take off like no other technology in my lifetime. This resulted in a rush to create tools to detect this generated content but, as I mentioned in previous posts [1,2], this is at best an arms race and more practically intractable on a moderate to long-term timeline.

So, where does this take us? If we take a step back, what we see is that societally we are now seeing an increased awareness of the need to authenticate digital artifacts’ integrity and origin, just like we saw with the need for encryption a decade ago. In part, this is why we already see content authentication initiatives and discussions, geared for different artifact types like documents, pictures, videos, code, web applications, and others. What is not talked about much is that each of these use cases often involves solving the same core problems, such as:

- Verifying entitlement to acquire the keys and credentials to be used to prove integrity and origin.

- Managing the logical and physical security of the keys and associated credentials.

- Managing the lifecycle of the keys and credentials.

- Enabling the sharing of credentials and keys across the teams that are responsible for the objects in question.

- Making the usage of these keys and credentials usable by machines and integrating naturally into existing workflows.

This problem domain is particularly timely in that the rapid growth of generative AI has raised the question for the common technology user — How can I tell if this is real or not? The answer, unfortunately, will not be in detecting the fakes, because of generative AIs ability to create content that is indistinguishable from human-generated work, rather, it will become evident that organizations will need to adopt practices, across all modalities of content, to not only sign these objects but also make verifying them easy so these questions can be answered by everyday users.

This is likely to be accelerated once the ongoing shifts take place in the context of software and service liability for meeting security basics. All of this seems to suggest we will see broader adoption of these content authentication techniques over the next decade if the right tools and services are developed to make adoption, usage, and management easy.

While no crystal ball can tell us for sure what the progression will look like, it seems not only plausible but necessary in this increasingly digital world where the lines between real and synthetic content continue to blur that this will be the case.

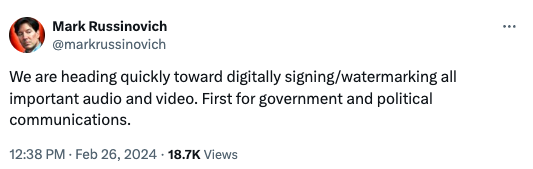

Update: Just saw this while checking out my feed on X and it seems quite timely 🙂