One of my favorite quotes about cryptography comes from Bruce Schneier:

“If you think cryptography can solve your problem, then you don’t understand your problem and you don’t understand cryptography.”

The point he is getting at is that introducing cryptography often carries its own baggage, which can itself be a problem to manage. One of the larger issues you are exposed to is key management.

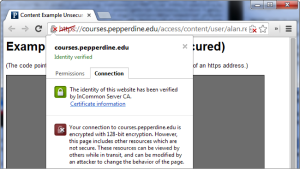

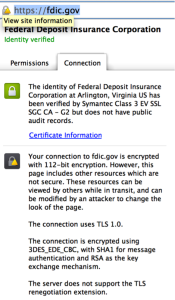

Many of the key management practices we use today were designed around the concept of offline keys. Exchanging keys securely is hard, and it’s human nature to avoid hard things, so we (either explicitly or implicitly) choose to do it infrequently. Take TLS private keys, for example: the single most prominent “upgrade” on most CA websites is a longer-lived certificate (as much as 3 years per certificate).

People just don’t want the hassle of getting a new certificate and renewing it. The lifetimes of these certificates are well within the current guidance for crypto effectiveness, but when choosing a cryptoperiod there are factors to consider beyond how strong the cryptography is.

The reality is that crypto itself is seldom the direct attack vector; it is application logic, coding defects, and operational practices that prove to be the source of most vulnerabilities.

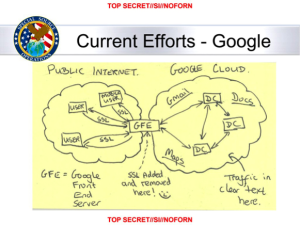

For this reason, how a key is protected is surely the most important factor. If “anyone” can access a key, encrypting or signing data with that key is nothing more than security theater. Remember, too, that for a key to be used today it must be accessible to application logic, which means the key is exposed to the risks of the full software and hardware stack that runs and supports that service. As a result, a key on a system that is exposed to the internet should be changed more frequently than one that sits in a locked box at a bank.

The key doesn’t have to be exposed in its raw form, either. If malware can turn the software that has access to the key into a signing oracle, it doesn’t need raw access to the key. This is what happened to DigiNotar, the Dutch CA that was compromised: the attacker got into the system that had access to the HSM containing the CA keys and was able to sign virtually anything they wanted.
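
To make the signing-oracle idea concrete, here is a minimal sketch in Python. It uses an HMAC from the standard library as a stand-in for a CA signature, and `SigningService` and the example names are hypothetical; a real CA would use asymmetric keys inside an HSM, but the failure mode is the same.

```python
import hashlib
import hmac
import secrets


class SigningService:
    """Hypothetical service that holds a key and signs on request."""

    def __init__(self) -> None:
        # The key never leaves this object, much like a key held in an HSM.
        self._key = secrets.token_bytes(32)

    def sign(self, message: bytes) -> bytes:
        # Anyone who can call this method gets a valid signature.
        return hmac.new(self._key, message, hashlib.sha256).digest()

    def verify(self, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison against a freshly computed tag.
        return hmac.compare_digest(self.sign(message), tag)


service = SigningService()

# An attacker who controls the calling software never sees the key,
# but can still obtain a signature over data of their choosing.
forged = service.sign(b"CN=attacker.example")
accepted = service.verify(b"CN=attacker.example", forged)
```

Protecting the raw key material is necessary but not sufficient; access to the signing operation is what has to be controlled.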

So what do we do about this? Of course, one needs to build systems using a process that incorporates security into all aspects of product development and operations, but above and beyond that you really should change your keys as often as possible.

Fundamentally, the longer a key is trusted, the more valuable it is to an attacker and the more opportunity an attacker has had to compromise it.

It is this paradigm that necessitates the existence of revocation protocols like OCSP in X.509. The CA/Browser Forum allows these revocation messages to be good for up to a week. This is important to understand because it limits how effectively a CA can revoke a certificate once a compromise is identified: a client holding a still-valid “good” response may keep trusting the certificate until that response expires. If the CA instead issued certificates that were good for no longer than a week, there would in essence be no need for revocation checking at all.
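
The arithmetic behind that claim can be sketched in a few lines of Python. The function names and the sample lifetimes below are my own illustrative assumptions, and the model is deliberately simple: a relying party stops trusting a compromised certificate when either the certificate expires or its cached revocation response expires, whichever comes first.

```python
from datetime import timedelta


def worst_case_exposure(cert_lifetime: timedelta,
                        revocation_validity: timedelta) -> timedelta:
    """Longest time a compromised certificate can stay trusted.

    Worst case: the compromise happens right after issuance and right
    after a client cached a fresh "good" revocation response.
    """
    return min(cert_lifetime, revocation_validity)


def revocation_adds_value(cert_lifetime: timedelta,
                          revocation_validity: timedelta) -> bool:
    """Revocation only shortens exposure if it can act before expiry."""
    return cert_lifetime > revocation_validity


week = timedelta(days=7)
three_years = timedelta(days=3 * 365)

# A three-year certificate is still only as revocable as the
# one-week revocation response allows.
long_lived = worst_case_exposure(three_years, week)

# A one-week certificate gains nothing from a one-week revocation window.
short_lived_needs_revocation = revocation_adds_value(week, week)
```

Under these assumptions the exposure for the three-year certificate is bounded by the revocation window, and for the one-week certificate revocation checking changes nothing.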

If you can issue certificates that are good for a week and change them reliably each week, why not go shorter? What about certificates and keys that are trusted for only a few hours or minutes? Surely this would be better: it significantly reduces the value of the key to an attacker and increases the amount of trust one can place in that certificate.
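
As a rough illustration of what “trusted for only a few hours” looks like in code, here is a minimal Python sketch of a key that replaces itself once its lifetime is up. `RotatingKey` and its parameters are hypothetical, and the sketch covers only the rotation timing; distributing the new key or getting a new certificate issued, which is the genuinely hard part, is out of scope.

```python
import secrets
import time
from typing import Callable


class RotatingKey:
    """Hypothetical holder for a symmetric key with a fixed lifetime."""

    def __init__(self, lifetime_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._lifetime = lifetime_seconds
        self._clock = clock  # injectable so the behavior can be tested
        self._rotate()

    def _rotate(self) -> None:
        # Generate fresh key material and record when it was created.
        self._key = secrets.token_bytes(32)
        self._created = self._clock()

    def current(self) -> bytes:
        # Replace the key as soon as it has outlived its cryptoperiod.
        if self._clock() - self._created >= self._lifetime:
            self._rotate()
        return self._key


# Usage with a fake clock: a one-hour key is reused within the hour
# and replaced once the hour has passed.
now = [0.0]
key = RotatingKey(lifetime_seconds=3600, clock=lambda: now[0])
first = key.current()
now[0] = 1800.0
same = key.current()
now[0] = 3600.0
second = key.current()
```

A stolen copy of `first` is worthless to anyone who verifies against the current key once the hour is up, which is the whole point of a short cryptoperiod.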

The same holds true for certificates that are stored on smartcards and Hardware Security Modules: the more recently the key was created and the crypto operator authenticated, the more trustworthy the key is.

If that’s the case, why do we still manage keys as if they were on hardened offline systems? The answer is simple: key management is hard. What’s important to understand is that while it is hard, it is doable; we just need the will, as an industry, to do something about it.

NOTE: Though I use certificates as the canonical example above, they are just that: examples. The exact same issues exist with all uses of cryptographic keys (encryption keys, bitcoin wallets, authentication keys, etc.).