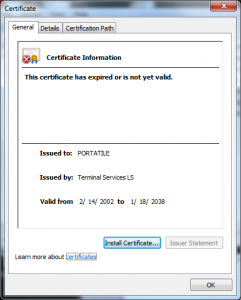

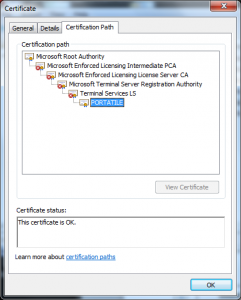

I wanted to write a post on how the Terminal Services Licensing PKI could be used in another attack. Though the variants are endless, I have one concrete example of how it was used in an attack in 2002, in this certificate:

What we see in this certificate is that the attacker took the License Certificate (Terminal Services LS) and issued himself a certificate underneath it. This only worked because of another defect in the Windows chain engine, this one relating to how it handled Basic Constraints (see MS02-050); that was fixed in 2003.
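To make the Basic Constraints point concrete, here is a minimal sketch of a chain check with and without that test. It is a toy model, not the Windows chain engine: signatures are not verified, the `Cert` class and the function names are made up for illustration, the root name is hypothetical, and I am assuming the license certificate was an end-entity certificate not marked as a CA.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    is_ca: bool  # stands in for the Basic Constraints cA flag


def names_chain(chain: List[Cert]) -> bool:
    """Leaf-first chain: every certificate must name the next one as its issuer."""
    return all(child.issuer == parent.subject
               for child, parent in zip(chain, chain[1:]))


def validate_ignoring_basic_constraints(chain: List[Cert]) -> bool:
    # Flawed behavior: any certificate is accepted as an issuer.
    return names_chain(chain)


def validate_with_basic_constraints(chain: List[Cert]) -> bool:
    # Every certificate above the leaf must be marked as a CA.
    return names_chain(chain) and all(c.is_ca for c in chain[1:])


# Hypothetical chain: only "Terminal Services LS" and "PORTATILE" come from the post.
root = Cert("Example Root", "Example Root", is_ca=True)
license_cert = Cert("Terminal Services LS", "Example Root", is_ca=False)
forged = Cert("PORTATILE", "Terminal Services LS", is_ca=False)
chain = [forged, license_cert, root]

print(validate_ignoring_basic_constraints(chain))
print(validate_with_basic_constraints(chain))
```

In this model the flawed validator accepts the forged chain, while the strict one rejects it because the certificate acting as issuer is not a CA.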

But why would he have done this? I see two possible reasons:

- Bypassing the terminal services licensing mechanism.

- Bypassing some client authentication scheme based on X.509.

We won’t explore #1: I am not interested in attacks on Microsoft’s license revenue, but in how the infrastructure designed to protect that revenue was used to attack customers. As such, I will focus on #2.

Now, before I go much further I have to be clear: I am just guessing here. I don’t have enough data to be 100% sure of what this was used for, but it seems pretty likely I am right.

What I see here is that the X.509 serial number is different from the Serial Number RDN the attacker put into the Subject’s DN.

This certificate also has a value in the CN of the Subject DN that looks like it is a user name (PORTATILE).

Neither of these should be necessary to attack the licensing mechanism as legitimate license certificates don’t have these properties or anything that looks roughly like them.

My theory is that the attacker found some Windows Server configured to do client authentication (possibly for LDAPS or HTTPS) and wanted to trick the server into mapping this certificate to the principal represented by that CN and Serial Number in the DN.
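As a rough sketch of what such a mapping might look like, assume the server looks up an account using the CN and Serial Number RDNs from the subject. Everything here is hypothetical: the `parse_dn` and `map_to_principal` helpers, the serial number value `0001`, and the account name are invented for illustration, and the DN parser is deliberately naive.

```python
from typing import Dict, Optional, Tuple


def parse_dn(dn: str) -> Dict[str, str]:
    """Naive DN parser: splits on commas, ignores escaping and multi-valued RDNs."""
    attrs = {}
    for part in dn.split(","):
        key, _, value = part.strip().partition("=")
        attrs[key.upper()] = value
    return attrs


def map_to_principal(subject_dn: str,
                     directory: Dict[Tuple[str, str], str]) -> Optional[str]:
    """Map a certificate subject to an account using only fields from the subject."""
    attrs = parse_dn(subject_dn)
    return directory.get((attrs.get("CN"), attrs.get("SERIALNUMBER")))


# Hypothetical directory entry; the serial number value is made up.
directory = {("PORTATILE", "0001"): "portatile-account"}

print(map_to_principal("CN=PORTATILE, SERIALNUMBER=0001", directory))
```

The point of the sketch is that every input to the mapping is chosen by whoever issued the certificate, so the mapping is only as trustworthy as the chain validation that precedes it.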

Why would this have worked? Well, as I said, there was the Basic Constraints handling defect, but that wouldn’t have been enough; the server would also have had to blindly trust all the root certificates in the root store for “all usages”.

This was a fairly common practice back then: web servers and other server applications would be built on other libraries (for example SSLeay/OpenSSL) and would just import all the roots from the CryptoAPI store and use them with that other library.

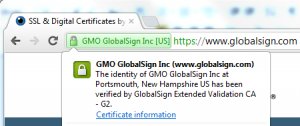

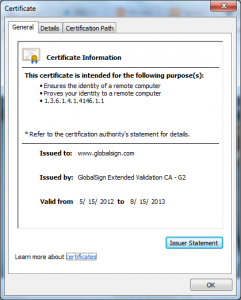

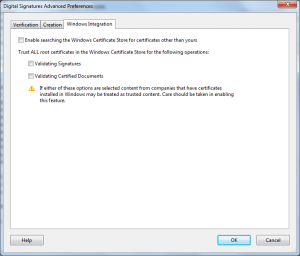

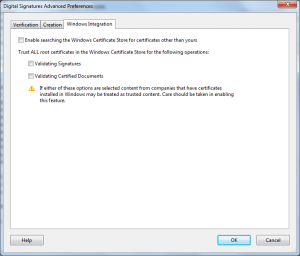

This is actually still done by some applications today, for example Adobe Reader X:

The problem with this behavior is that these applications do not necessarily (read: almost never) import the properties associated with these certificates, nor do they support the same enforcement constraints (for example, nested Extended Key Usages).

The net effect is that when they import the roots, they end up trusting them for more than the underlying Windows subsystem does.
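Here is a small sketch of how that widening of trust happens. It is a simplified model rather than the CryptoAPI store format: `StoreEntry`, `os_trusts`, `naive_import`, and `app_trusts` are hypothetical names, and the usage labels and root names are placeholders I made up.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set

CLIENT_AUTH = "clientAuth"
LICENSING = "licensing"  # placeholder label for a licensing-only usage


@dataclass(frozen=True)
class StoreEntry:
    name: str
    # Usage restriction stored alongside the root; None means "all usages".
    allowed_usages: Optional[FrozenSet[str]]


def os_trusts(entry: StoreEntry, usage: str) -> bool:
    """The platform honors the per-root usage restriction."""
    return entry.allowed_usages is None or usage in entry.allowed_usages


def naive_import(store: List[StoreEntry]) -> Set[str]:
    """An application copies the roots but drops their properties."""
    return {entry.name for entry in store}


def app_trusts(imported: Set[str], root_name: str, usage: str) -> bool:
    # With the properties gone, presence in the set is the only check left.
    return root_name in imported


licensing_root = StoreEntry("Example Licensing Root", frozenset({LICENSING}))
general_root = StoreEntry("Example General Root", None)
store = [licensing_root, general_root]
imported = naive_import(store)

print(os_trusts(licensing_root, CLIENT_AUTH))
print(app_trusts(imported, licensing_root.name, CLIENT_AUTH))
```

In this model the platform refuses the licensing-only root for client authentication, while the application that imported the same root accepts it for any usage.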

The attackers in this case were likely taking advantage of the bad design of this client authentication solution as well as the bad design of the Terminal Services Licensing PKI.

The combination would have allowed the attacker to impersonate the user PORTATILE and perform any actions they liked as that user.

Even if I am wrong in this particular case, this sort of thing was definitely possible.

What lessons should we take away from this?

For one, always do a formal security review of your solutions as you build them as well as before you deploy them; pay special attention to your dependencies, since sometimes they are configuration as well as code.

Also, if an issue as fundamental as the Terminal Services Licensing PKI can fly under the radar of a world-class security program like Microsoft’s, you probably have some sleepers in your own infrastructure too. Go take another look at the assumptions of the past before they bite you too.

Ryan