Today the de facto standard purchasing criterion for a cryptographic component is a US federal standard called FIPS 140-2. It defines a set of assurance levels the US Federal Government uses to ensure that government agencies purchase cryptographic products that are interoperable and address threat-specific risks; Europe has a similar set of guidelines called Common Criteria.

These standards were adopted by the security industry because, in the beginning, the only purchasers of its products were government agencies. If you did not design your product to meet these requirements, it wouldn’t even be considered by your only customer segment.

As the security industry began selling outside of government agencies, it started with the Fortune 50 because they were the only ones who understood the risks their businesses were exposed to. This was a time when information security was in essence a new discipline, and the only tried-and-true examples these organizations had to learn from came from the government and military. For this reason the solutions that were sold and deployed were watered-down versions of what vendors sold to governments.

As awareness of security risks spread to the rest of the corporate world, these same foundational standards continued to be used, in many respects without question. In fact, I am always surprised by how many customers I encounter who have mandated that a product support a specific FIPS assurance level yet have no understanding of what protection each level provides.

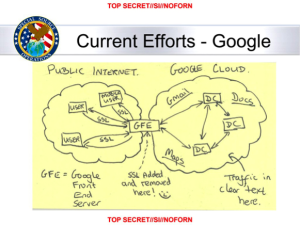

With the Snowden revelations, people are now starting to question these long-standing assumptions. Should we be using cryptography that is specified by governments at all? Is our adoption of government-approved cryptography making us more secure, or is it exposing us to new risks?

The real questions we must be asking ourselves are:

- What is the actual (vs perceived) threat model?

- Where are the assets that are valuable to the attacker in my system?

- Are we applying security technology and approaches in a balanced way relative to the risks?

- What are the consequences of each of the design decisions we are making?

Our reliance on blanket adoption of standards like FIPS 140-2 is in many respects a way to make ourselves feel better about not spending the time to answer the first two questions, and the last two questions represent areas where most organizations fall down.

First, let me temper what I am about to say: I still believe FIPS 140-2 and Common Criteria have value, and they are good solutions for what they were designed for, but in many cases they are a round peg in a square hole.

Let’s start by understanding the claims and the value of each:

Third-party evaluated – An organization deemed knowledgeable and capable by the government has reviewed the design relative to the stated requirements and found no unresolved issues.

Approved Algorithms – Supports a set of algorithms that the government has decided are necessary for interoperability. The selection of these algorithms by the government is plausibly a result of a rigorous process that determined they are sufficiently secure for its needs. Ex: RSA, ECC with secp256r1, SHA-2, etc.

Uses Approved Algorithms and Methods to Protect Keys – Uses a set of algorithms and approaches the government has decided are sufficient to keep keys of the types specified in approved algorithms secure. Ex: Use crypto and methods at least as strong as the keys being protected.

Production-Grade Components – An attempt to specify a qualitative set of requirements that are intended to ensure there is adequate quality in the solution to be used in production.

Tamper Evidence – Implements mechanisms such as seals and manufacturing techniques that make it visibly obvious that the device has been physically compromised.

Protects Once Compromised – Implements mechanisms that make it difficult to extract the keys from the device once it is physically compromised.

Tamper resistant – Implements mechanisms to destroy the protected keys when a compromise is attempted.

The following table shows you how these traits map across the various FIPS 140-2 assurance levels:

| | Third-party evaluated | Approved Algorithms | Uses Approved Algorithms and Methods to Protect Keys | Production-Grade Components | Tamper Evidence | Protects Once Compromised | Tamper resistant |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Level 1 | x | x | x | x | | | |
| Level 2 | x | x | x | x | x | | |
| Level 3 | x | x | x | x | x | x | |
| Level 4 | x | x | x | x | x | x | x |
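To make the mapping easier to reason about when writing a requirement, here is a minimal Python sketch that encodes the table above and returns the lowest level covering a set of required traits. The trait identifiers and the function names (`traits_for_level`, `minimum_level`) are made up for illustration, and the mapping is this article's summary of the levels, not an official NIST artifact.

```python
# Trait identifiers are hypothetical names for the columns of the table above,
# listed in the same left-to-right order.
TRAITS = [
    "third_party_evaluated",
    "approved_algorithms",
    "approved_key_protection",
    "production_grade_components",
    "tamper_evidence",
    "protects_once_compromised",
    "tamper_resistant",
]

# In the table, each level provides the first N traits in TRAITS.
LEVEL_TRAIT_COUNT = {1: 4, 2: 5, 3: 6, 4: 7}


def traits_for_level(level):
    """Return the set of traits the table assigns to a given level."""
    return set(TRAITS[:LEVEL_TRAIT_COUNT[level]])


def minimum_level(required):
    """Return the lowest level whose traits cover every required trait."""
    required = set(required)
    unknown = required - set(TRAITS)
    if unknown:
        raise ValueError(f"unknown traits: {sorted(unknown)}")
    for level in sorted(LEVEL_TRAIT_COUNT):
        if required <= traits_for_level(level):
            return level
    # Not reachable with the table above, because Level 4 includes every trait.
    raise ValueError("no level covers the required traits")


if __name__ == "__main__":
    print(minimum_level({"approved_algorithms", "tamper_evidence"}))
```

Starting from the traits you actually need, rather than from a level number, is the point: it forces the threat-model questions to be answered before a level is mandated.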

Now, each of these traits is desirable, but each may also have consequences. For example:

Third-party evaluated – These audits take up to a year to prepare for and complete. Due to the specialized nature of the work and the near-monopoly the approved testers have, the tests are incredibly expensive. Additionally, these testing agencies perform their tasks based on guidelines published by governments, which are very slow to adapt and change and are focused on their own immediate needs, which restricts innovation.

This all becomes very complicated when you need to respond to security issues in short periods of time, and many have come to the conclusion that the bureaucracy associated with completing these audits reduces security.

Approved Algorithms – While I am pleased that NIST runs crypto competitions, in some cases competitions are not used, and in others their choices may not be right for you. Additionally, there are questions about some of their decisions and what they mean for the security of the algorithms they approve.

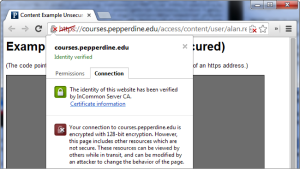

In other cases the requirements may actually hamper adoption of your solution, and while the product may be “more secure” it will not be usable in many cases. A great example is that a software-only solution can only be evaluated to FIPS 140-2 Level 1, so if you specify anything higher you may significantly reduce the usability and applicability of your solution.

The important thing to remember is that there are many ways to mitigate a risk, and if we are not careful to take a step back and look at the problem and goals as a whole we might, as they say, miss the forest for the trees.

For example, if we come to the conclusion that we require the use of a FIPS 140-2 Level 4 device, we preclude the un-augmented use of every Windows or ChromeOS computer that has a TPM, when arguably supporting those machines would expose the product to hundreds of millions more users. Is the increased security of that choice worth it?

Also, if we look at the Tamper Evidence, Protects Once Compromised and Tamper resistant goals, we can mitigate these risks significantly if we simply generate new keys every 15 minutes. By doing this we shrink the period in which a compromised key is useful to a very small window, and we reduce the value of the key to the attacker.
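As a rough illustration of the idea, the sketch below keeps a symmetric key in memory and replaces it once it is older than 15 minutes. The `RotatingKey` class, its `current` method, and the 32-byte key size are assumptions made for this example; a real deployment would also need to distribute keys, handle data protected under the previous key, and store keys securely, none of which is shown here.

```python
import secrets
import time

# Rotation interval from the text above: 15 minutes.
ROTATION_INTERVAL_SECONDS = 15 * 60


class RotatingKey:
    """Hypothetical helper that hands out a key no older than the interval."""

    def __init__(self, interval=ROTATION_INTERVAL_SECONDS, clock=time.monotonic):
        self._interval = interval
        self._clock = clock  # injectable so rotation can be exercised in tests
        self._key = None
        self._created_at = None

    def current(self):
        """Return the current key, generating a fresh one if it has expired."""
        now = self._clock()
        expired = self._key is None or now - self._created_at >= self._interval
        if expired:
            # 32 random bytes from the OS CSPRNG; the size is an assumption.
            self._key = secrets.token_bytes(32)
            self._created_at = now
        return self._key


if __name__ == "__main__":
    keys = RotatingKey()
    first = keys.current()
    # Within the same 15-minute window the same key is returned.
    print(first == keys.current())
```

With this shape, an attacker who extracts a key gains at most one interval's worth of use from it, which is the trade the paragraph above describes.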

It’s this last approach I think we as an industry should apply more now; we no longer live in a world of disconnected systems. We are dynamically deploying services using technologies like Docker, Chef, and Puppet, and there is no reason we cannot deploy our keys to systems and users dynamically as well.