This morning I noticed a tweet by Mikko about the Windows Update certificate chain looking odd so I decided to take a look myself.

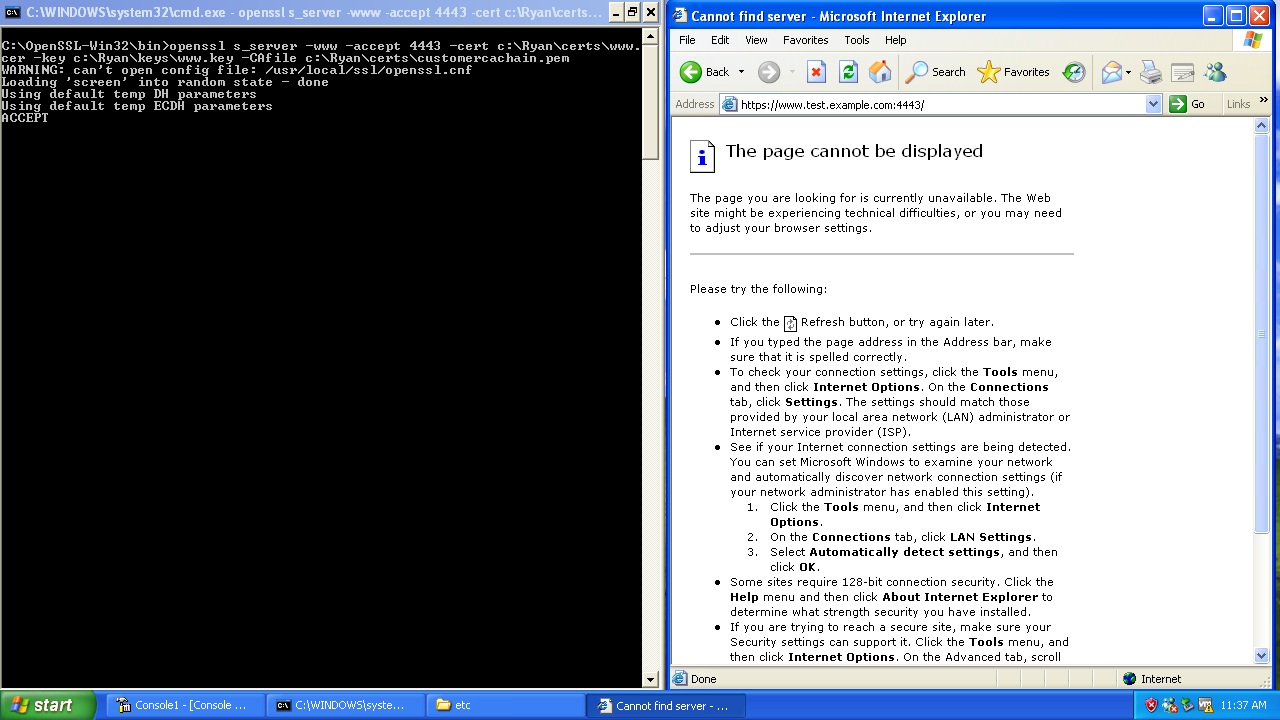

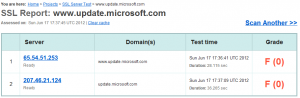

I started with the webserver configuration using SSLLABS, unfortunately it did not fare well:

Looking a little closer we see a few things of interest:

- SSLLABS is unable to validate the certificate

- The server is using weak ciphers

- The server is vulnerable to the BEAST attack

- The server is not using an Extended Validation (EV) Certificate

- The server is supporting SSL 2.0

To understand the specifics here we needed to look a little deeper, the OpenSSL s_client is a great tool for this:

openssl s_client –showcerts -status –connect www.update.microsoft.com:443

Loading ‘screen’ into random state – done

CONNECTED(0000017C)

OCSP response: no response sent

depth=1 C = US, ST = Washington, L = Redmond, O = Microsoft Corporation, CN = Microsoft Update Secure Server CA 1

verify error:num=20:unable to get local issuer certificate

verify return:0

—

Certificate chain

0 s:/C=US/ST=Washington/L=Redmond/O=Microsoft/OU=WUPDS/CN=www.update.microsoft.com

i:/C=US/ST=Washington/L=Redmond/O=Microsoft Corporation/CN=Microsoft Update Secure Server CA 1

1 s:/C=US/ST=Washington/L=Redmond/O=Microsoft Corporation/CN=Microsoft Update Secure Server CA 1

i:/DC=com/DC=microsoft/CN=Microsoft Root Certificate Authority

—

Server certificate

—–BEGIN CERTIFICATE—–

MIIF4TCCA8mgAwIBAgITMwAAAAPxs7enAjT5gQAAAAAAAzANBgkqhkiG9w0BAQUF

…

—–END CERTIFICATE—–

1 s:/C=US/ST=Washington/L=Redmond/O=Microsoft Corporation/CN=Microsoft Update S

ecure Server CA 1

i:/DC=com/DC=microsoft/CN=Microsoft Root Certificate Authority

—–BEGIN CERTIFICATE—–

MIIGwDCCBKigAwIBAgITMwAAADTNCXaXRxx1YwAAAAAANDANBgkqhkiG9w0BAQUF

…

—–END CERTIFICATE—–

subject=/C=US/ST=Washington/L=Redmond/O=Microsoft/OU=WUPDS/CN=www.update.microsoft.com issuer=/C=US/ST=Washington/L=Redmond/O=Microsoft Corporation/CN=Microsoft Update

Secure Server CA 1

—

No client certificate CA names sent

—

SSL handshake has read 3403 bytes and written 536 bytes

—

New, TLSv1/SSLv3, Cipher is AES128-SHA

Server public key is 2048 bit

Secure Renegotiation IS supported

Compression: NONE

Expansion: NONE

SSL-Session:

Protocol : TLSv1

Cipher : AES128-SHA

Session-ID: 33240000580DB2DE3D476EDAF84BEF7B357988A66A05249F71F4B7C90AB62986

Session-ID-ctx:

Master-Key: BD56664815654CA31DF75E7D6C35BD43D03186A2BDA4071CE188DF3AA296B1F9674BE721C90109179749AF2D7F1F6EE5

Key-Arg : None

PSK identity: None

PSK identity hint: None

Start Time: 1339954151

Timeout : 300 (sec)

Verify return code: 20 (unable to get local issuer certificate)

—

read:errno=10054

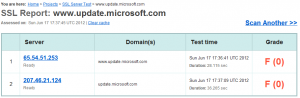

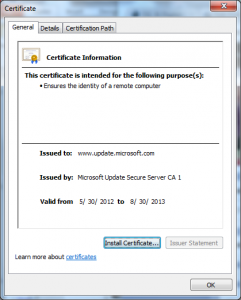

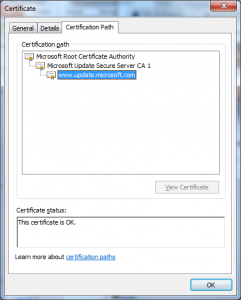

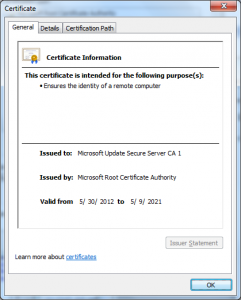

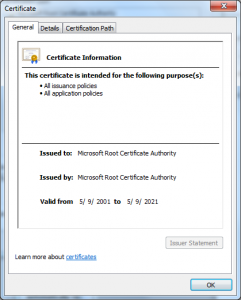

With this detail we can also look at the certificates with the Windows Certificate viewer, we just extract the server certificate Base64 and put it into a text file with a .cer extension and open it with Explorer:

From these we see a few additional things:

- OCSP Stapling is not enabled on the server

- The issuing CA was created on 5/30/2012 at 8:49pm

- The issuing CA was issued by the 2001 SHA1 “Microsoft Root Authority”

So with this extra information let’s tackle each of these observations and see what conclusions we come to.

SSLLABS is unable to validate the certificate; there are two possible reasons:

a. The server isn’t including the intermediate certificates (it is) and SSLLABS doesn’t chase intermediates specified in the AIA:IssuerCert extension (doubt it does) or that extension isn’t present (it is).

b. The Root CA isn’t trusted by SSLLABS (which appears to be the case here).

My guess based on this is that Ivan only included the certificates in the “Third-Party Root Certification Authorities” store and did not include those in the “Trusted Root Certification Authorities” which are required for Windows to work.

Basically he never expected these Roots to be used to authenticate a public website.

[2:00 PM 6/18/2012] Ivan has confirmed he currently only checks the Mozilla trusted roots, therefor this root wouldn’t be trusted by SSLLABS.

Microsoft’s decision to use this roots means that any browser that doesn’t use the CryptoAPI certificate validation functions (Safari, Opera, Chrome on non-Windows platforms, Firefox, etc.) will fail to validate this certificate.

This was probably done to allow them to do pinning using the “Microsoft” policy in CertVerifyCertificateChainPolicy.

I believe this was not the right approach since I think it’s probably legitimate to use another browser to download patches.

[2:00 PM 6/18/2012] The assumption in this statement (and it may turn out I am wrong) is that it is possible for someone to reach a path where from a browser they can download patches; its my understanding this is an experience that XP machines using a different browser have when visiting this URL I — I have not verified this.

[3:00 PM 6/18/2012] Harry says that you have not been able to download from these URLs without IE ever, so this would be a non-issue if that is the case.

To address this Microsoft would need to either:

- Have their PKI operate in accordance with the requirements that other CAs have to meet and be audited and be found to meet the requirements of each of the root programs that are out there.

- Have two separate URLs and certificate chains one for the website anchored under a publicly trusted CA and another under this private “Product” root. The manifests would be downloaded from the “Product” root backed host and the web experience would be from the “Public” root backed host.

- Cross certifying the issuing CA “Microsoft Update Secure Server CA 1” under a public CA also (cross certification), for example under their IT root that is publically trusted and include that intermediate in the web server configuration also. Then have a CertVerifyCertificateChainPolicy implementation that checks for that CA instead of the “Product” roots.

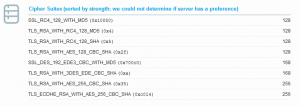

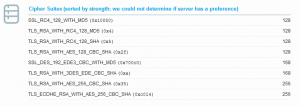

The server is using weak ciphers; the server is using several weak ciphers:

I see no reason to support the MD5 based ciphers as I find it hard to believe that there are any clients that can communicate with this site that do not support their SHA1 equivalents.

[2:00 PM 6/18/2012] I have been told I am too critical by calling these MD5 based ciphers as weak in that they are used as HMAC, it is true that when used with a key as is the case with HMAC the current attacks are not relevant. With that said any client that supports these suites will also support their SHA1 counterpart and there is no reason to support the weaker suites that use MD5.

The server is vulnerable to the BEAST attack; and SSLLABS isn’t able to tell if the server is specifying a cipher suite preference, this means it probably is not.

It is the cipher suite ordering issue that is actually resulting in the warning about the BEAST attack though. It is addressed by putting RC4 cipher suites at the top of the cipher suite order list.

[2:00 PM 6/18/2012] It’s been argued the BEAST attack isn’t relevant here because the client is normally not a browser, these pages that are returned do contain JS and there are cases where users visit it via the browser — otherwise there would not be HTML and JS in them. As such the attacker could use the attack to influence you to install malicious content as if it came from Microsoft. Maybe its not a leakage of personal information initially but its an issue.

It is not using an Extended Validation (EV) Certificate; this is an odd one, is an EV certificates necessary when someone is attesting to their own identity? Technically I would argue no, however no one can reasonably expect a user to go and look at a certificate chain and be knowledgeable enough to that this is what is going on.

The only mechanism to communicate the identity to the user in as clear a way is to make the certificate be an EV certificate.

Microsoft really should re-issue this certificate as an EV certificate – if there was ever a case to be sure who you are talking to it would certainly include when you are installing kernel mode drivers.

The server is supporting SSL 2.0; this also has to be an oversight in the servers configuration of SSL 2.0 has been known to have numerous security issues for some time.

They need to disable this weak version of SSL.

OCSP Stapling is not enabled on the server; OCSP stapling allows a webserver to send its own revocations status along with its certificate improving performance, reliability and privacy for revocation checking. According to Netcraft Windows Update is running on IIS 7 which supports it by default.

This means Microsoft is either not allowing these web servers to make outbound connections or they have explicitly disabled this feature (login.live.com has it enabled and working). While it is not a security issue per-se enabling it certainly is a best practice and since it’s on by default it seems they are intentionally not doing it for some reason.

The issuing CA was created on 5/30/2012 at 8:49pm; this isn’t a security issue but it’s interesting that the issuing CA was created four days before the Flame Security advisory. It was a late night for the folks operating the CA.

That’s it for now,

Ryan