Recently Facebook announced that they will be moving to Always-On-SSL. I for one am thrilled to see this happen – especially given how much personal data can be gleaned from observing a Facebook session.

When they announced this change they mentioned that users may experience a small performance tax due to the addition of SSL. This is unfortunately true, but when a server is well configured that tax should be minimal.

This performance tax is particularly interesting when you put it in the context of revenue, especially when you consider that Amazon found that every 100ms of latency cost them 1% of sales. What if the same holds true for Facebook? Their last quarter revenue was 1.23 billion, so I wanted to take a few minutes and look at their SSL configuration to see what this tax might cost them.

First I started with WebPageTest; this is a great resource for the server administrator to see where time is spent when viewing a web page.

The way this site works is that it downloads the content twice, using real instances of browsers. The first view should always be slower than the second, since the second view gets to take advantage of caching and session re-use.

The Details tab on this site gives us a breakdown of where the time is spent (for the first use experience). There’s lots of good information here, but for this exercise we are interested in only the “SSL Negotiation” time.

Since Facebook requires authentication to see the “full experience”, I just tested the log-on page; it should reasonably reflect the SSL performance “tax” for the whole site.

I ran the test four times, each time summing the total number of milliseconds spent in “SSL Negotiation”. The average of these four runs was 4.111 seconds (4111 milliseconds).
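For readers who want a rough cross-check outside WebPageTest, here is a minimal sketch that times a single TLS handshake using Python's standard library and averages several runs. It is not what WebPageTest does: it covers one connection rather than every connection a page opens, and it does not include any revocation checks a browser would make, so the numbers will be much smaller than the totals above. The function names are my own, and the host you pass in (for example `www.facebook.com`) is an assumption about what to test.

```python
import socket
import ssl
import statistics
import time


def time_tls_handshake(host, port=443, timeout=10.0):
    """Return the duration of one TLS handshake to host, in milliseconds."""
    context = ssl.create_default_context()
    # Open the TCP connection first so that only the handshake is timed.
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        start = time.perf_counter()
        # wrap_socket performs the handshake before returning.
        with context.wrap_socket(raw_sock, server_hostname=host):
            elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def average_ms(samples):
    """Average a list of per-run timings given in milliseconds."""
    return statistics.mean(samples)
```

Calling `time_tls_handshake(host)` four times and passing the results to `average_ms` mirrors the four-run average used here, with the caveats above.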

That’s quite a bit, but can we reduce it? To find out we need to look at their SSL configuration. When we do, we see a few things they could do to improve matters, including:

- Enabling SPDY – SPDY could help with performance on mobile where latency is a real problem.

- Enabling OCSP Stapling – Enabling OCSP stapling would remove one of the certificate status checks clients need to do before downloading the content.

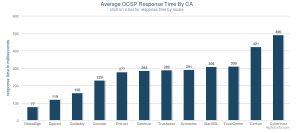

- Switching to a faster CA – For a browser to validate Facebook’s certificate it has to contact the CA that issued it to check if it’s still good. This can introduce delays in the user getting to the site.

Let’s explore this last point more. The status check the browser does is called an OCSP request. For the last 24 hours their current CA had an average world-wide OCSP response time of 287 ms. If they used OCSP Stapling the browser would need to do only one OCSP request, but even with that optimization that request could be up to 7% of the SSL performance tax (287 ms out of 4111 ms).

GlobalSign’s average world-wide OCSP response time for the same period was 68 milliseconds, which in this case could have saved 219 ms. To put that in context, Facebook gets 1.6 billion visits each week. If you assume each visit pays that cost once and do the math (219 * 1.6 billion / 1000 / 60 / 60 / 24), that’s roughly 4,000 days’ worth of time saved every week, or about 211,000 days (some 578 years) every year. Put another way, several lifetimes’ worth of time people would otherwise have spent waiting for Facebook pages to load is saved every year!
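Spelling out the unit conversion step by step makes the estimate easier to check. The sketch below goes from milliseconds to seconds, minutes, hours and then days, using the 219 ms and 1.6 billion weekly visits quoted above. The 52 weeks per year and the idea that every visit pays the OCSP cost exactly once are simplifying assumptions, and the names are mine.

```python
MS_SAVED_PER_VISIT = 219   # 287 ms minus 68 ms, from the OCSP averages above
VISITS_PER_WEEK = 1.6e9    # weekly visits quoted above
WEEKS_PER_YEAR = 52        # assumption used to annualize the weekly figure


def days_saved_per_week(ms_per_visit, visits_per_week):
    """Convert a per-visit saving in milliseconds into total days per week."""
    total_ms = ms_per_visit * visits_per_week
    seconds = total_ms / 1000
    minutes = seconds / 60
    hours = minutes / 60
    return hours / 24


per_week = days_saved_per_week(MS_SAVED_PER_VISIT, VISITS_PER_WEEK)
per_year = per_week * WEEKS_PER_YEAR

print(f"{per_week:,.0f} days saved per week")
print(f"{per_year:,.0f} days saved per year (about {per_year / 365:,.0f} years)")
```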

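If we consider that in the context of the Amazon figure, 219 ms would correspond to roughly 2% of sales. Applied to about 4.9 billion a year (four quarters at 1.23 billion), simply changing their CA could be worth on the order of one hundred million a year.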
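As a back-of-the-envelope sketch of that estimate: it assumes the Amazon figure scales linearly with latency, that it applies to Facebook at all, and that the quoted quarter is representative of a full year. None of these is established here, and the variable names are mine.

```python
QUARTERLY_REVENUE = 1.23e9      # last quarter's revenue quoted above
SALES_LOSS_PER_100_MS = 0.01    # Amazon figure: 1% of sales per 100 ms
MS_SAVED = 219                  # OCSP response time difference from above

# Assumption: four quarters similar to the one quoted.
annual_revenue = QUARTERLY_REVENUE * 4

# Assumption: the revenue effect scales linearly with latency.
fraction_of_sales = (MS_SAVED / 100) * SALES_LOSS_PER_100_MS

estimated_value = annual_revenue * fraction_of_sales

print(f"Share of sales affected: {fraction_of_sales:.2%}")
print(f"Estimated annual value: {estimated_value / 1e6:,.1f} million")
```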

Before you start to pick apart these numbers, let me say this is intended to illustrate how performance can affect revenue and is not a scientific exercise. To save you the trouble, some issues with these assumptions include:

- Facebook’s business is different from Amazon’s, and the impact on their business will be different.

- I took only four samples of the SSL negotiation, and a scientific measurement would need more.

- The performance measurement I used for OCSP was an average and not what was actually experienced in the sessions I tested – It would be awesome if WebPageTest could include a more granular breakdown of the SSL negotiation.

With that said, even without switching CAs there are clearly a few things Facebook can still do to improve how they are deploying SSL.

Regardless, I am still thrilled Facebook has decided to go down this route; the change to deploy Always-On-SSL will go a long way to help the visitors to their sites.

Ryan