I’ve been in the PKI space for a long time, and I’ll be honest, digging through Certificate Policies (CPs) and Certification Practice Statements (CPSs) is far from my favorite task. But as tedious as they can be, these documents serve real, high-value purposes. When you approach them thoughtfully, the time you invest is anything but wasted.

What a CPS Is For

Beyond satisfying checkbox compliance, a solid CPS should:

- Build trust by showing relying parties how the CA actually operates.

- Guide subscribers by spelling out exactly what is required to obtain a certificate.

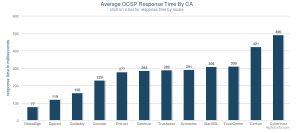

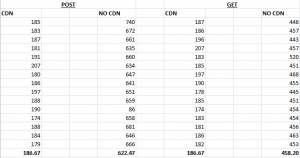

- Clarify formats by describing certificate profiles, CRLs, and OCSP responses so relying parties know what to expect.

- Enable oversight by giving auditors, root store programs, and researchers a baseline to compare against real-world issuance.

If a CPS fails at any of these, it fails in its primary mission.

Know Your Audience

A CPS is not just for auditors. It must serve subscribers who need to understand their obligations, relying parties weighing whether to trust a certificate, and developers, security researchers, and root store operators evaluating compliance and interoperability.

The best documents speak to all of these readers in clear, plain language without burying key points under mountains of boilerplate.

A useful parallel is privacy policies or terms of service documents. Some are written like dense legal contracts, full of cross-references and jargon. Others aim for informed consent and use plain language to help readers understand what they are agreeing to. CPs and CPSs should follow that second model.

Good Examples Do Exist

If you’re looking for CPS documents that get the basics right, Google Trust Services and Fastly are two strong models.

There are many ways to evaluate a CPS, but given the goals of these documents, fundamental tests of “good” would certainly include:

- Scope clarity: Is it obvious which root certificates the CPS covers?

- Profile fidelity: Could a reader recreate reference certificates that match what the CA actually issues?

Most CPSs fail even these basic checks. Google and Fastly pass, and their structure makes independent validation relatively straightforward. Their documentation is not just accurate; it is structured to support validation, monitoring, and trust.

Where Reality Falls Short

Unfortunately, most CPSs today don’t meet even baseline expectations. Many lack clear scope. Many don’t describe what the issued certificates will look like in a way that can be independently verified. Some fail to align with basics like RFC 3647, the framework they are supposed to follow.

Worse still, many CPS documents fail to explain whether or how they meet the requirements they claim to comply with. That includes not just root program expectations, but also standards like:

- Server Certificate Baseline Requirements

- S/MIME Baseline Requirements

- Network and Certificate System Security Requirements

These documents may not need to replicate every technical detail, but they should objectively demonstrate awareness of and alignment with these core expectations. Without that, it’s difficult to expect trust from relying parties, browsers, or anyone else depending on the CA’s integrity.

Even more concerning, many CPS documents don’t fully reflect the requirements of the root programs that grant them inclusion.

The Cost of Getting It Wrong

These failures are not theoretical. They have led to real-world consequences.

Take Bug 1962829, for example, a recent incident involving Microsoft PKI Services. “A typo” introduced during a CPS revision misstated the presence of the keyEncipherment bit in some certificates. The error made it through publication and multiple reviews, even as millions of certificates were issued under a document that contradicted actual practice.

The result? Distrust risks, revocation discussions, and a prolonged, public investigation.

The Microsoft incident reveals a deeper problem: CAs that lack proper automation between their documented policies and actual certificate issuance. This wasn’t just a documentation error. It exposed the absence of systems that would automatically catch such discrepancies before millions of certificates were issued under incorrect policies.
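To make the idea of catching such discrepancies concrete, here is a minimal sketch of a drift check that compares the key usage bits a CPS documents against what issued certificates actually carry. Everything in it is an assumption for illustration: the `DOCUMENTED_KEY_USAGE` set, the dictionary-based certificate records, and the `find_key_usage_drift` helper are hypothetical, and the sample data does not reproduce the details of the incident above. A real deployment would read these values from parsed certificates rather than hand-written dictionaries.

```python
from collections import Counter

# Hypothetical: the key usage bits the CPS says this profile asserts.
DOCUMENTED_KEY_USAGE = {"digitalSignature"}


def find_key_usage_drift(documented, issued_certs):
    """Count key usage discrepancies between the CPS and issued certificates.

    Returns a Counter keyed by (kind, bit), where kind is "undocumented"
    (bit present in a certificate but not in the CPS) or "missing"
    (bit promised by the CPS but absent from a certificate).
    """
    drift = Counter()
    for cert in issued_certs:
        observed = set(cert["key_usage"])
        for bit in observed - documented:
            drift[("undocumented", bit)] += 1
        for bit in documented - observed:
            drift[("missing", bit)] += 1
    return drift


# Hypothetical sample of issued certificates (plain records, not real X.509).
issued = [
    {"serial": "01", "key_usage": ["digitalSignature", "keyEncipherment"]},
    {"serial": "02", "key_usage": ["digitalSignature", "keyEncipherment"]},
    {"serial": "03", "key_usage": ["digitalSignature"]},
]

drift = find_key_usage_drift(DOCUMENTED_KEY_USAGE, issued)
for (kind, bit), count in sorted(drift.items()):
    print(f"{kind} {bit}: {count} certificate(s)")
```

Run against a sample of recent issuance on a schedule, a check like this would flag a mismatch after a handful of certificates rather than after millions.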

This isn’t an isolated case. CP and CPS “drift” from actual practices has played a role in many other compliance failures and trust decisions. This post discusses CA distrust, and misissuance stemming from a CP or CPS that does not match observable reality is certainly a common factor.

Accuracy Is Non-Negotiable

Some voices in the ecosystem now suggest that when a CPS is discovered to be wrong, the answer is simply to patch the document retroactively and move on. This confirms what I have said for ages: too many CAs want the easy way out, patching documents after problems surface rather than investing in the automation and processes needed to prevent mismatches in the first place.

That approach guts the very purpose of a CPS. Making it easier for CAs to violate their commitments creates perverse incentives to avoid investing in proper compliance infrastructure.

Accountability disappears if a CA can quietly “fix” its promises after issuance. Audits lose meaning because the baseline keeps shifting. Relying-party trust erodes the moment documentation no longer reflects observable reality.

A CPS must be written by people who understand the CA’s actual issuance flow. It must be updated in lock-step with code and operational changes. And it must be amended before new types of certificates are issued. Anything less turns it into useless marketing fluff.

Make the Document Earn Its Keep

Treat the CPS as a living contract:

- Write it in plain language that every audience can parse.

- Tie it directly to automated linting so profile deviations are caught before issuance. Good automation makes policy violations nearly impossible; without it, even simple typos can lead to massive compliance failures.

- Publish all historical versions so the version details in the document are obvious and auditable. Better yet, maintain CPS documents in a public git repository with markdown versions that make change history transparent and machine-readable.

- Run every operational change through a policy-impact checklist before it reaches production.
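As a rough illustration of tying the CPS to automated linting, the sketch below gates issuance on a machine-readable profile. The `Profile` fields, the `TLS_PROFILE` values (including the 90-day validity), and the `lint_before_issuance` function are all hypothetical; the to-be-signed certificate is modeled as a plain dictionary rather than real ASN.1. Established certificate linters cover far more ground; the point here is only that the signer refuses anything the documented profile does not allow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Hypothetical machine-readable form of one CPS certificate profile."""
    name: str
    max_validity_days: int
    key_usage: frozenset
    extended_key_usage: frozenset


class ProfileViolation(Exception):
    """Raised when a to-be-signed certificate deviates from its profile."""


def lint_before_issuance(tbs, profile):
    """Raise ProfileViolation listing every deviation; return None if clean."""
    problems = []
    if tbs["validity_days"] > profile.max_validity_days:
        problems.append(
            f"validity {tbs['validity_days']}d exceeds {profile.max_validity_days}d"
        )
    if frozenset(tbs["key_usage"]) != profile.key_usage:
        problems.append(f"key usage {sorted(tbs['key_usage'])} not in profile")
    if frozenset(tbs["extended_key_usage"]) != profile.extended_key_usage:
        problems.append(f"EKU {sorted(tbs['extended_key_usage'])} not in profile")
    if problems:
        raise ProfileViolation(f"{profile.name}: " + "; ".join(problems))


# Hypothetical profile values, chosen only for the example.
TLS_PROFILE = Profile(
    name="tls-subscriber",
    max_validity_days=90,
    key_usage=frozenset({"digitalSignature"}),
    extended_key_usage=frozenset({"serverAuth"}),
)

good = {
    "validity_days": 90,
    "key_usage": ["digitalSignature"],
    "extended_key_usage": ["serverAuth"],
}
lint_before_issuance(good, TLS_PROFILE)  # clean: nothing is raised

bad = dict(good, key_usage=["digitalSignature", "keyEncipherment"])
try:
    lint_before_issuance(bad, TLS_PROFILE)
except ProfileViolation as exc:
    print("blocked:", exc)
```

The design choice that matters is that the profile is data, not prose. If the same data also generates the profile tables in the published CPS, the document and the issuance pipeline cannot disagree without someone editing one of them by hand.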

If you expect others to trust your certificates, your public documentation must prove you deserve that trust. Done right, a CPS is one of the strongest signals of a CA’s competence and professionalism. Done wrong, or patched after the fact, it is worse than useless.

Root programs need to spend time documenting the minimum criteria that these documents must meet. Clear, measurable standards would give CAs concrete targets and make enforcement consistent across the ecosystem. Root programs that tolerate retroactive fixes inadvertently encourage CAs to cut corners on the systems and processes that would prevent these problems entirely.

CAs, meanwhile, need to ask themselves hard questions: Can someone unfamiliar with internal operations use your CPS to accomplish the goals outlined in this post? Can they understand your certificate profiles, validation procedures, and operational commitments without insider knowledge?

More importantly, CAs must design their processes around ensuring these documents are always accurate and up to date. This means implementing testing to verify that documentation actually matches reality, not just hoping it does.
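One way such a test could look, assuming the CPS is kept in markdown and describes each profile in a simple two-column table: parse the table out of the document and compare it with the configuration the issuance system enforces. The CPS excerpt, the field names, the `ENFORCED_CONFIG` dictionary, and both helper functions are hypothetical, and the parser only handles the simple table shape shown here.

```python
# Hypothetical excerpt of a CPS maintained as markdown.
CPS_MARKDOWN = """\
### 7.1 Certificate Profile

| Field | Value |
|-------|-------|
| validityDays | 90 |
| keyUsage | digitalSignature |
| extendedKeyUsage | serverAuth |
"""

# Hypothetical: what the issuance pipeline is actually configured to enforce.
ENFORCED_CONFIG = {
    "validityDays": "90",
    "keyUsage": "digitalSignature",
    "extendedKeyUsage": "serverAuth",
}


def parse_profile_table(markdown):
    """Extract {field: value} from two-column markdown table rows."""
    profile = {}
    for line in markdown.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 2:
            continue  # not a two-column table row
        field, value = cells
        if field == "Field" or set(field) <= set("-: "):
            continue  # header row or separator row
        profile[field] = value
    return profile


def diff_profiles(documented, enforced):
    """Return {field: (documented, enforced)} for every field that differs."""
    fields = sorted(set(documented) | set(enforced))
    return {
        field: (documented.get(field), enforced.get(field))
        for field in fields
        if documented.get(field) != enforced.get(field)
    }


mismatches = diff_profiles(parse_profile_table(CPS_MARKDOWN), ENFORCED_CONFIG)
assert mismatches == {}, f"CPS and issuance config disagree: {mismatches}"
```

Wired into continuous integration for both the CPS repository and the issuance configuration, a check of this kind fails the build whenever either side changes without the other.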

The Bottom Line

CPS documents matter far more than most people think. They are not busywork. They are the public guarantee that a CA knows what it is doing and is willing to stand behind it, in advance, in writing, and in full view of the ecosystem.