Windows has a feature called Automatic Root Update. When CryptoAPI builds a certificate chain and exhausts the locally installed root certificates, it downloads (if it has not already done so) a list of certificates it should trust.

This list contains attributes about those certificates (hashes of their subject names and keys, what Microsoft believes they should be trusted for, etc.).

If it finds the certificate it needs in that list, it downloads and installs it.
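To make the order of operations concrete, here is a toy Python model of that lookup. It is a simplification, not how CryptoAPI is implemented: it assumes the chain builder matches a missing issuer purely by key identifier, and `fetch_ctl` is a hypothetical stand-in that returns one canned entry (the Microsoft Root Certificate Authority entry examined later in this post) instead of downloading anything.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CtlEntry:
    """One simplified CTL entry: which file to fetch and how to match it."""
    thumbprint: str       # names the .crt file on the download server
    key_identifier: str   # compared against the issuer the chain builder wants


@dataclass
class RootResolver:
    # Locally installed roots, keyed by key identifier (a simplification).
    local_roots: Dict[str, str] = field(default_factory=dict)
    # None until the CTL has been "downloaded" once.
    ctl: Optional[List[CtlEntry]] = None

    def fetch_ctl(self) -> List[CtlEntry]:
        # Hypothetical stand-in for downloading and parsing authrootstl.cab.
        return [CtlEntry("CDD4EEAE6000AC7F40C3802C171E30148030C072",
                         "0EAC826040562797E52513FC2AE10A539559E4A4")]

    def resolve(self, issuer_key_id: str) -> str:
        # 1. Chain building tries the locally installed roots first.
        if issuer_key_id in self.local_roots:
            return "local"
        # 2. Only then is the CTL fetched, and only if not already fetched.
        if self.ctl is None:
            self.ctl = self.fetch_ctl()
        # 3. A matching CTL entry means the root is downloaded and installed.
        for entry in self.ctl:
            if entry.key_identifier == issuer_key_id:
                self.local_roots[issuer_key_id] = entry.thumbprint
                return "installed " + entry.thumbprint
        return "untrusted"
```

The first `resolve` call for that key identifier "installs" the root, a second call finds it locally, and an identifier that is not in the list stays untrusted.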

So how does this technically work?

There is a pre-configured location where Windows looks for this Certificate Trust List (CTL): http://www.download.windowsupdate.com/msdownload/update/v3/static/trustedr/en/authrootstl.cab.

NOTE: Technically, different language codes are used in the URL depending on the client locale, but to the best of my knowledge they all map to the same files today.
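As a small sketch, assuming the language code is simply the path segment right before the file name (`en` above), the CTL URL could be built like this. `ctl_url` is a hypothetical helper name, not part of any Windows API.

```python
BASE = "http://www.download.windowsupdate.com/msdownload/update/v3/static/trustedr"


def ctl_url(lang: str = "en") -> str:
    """Build the authrootstl.cab URL for a given language code.

    Assumes the locale is just the path segment before the file name; per the
    note above, the different codes appear to serve the same file today.
    """
    return f"{BASE}/{lang}/authrootstl.cab"
```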

This list is downloaded and decompressed; you can do the same thing on Windows (with curl) like this:

```bat
mkdir authroot
cd authroot
curl -o authrootstl.cab http://www.download.windowsupdate.com/msdownload/update/v3/static/trustedr/en/authrootstl.cab
expand authrootstl.cab .\authroot.stl
```
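If you would rather script the download, a minimal Python sketch follows. It assumes the server returns the cabinet file directly and sanity-checks the "MSCF" signature that Microsoft Cabinet files start with. Python's standard library cannot unpack CAB archives, so the `expand` step above is still needed afterwards. `looks_like_cab` and `download_ctl_cab` are hypothetical helper names.

```python
import urllib.request

CTL_URL = ("http://www.download.windowsupdate.com/msdownload/update/v3/"
           "static/trustedr/en/authrootstl.cab")


def looks_like_cab(data: bytes) -> bool:
    # Microsoft Cabinet files begin with the 4-byte signature "MSCF".
    return data[:4] == b"MSCF"


def download_ctl_cab(path: str = "authrootstl.cab") -> None:
    # Fetch the whole response into memory; the CTL cabinet is small.
    with urllib.request.urlopen(CTL_URL) as resp:
        data = resp.read()
    if not looks_like_cab(data):
        raise ValueError("response does not look like a CAB file")
    # Write in binary mode so nothing mangles the archive.
    with open(path, "wb") as f:
        f.write(data)
```

Calling `download_ctl_cab()` from the `authroot` directory should leave `authrootstl.cab` ready for `expand`.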

You can open authroot.stl in Explorer and look at some of its contents, but for the purpose of this exercise that won’t be necessary.

This file is a signed PKCS #7 file; Windows expects it to be signed with key material Microsoft owns (and protects dearly).
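A rough way to poke at that claim without OpenSSL is to DER-encode the relevant OBJECT IDENTIFIERs and look for their bytes in the file. This is a heuristic sketch only: it neither parses the structure nor verifies the signature. It assumes the PKCS #7 signedData OID (1.2.840.113549.1.7.2) and the Microsoft CTL content-type OID (1.3.6.1.4.1.311.10.1) both appear verbatim; `encode_oid` and `describe_stl` are hypothetical helpers.

```python
def encode_oid(dotted: str) -> bytes:
    """DER-encode an OBJECT IDENTIFIER (tag 0x06) from dotted notation."""
    arcs = [int(a) for a in dotted.split(".")]
    # The first two arcs are packed into a single value: 40 * first + second.
    values = [40 * arcs[0] + arcs[1]] + arcs[2:]
    body = bytearray()
    for v in values:
        # Base-128, most significant group first; every byte except the
        # last one of each value has its high bit set.
        chunk = [v & 0x7F]
        v >>= 7
        while v:
            chunk.append(0x80 | (v & 0x7F))
            v >>= 7
        body.extend(reversed(chunk))
    # Short-form length is enough for these OIDs (well under 128 bytes).
    return bytes([0x06, len(body)]) + bytes(body)


SIGNED_DATA = "1.2.840.113549.1.7.2"   # PKCS #7 signedData
OID_CTL = "1.3.6.1.4.1.311.10.1"       # Microsoft CTL content type


def describe_stl(path: str = "authroot.stl") -> dict:
    with open(path, "rb") as f:
        der = f.read()
    return {
        "starts_with_sequence": der[:1] == b"\x30",
        "has_signed_data_oid": encode_oid(SIGNED_DATA) in der,
        "has_ctl_oid": encode_oid(OID_CTL) in der,
    }
```

The encoder can be cross-checked against the asn1parse dump later in this post: the property value `300C060A2B0601040182373C0302` contains `encode_oid("1.3.6.1.4.1.311.60.3.2")`.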

The file contains a Microsoft-specific ContentInfo structure for CTLs and a few special attributes. WinCrypt.h defines the OIDs for these attributes, but for brevity’s sake here are the ones you will need for this file:

```
1.3.6.1.4.1.311.10.1 OID_CTL
1.3.6.1.4.1.311.10.3.9 OID_ROOT_LIST_SIGNER
1.3.6.1.4.1.311.10.11.9 OID_CERT_PROP_ID_METAEKUS
1.3.6.1.4.1.311.10.11.11 CERT_FRIENDLY_NAME_PROP_ID
1.3.6.1.4.1.311.10.11.20 OID_CERT_KEY_IDENTIFIER_PROP_ID
1.3.6.1.4.1.311.10.11.29 OID_CERT_SUBJECT_NAME_MD5_HASH_PROP_ID
1.3.6.1.4.1.311.10.11.83 CERT_ROOT_PROGRAM_CERT_POLICIES_PROP_ID
1.3.6.1.4.1.311.10.11.98 OID_CERT_PROP_ID_PREFIX_98
1.3.6.1.4.1.311.10.11.105 OID_CERT_PROP_ID_PREFIX_105
1.3.6.1.4.1.311.20.2 szOID_ENROLL_CERTTYPE_EXTENSION
1.3.6.1.4.1.311.21.1 szOID_CERTSRV_CA_VERSION
1.3.6.1.4.1.311.60.1.1 OID_ROOT_PROGRAM_FLAGS_BITSTRING
```

Copy this list of OIDs and constants into a file called authroot.oids, one entry per line with no blank lines in between, and put it in the directory where we extracted the STL file.
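If you prefer to generate the file rather than copy and paste it, here is a small Python sketch. It writes each "numeric OID, space, name" pair on its own line with Unix line endings. The names are the ones used in this post, and `oid_file_text` and `write_oid_file` are hypothetical helpers.

```python
# OID -> name pairs from the list above (names as used in this post).
OIDS = {
    "1.3.6.1.4.1.311.10.1": "OID_CTL",
    "1.3.6.1.4.1.311.10.3.9": "OID_ROOT_LIST_SIGNER",
    "1.3.6.1.4.1.311.10.11.9": "OID_CERT_PROP_ID_METAEKUS",
    "1.3.6.1.4.1.311.10.11.11": "CERT_FRIENDLY_NAME_PROP_ID",
    "1.3.6.1.4.1.311.10.11.20": "OID_CERT_KEY_IDENTIFIER_PROP_ID",
    "1.3.6.1.4.1.311.10.11.29": "OID_CERT_SUBJECT_NAME_MD5_HASH_PROP_ID",
    "1.3.6.1.4.1.311.10.11.83": "CERT_ROOT_PROGRAM_CERT_POLICIES_PROP_ID",
    "1.3.6.1.4.1.311.10.11.98": "OID_CERT_PROP_ID_PREFIX_98",
    "1.3.6.1.4.1.311.10.11.105": "OID_CERT_PROP_ID_PREFIX_105",
    "1.3.6.1.4.1.311.20.2": "szOID_ENROLL_CERTTYPE_EXTENSION",
    "1.3.6.1.4.1.311.21.1": "szOID_CERTSRV_CA_VERSION",
    "1.3.6.1.4.1.311.60.1.1": "OID_ROOT_PROGRAM_FLAGS_BITSTRING",
}


def oid_file_text(oids: dict) -> str:
    # One "numeric-OID name" pair per line, no blank lines in between.
    return "".join(f"{oid} {name}\n" for oid, name in oids.items())


def write_oid_file(path: str = "authroot.oids") -> None:
    with open(path, "w", encoding="ascii", newline="\n") as f:
        f.write(oid_file_text(OIDS))
```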

We can now use the following OpenSSL command to inspect the contents in more detail:

```bat
openssl asn1parse -oid authroot.oids -in authroot.stl -inform DER
```

There is a lot of information in here, but for this exercise we will just download the certificates referenced in this list.

To do that, we need to understand which of the attributes are used to construct the URL for downloading the actual certificate.

The values we want start just after OID_ROOT_LIST_SIGNER. The first one is “CDD4EEAE6000AC7F40C3802C171E30148030C072”, and its entry looks like this:

```
SEQUENCE
128:d=8 hl=2 l= 20 prim: OCTET STRING [HEX DUMP]:CDD4EEAE6000AC7F40C3802C171E30148030C072
150:d=8 hl=3 l= 246 cons: SET
153:d=9 hl=2 l= 30 cons: SEQUENCE
155:d=10 hl=2 l= 10 prim: OBJECT :OID_CERT_PROP_ID_PREFIX_105
167:d=10 hl=2 l= 16 cons: SET
169:d=11 hl=2 l= 14 prim: OCTET STRING [HEX DUMP]:300C060A2B0601040182373C0302
185:d=9 hl=2 l= 32 cons: SEQUENCE
187:d=10 hl=2 l= 10 prim: OBJECT :OID_CERT_SUBJECT_NAME_MD5_HASH_PROP_ID
199:d=10 hl=2 l= 18 cons: SET
201:d=11 hl=2 l= 16 prim: OCTET STRING [HEX DUMP]:F0C402F0404EA9ADBF25A03DDF2CA6FA
219:d=9 hl=2 l= 36 cons: SEQUENCE
221:d=10 hl=2 l= 10 prim: OBJECT :OID_CERT_KEY_IDENTIFIER_PROP_ID
233:d=10 hl=2 l= 22 cons: SET
235:d=11 hl=2 l= 20 prim: OCTET STRING [HEX DUMP]:0EAC826040562797E52513FC2AE10A539559E4A4
257:d=9 hl=2 l= 48 cons: SEQUENCE
259:d=10 hl=2 l= 10 prim: OBJECT :OID_CERT_PROP_ID_PREFIX_98
271:d=10 hl=2 l= 34 cons: SET
273:d=11 hl=2 l= 32 prim: OCTET STRING [HEX DUMP]:885DE64C340E3EA70658F01E1145F957FCDA27AABEEA1AB9FAA9FDB0102D4077
307:d=9 hl=2 l= 90 cons: SEQUENCE
309:d=10 hl=2 l= 10 prim: OBJECT :CERT_FRIENDLY_NAME_PROP_ID
321:d=10 hl=2 l= 76 cons: SET
323:d=11 hl=2 l= 74 prim: OCTET STRING [HEX DUMP]:4D006900630072006F0073006F0066007400200052006F006F007400200043006500720074006900660069006300610074006500200041007500740068006F0072006900740079000000
```
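The CERT_FRIENDLY_NAME_PROP_ID value in that dump is readable once you notice every other byte is 00: it appears to be UTF-16LE text with a trailing NUL terminator. A one-line Python sketch (the helper name `decode_friendly_name` is hypothetical) recovers the name:

```python
def decode_friendly_name(hex_dump: str) -> str:
    # Assumes UTF-16LE text terminated by a NUL character, as the dump suggests.
    return bytes.fromhex(hex_dump).decode("utf-16-le").rstrip("\x00")


FRIENDLY_NAME_HEX = (
    "4D006900630072006F0073006F00660074002000"          # "Microsoft "
    "52006F006F0074002000"                              # "Root "
    "430065007200740069006600690063006100740065002000"  # "Certificate "
    "41007500740068006F0072006900740079000000"          # "Authority" + NUL
)
```

Running `decode_friendly_name(FRIENDLY_NAME_HEX)` should give the same name you see in the certificate itself.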

We can download this certificate with the following command:

```bat
curl -o CDD4EEAE6000AC7F40C3802C171E30148030C072.crt http://www.download.windowsupdate.com/msdownload/update/v3/static/trustedr/en/CDD4EEAE6000AC7F40C3802C171E30148030C072.crt
```
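The pattern is simply the trustedr base path, the 20-byte identifier from the entry in hex, and a `.crt` extension. As far as I know, that identifier is the certificate's SHA-1 thumbprint, which is why it is 40 hex digits long. A small Python sketch that builds and validates such a URL (the helper `cert_url` is hypothetical):

```python
import re

TRUSTEDR_BASE = ("http://www.download.windowsupdate.com/msdownload/update/v3/"
                 "static/trustedr/en/")


def cert_url(thumbprint: str) -> str:
    """URL of a root certificate named after its 40-hex-digit identifier."""
    # Normalise the way thumbprints are often copied (spaces, lowercase).
    tp = thumbprint.replace(" ", "").upper()
    if not re.fullmatch(r"[0-9A-F]{40}", tp):
        raise ValueError(f"not a 40-hex-digit thumbprint: {thumbprint!r}")
    return f"{TRUSTEDR_BASE}{tp}.crt"
```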

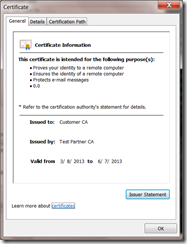

If we look at the contents of the certificate, we can see this is the 4096-bit “Microsoft Root Certificate Authority”.

The next entry in the list is 245C97DF7514E7CF2DF8BE72AE957B9E04741E85, then 18F7C1FCC3090203FD5BAA2F861A754976C8DD25, and so forth.

We could parse the ASN.1 to extract these values, iterate over them, and download them all if we wanted. Of course, if we wanted all of the root certificates, we might as well just download the most recent root update package here: http://www.microsoft.com/en-us/download/details.aspx?id=29434

It contains the same information, just in a self-contained format (this is what’s used by down-level clients that do not have Automatic Root Update).
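If you do want to take the parsing route, a low-effort sketch is to save the `openssl asn1parse` output to a text file and scrape it. This assumes the list entries keep the layout seen in the dump above: each identifier is a 20-byte primitive OCTET STRING at depth 8, while property values such as the 20-byte key identifier sit deeper (depth 11). A different version of the file could nest things differently, so treat `depth=8` as an observation, not a rule; `thumbprints` is a hypothetical helper.

```python
import re
from typing import List

# Matches asn1parse lines such as
# "128:d=8  hl=2 l=  20 prim: OCTET STRING      [HEX DUMP]:CDD4..."
LINE = re.compile(
    r"d=(\d+)\s+hl=\d+\s+l=\s*(\d+)\s+prim:\s+OCTET STRING\s+"
    r"\[HEX DUMP\]:([0-9A-F]+)"
)


def thumbprints(asn1parse_output: str, depth: int = 8) -> List[str]:
    """Collect 20-byte OCTET STRINGs found at the given nesting depth."""
    found = []
    for line in asn1parse_output.splitlines():
        m = LINE.search(line)
        if m and int(m.group(1)) == depth and int(m.group(2)) == 20:
            found.append(m.group(3))
    return found
```

Each value returned can then be appended to `http://www.download.windowsupdate.com/msdownload/update/v3/static/trustedr/en/` with a `.crt` extension and fetched exactly like the curl example above.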

Anyhow, hopefully this will be useful to someone,

Ryan

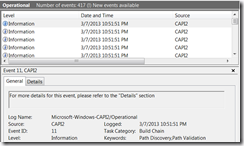

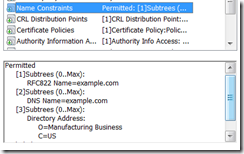


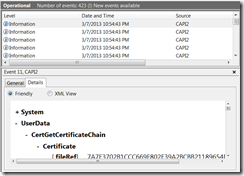

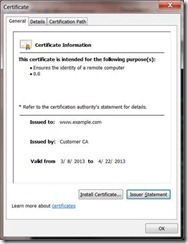


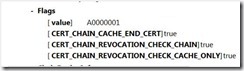

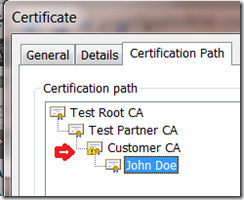
