This morning I saw a number of posts on Twitter about Flame and the attacks use of a collision attack against MD5.

This flurry of posts was brought on about by Venafi, they have good tools for enterprises for assessing what certificates they have in their environments, what algorithms are used, when the certificates expire, etc. These tools are also part of their suite used for certificate management.

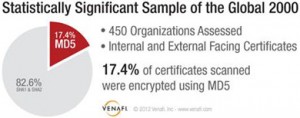

They published some statistics on the usage of MD5, specifically they say they see MD5 in 17.4% of the certificates seen by their assessment tools. Their assessment tool can be thought of as a combination of nmap and sslyze with a reporting module.

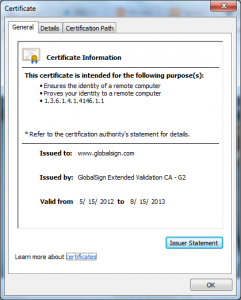

Based on this we can assume the certificates they found are limited to SSL certificates, this by itself is interesting but not indicative of being vulnerable to the same attack that was used by Flame in this case.

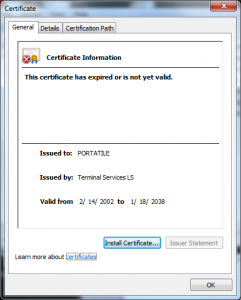

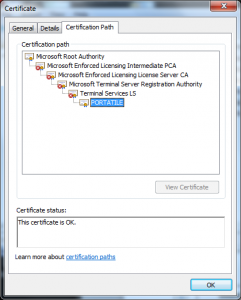

Don’t get me wrong Microsoft absolutely should not have been issuing certificates signed using MD5 but the collision was not caused (at least exclusively) by their use of MD5; it was a union of:

- Use of non-random serial numbers

- Use of 512 bit RSA keys

- Use of MD5 as a hashing algorithm

- Poorly thought out certificate profiles

If any one of these things changed the attack would have become more difficult, additionally if they had their PKI thought out well the only thing at risk would have been their license revenue.

If it was strictly about MD5 Microsoft’s announcement the other day about blocking RSA keys smaller than 1024 bit would have also included MD5 – but it did not.

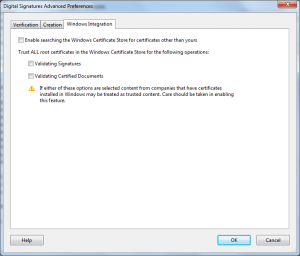

So what does this mean for you? Well you shouldn’t be using MD5 and if you are you should stop and question your vendors who are sending you down the path of doing so but you also need to take a holistic look at your use of PKI and make sure you are actually using best practices (key length, serial numbers, etc). With that said the sky is not falling, walk don’t run to the nearest fire escape.

Ryan